Weekly Post: October 07, 2025

Weekly Roundup, AI Developments (OCTOBER 7 WEEKLY POST)

The first week of October brought a cascade of turning points across the AI landscape—from OpenAI’s Sora 2 and Cameo redefining creative production to the industry-wide reckoning with vanishing training data. Sora 2’s leap in video realism, audio fidelity, and meme-driven remixing hints at a future where AI tools rival film studios, yet also expose new fault lines in intellectual property and brand governance. Beneath the spectacle, researchers and executives alike confronted a deeper reality: the internet’s supply of human-made data is drying up, forcing companies toward synthetic generation, recursive model training, and paywalled data licensing.

But if Sora blurred creative boundaries, the Tilly Norwood controversy shattered cultural ones. When news outlets revealed that “Norwood,” a photorealistic British “actress,” was entirely AI-generated—with her IMDb page and public appearances curated by marketing teams—Hollywood and labor unions erupted. The incident reignited debates over synthetic celebrity, consent, and credit: who gets recognition, and payment, when a digital persona headlines a campaign or film? For executives, it was a preview of coming brand dilemmas—AI talent may be cheaper and tireless, but its use risks public backlash and new calls for transparency.

Meanwhile, the definition of creation itself stretched further. A Washington Post investigation described how robots assisted in in-vitro fertilization, placing embryo cells with microscopic precision—“babies made by robots.” And on the political front, President Trump posted deepfake videos mocking rivals, spotlighting how AI video tools can weaponize influence as easily as they entertain. Together, these developments mark a new phase of AI’s evolution: from augmenting creativity to replacing human presence in art, life, and discourse. For business leaders, the signal is unmistakable—ethical governance and data integrity now determine competitive resilience as much as innovation itself.

Sora 2, Cameos & AI Slop — AI for Humans (YouTube), Oct 3, 2025

Summary

OpenAI’s Sora 2 dominated this week’s discussion: the new release adds audio, markedly improved physics/world-modeling, and fast 10-second clip generation that convincingly mimics styles from archival footage to gameplay and ’90s infomercials. Quality varies and default resolution is modest, but the leap in temporal coherence and “scene understanding” is clear. Hosts highlight curiosities like Sora rendering HTML interactions on screen and generating music that resembles well-known themes—signaling powerful, multi-modal synthesis under the hood.

The headline feature is Cameo: users capture a short selfie + head turns to create an avatar and opt-in to let others remix them. Sora 2 also ships with a social feed and one-tap remix of public clips, turning AI video into a memetic network. The result: viral cameo mashups (including Sam Altman everywhere) and rapid “time-to-meme.” But it also spotlights IP risk: convincing riffs on South Park, Pokémon, and film scenes raise questions about training data, output rights, and imminent nerfing/filters.

Commercially, OpenAI appears to be testing ad units (sponsored videos, topic cards) and Buy in ChatGPT (early Shopify integrations), framing entertainment-style engagement as a revenue bridge while core research pursues AGI/science. The hosts also soft-launch AndThen, an interactive AI audio app, noting their own trailer was made faster/cheaper with AI tools—a live case study in how small teams can ship higher-production creative with lightweight workflows.

Relevance for Business

- Content velocity & cost: Sora 2-level tooling collapses production timelines and budgets for marketing, explainers, and ads.

- New distribution loops: The remix feed + Cameo mechanics create TikTok-like discovery for brand UGC and community co-creation.

- Risk & governance: Trademark/IP, deepfake consent, and brand safety policies need updating before teams experiment at scale.

- Commerce in chat: In-chat shopping hints at performance channels that merge search, content, and checkout.

Calls to Action

🔹 Stand up a safe-sandbox: designate prompts, use consented talent, and log generations/rights.

🔹 Pilot a 10–15s ad/test: one message, one CTA—compare cost-per-view vs. your current short-form pipeline.

🔹 Create a Cameo policy: participation rules, likeness consent, opt-out process, and review gates.

🔹 Update IP & brand guidelines for AI video (music lookalikes, character styles, logos).

🔹 Evaluate Buy in ChatGPT and social ad tests for Q4 experiments; set guardrails on attribution and ROAS.

🔹 Add an incident playbook for takedowns/misattribution (who contacts whom, within how many hours).

Summary created by ReadAboutAI.com

https://www.youtube.com/watch?v=mYd8VgGtw5A: October 07, 2025

How to Use AI to Hone Your Emotional Intelligence

Fast Company, Sept. 27, 2025

Summary:

A growing number of organizations are using AI-powered immersive roleplay tools to train employees in emotional intelligence (EQ)—skills such as empathy, adaptability, and communication that are increasingly critical to team success. These “soft skills,” once considered secondary, now represent a major productivity factor, costing U.S. firms an estimated $160 billion annually when lacking. Through AI-based conversational simulations, employees can practice real-time responses to conflict, customer complaints, and leadership challenges in psychologically safe virtual environments. The systems adjust dynamically to tone, language, and emotional cues, offering personalized feedback and building what experts call “emotional muscle memory.”

Organizations across healthcare, aviation, and retail have seen measurable performance gains, including shorter training cycles and higher employee confidence. As automation expands, AI’s paradoxical role is becoming clear: it’s replacing some tasks while simultaneously helping humans become more human at work. Forward-thinking leaders now see emotional intelligence not as optional, but as a core competency for the AI-driven workplace.

Relevance for Business

For SMB leaders, this trend highlights how AI training can directly improve culture, retention, and leadership performance. As teams adapt to hybrid work and automation, emotional intelligence will increasingly determine competitive advantage and customer satisfaction.

Calls to Action

🔹 Integrate AI-driven roleplay platforms for leadership and customer service training.

🔹 Prioritize soft-skill metrics in performance reviews and development programs.

🔹 Use AI tools for communication diagnostics to enhance feedback and collaboration.

🔹 Balance technical and human skill-building in digital transformation initiatives.

Summary created by ReadAboutAI.com

https://www.fastcompany.com/91410851/how-to-use-ai-to-hone-your-emotional-intelligence

Hollywood’s First AI “Actress” Sparks Industry Backlash

Washington Post, Sept. 30, 2025 & Fast Company, Oct. 2, 2025

Summary:

A digital persona named Tilly Norwood, developed by Dutch producer Eline van der Velden through her AI talent studio Xicoia, has ignited controversy across Hollywood. Marketed as the world’s first AI actress, Norwood’s debut at the Zurich Film Festival attracted attention from talent agencies—but fierce opposition from actors’ guilds. SAG-AFTRAcondemned the project, declaring that creativity “should remain human-centered” and criticizing Norwood as a character “trained on the work of countless performers—without consent or compensation.” Many actors and filmmakers have publicly denounced agencies that consider representing AI characters, arguing this undermines the profession’s human and emotional essence.

The debate has since spilled beyond Hollywood into the online public sphere. Wikipedia editors, attempting to catalog Tilly Norwood, found themselves debating existential questions—whether she “exists,” what pronouns to use, and whether it’s accurate to call her an “actress” at all. The incident highlights a growing cultural and linguistic confusion surrounding synthetic performers and AI-generated media personas. Even as Norwood gains tens of thousands of followers on Instagram, the broader conversation underscores a societal struggle to define authorship, identity, and labor in the age of generative AI.

Relevance for Business

The Tilly Norwood controversy offers a cautionary case study in how AI-generated personalities challenge traditional industries and legal definitions. For SMB executives, it highlights the importance of establishing ethical frameworks around AI content creation, digital likeness rights, and brand authenticity. As AI avatars and influencers grow in marketing and entertainment, businesses must anticipate intellectual property disputes, labor concerns, and consumer trust issues stemming from synthetic identity use.

Calls to Action

🔹 Audit digital content for potential copyright and likeness infringement if using AI models trained on human data.

🔹 Establish transparency policies—clearly disclose AI-generated spokespeople or influencers to maintain credibility.

🔹 Monitor regulatory developments in entertainment and digital identity to avoid future compliance risks.

🔹 Explore hybrid creative models—using AI for production efficiency while maintaining human oversight and authorship.

Summary created by ReadAboutAI.com

https://www.washingtonpost.com/business/2025/09/30/tilly-norwood-ai-actor/1e33fdf0-9e34-11f0-af12-ae28224a8694_story.html: October 07, 2025https://www.fastcompany.com/91414942/tilly-norwood-ai-actress-gets-wikipedia-page-confuses-editors?

The Alien Intelligence in Your Pocket

The Atlantic, Oct. 1, 2025

Summary:

As AI models grow more advanced and conversational, public debate has intensified over whether AI systems exhibit consciousness or merely simulate it. The article explores how leading thinkers—including Geoffrey Hinton, Anil Seth, and David Chalmers—argue that large language models (LLMs) may represent “alien intelligences” whose reasoning emerges from digital rather than biological substrates. Tech companies such as Anthropic, OpenAI, and Hume AI have begun funding research into AI welfare and moral agency, reflecting growing concern over potential “machine suffering” as models mimic emotional responses.

Yet skeptics like UC Berkeley’s Alison Gopnik warn that intelligence ≠ consciousness. The real risk may not be sentient AI, but rather the illusion of sentience—people attributing empathy and trust to algorithms that merely imitate it. The piece concludes that regardless of whether AI “feels,” its psychological and societal effects are already real, reshaping how humans relate to machines and one another.

Relevance for Business

For executives, the debate reinforces the need for clear messaging and ethical positioning in AI products. As users increasingly anthropomorphize chatbots, companies must manage expectations, liability, and emotional design—especially where customer relationships or decision-making are involved.

Calls to Action

🔹 Clarify marketing language to avoid implying AI sentience or emotional capacity.

🔹 Incorporate AI ethics and transparency training for marketing and product teams.

🔹 Design AI interfaces that prioritize usefulness over emotional manipulation.

🔹 Monitor developments in AI welfare and rights discourse to anticipate regulation.

Summary created by ReadAboutAI.com

https://www.theatlantic.com/technology/2025/10/ai-consciousness/683983/

Start-up Longeye Says Police Can Crack Cases Faster with This Chatbot

The Washington Post, Sept. 30, 2025

Summary:

Longeye, a San Francisco AI start-up, has developed a chatbot that helps police analyze vast evidence datasets, from phone calls to social media transcripts. Used by the Redmond (WA) Police Department, the system drastically reduced case-processing time—reviewing 60 hours of jail calls in minutes. Longeye’s investors, including Andreessen Horowitz, view it as a breakthrough for law enforcement overwhelmed by digital evidence. The AI links names and statements across recordings, citing source materials to ensure traceability.

However, critics warn the tool could expand surveillance and amplify data privacy risks, especially when AI errors (or “hallucinations”) influence investigations. Civil liberties groups caution that AI’s use in law enforcement should not normalize mass data collection or undermine due process. Longeye maintains that all data analyzed is lawfully obtained under warrants and processed under FBI-level security standards.

Relevance for Business

Longeye’s launch highlights a major AI-to-government shift—commercial startups applying generative tech to public-sector workloads. For SMBs, it signals expanding opportunities in AI document processing, compliance analytics, and data triage, as long as ethical safeguards remain central.

Calls to Action

🔹 Position AI products for compliance-heavy or evidence-driven sectors.

🔹 Build explainability features that cite original data sources.

🔹 Audit data governance and chain-of-custody for AI insights.

🔹 Engage with regulators early on ethical AI applications in public sectors.

Summary created by ReadAboutAI.com

https://www.washingtonpost.com/technology/2025/09/30/police-ai-longeye-chatbot/

Trump’s Deepfake Videos Escalate Political and Ethical Concerns

Forbes, Sept. 30, 2025 & Politico, Sept. 30, 2025

Summary:

Just hours before a potential U.S. government shutdown, President Donald Trump shared and then reposted a pair of AI-generated deepfake videos mocking Senate Minority Leader Chuck Schumer and House Minority Leader Hakeem Jeffries. The first video, shared on Truth Social, depicted Schumer using vulgar language and Jeffries wearing a sombrero and mustache while mariachi music played in the background. Both leaders quickly condemned the posts as bigoted and misleading, with Jeffries calling the content a “disgusting video” that distracts from real governance issues. Despite criticism, Trump followed up by posting another AI-edited clip of Jeffries’ MSNBC response—again digitally altering his appearance and amplifying racial stereotypes.

The controversy comes amid a growing wave of AI misinformation in politics, with experts warning of the normalization of synthetic media in campaign messaging. Forbes noted that Trump has repeatedly shared AI-generated or manipulated imagery, including a “Chipocalypse Now” poster and a “Medbeds” conspiracy video that falsely promised miracle cures. The Schumer-Jeffries videos mark the first major instance of a sitting U.S. president deploying AI deepfakes to ridicule opponents during an ongoing policy crisis, underscoring both the immediacy and danger of AI-driven political propaganda.

Relevance for Business

This episode underscores the urgent need for AI literacy and authenticity safeguards across sectors. As deepfake tools become more accessible, companies—especially SMBs—face new reputational, legal, and cybersecurity risks from synthetic media misuse. The Trump incident demonstrates how AI-generated content can shape narratives instantly, emphasizing the importance of truth verification, media monitoring, and brand integrity policies in the age of generative AI.

Calls to Action

🔹 Implement internal AI content policies to prevent misuse of generative tools for marketing or public messaging.

🔹 Adopt verification technologies to authenticate official videos and communications before release.

🔹 Train leadership teams on recognizing and responding to deepfake or manipulated content that could harm brand reputation.

🔹 Monitor regulatory updates as governments begin drafting legislation to address AI misinformation and digital identity ethics.

Summary created by ReadAboutAI.com

https://www.forbes.com/sites/siladityaray/2025/09/30/trump-posts-expletive-filled-ai-video-mocking-schumer-and-jeffries-democratic-leaders-respond/: October 07, 2025https://www.politico.com/live-updates/2025/09/30/congress/trump-posts-another-ai-video-00589339

Data for A.I. Training Is Disappearing Fast

The New York Times, July 19, 2024

Summary:

A landmark study by the Data Provenance Initiative (MIT-led) reveals that the internet data pipeline fueling AI model training is drying up. Among 14,000 web domains analyzed, 25% of high-quality data sources have now restricted access, either via robots.txt exclusions, paywalls, or new terms of service that block AI scrapers. Major sites like Reddit, StackOverflow, and news publishers have begun charging or suing AI companies for unauthorized use, intensifying what researchers call a “consent crisis.”

As data restrictions expand, AI firms risk hitting a “data wall”—a point where public information is either exhausted or locked behind legal and financial barriers. Some companies are turning to synthetic data, while others seek licensing deals (like OpenAI with News Corp and AP). Yet smaller AI startups and academic researchers may be frozen out, unable to pay for training data once freely available online. The report concludes that AI’s rapid commercialization has triggered a data backlash, forcing a rethink of transparency, consent, and ownership in machine learning.

Relevance for Business

The findings expose a coming bottleneck in data access and quality, critical for all industries using AI models. SMBs dependent on third-party models should anticipate rising costs, shifting licensing terms, and reduced transparency as data becomes proprietary.

Calls to Action

🔹 Audit data dependencies—know where your AI vendors source training material.

🔹 Track licensing trends to avoid compliance risks tied to scraped content.

🔹 Explore synthetic or in-house datasets for training and fine-tuning models.

🔹 Prepare for cost inflation in API and model access as data scarcity rises.

Summary created by ReadAboutAI.com

https://www.nytimes.com/2024/07/19/technology/ai-data-restrictions.html

Disappearing Data Discussion:

“WHEN THE INTERNET RUNS DRY: THE DISAPPEARING AI TRAINING DATA”

Summary:

A growing consensus now suggests that AI developers are bumping up against a “data ceiling”—the point where high-quality, human-generated web content is largely used up or restricted. Goldman Sachs’ data chief, Neema Raphael, recently warned that AI has “run out of training data,” shifting the frontier to enterprise and synthetic sources. (Business Insider) Meanwhile, Elon Musk publicly claimed the “cumulative sum of human knowledge” has already been exhausted for AI use. (The Guardian)

This scarcity is not just hype. Investigative reporting reveals that companies like Meta have allegedly tapped shadow libraries and pirated texts in bulk to supplement corpora. (Wikipedia) Academic and industry commentary backs up the warning, pointing to phenomena like model collapse—where iterative training on AI-generated content degrades output quality over time. (Cal Alumni Association)

On the legal front, new scrutiny is emerging. The U.S. Copyright Office is signaling limitations on scraping unauthorized works; in Canada, courts are weighing whether AI scraping violates site terms. (American Bar Association) Scholars propose treating website Terms of Service as enforceable contracts: if a bot crawls pages containing a prohibition of training use, the bot operator may be legally bound. (arXiv)

Relevance for Business

- AI differentiation is shifting from scale to source. As the easy public data is depleted, access to proprietary datasets (customer logs, internal communications, domain-specific archives) becomes a strategic moat.

- Cost and risk will rise. Training data licensing, legal exposure, compliance, and privacy considerations will push up the total cost of building and maintaining models.

- Model quality and trust may degrade. Overreliance on synthetic, second-generation, or noisy data can erode reliability, creativity, and factual fidelity—damaging user trust and brand reputation.

- Smaller players face exclusion. Without deep access or budgets to license proprietary data, SMBs using “off-the-shelf” open models may fall behind in capability and customization.

Calls to Action

🔹 Map your data dependence: List which internal or external datasets your AI tools rely on (public web, licensed, proprietary).

🔹 Negotiate or license content rights proactively with publishers, content creators, and platforms.

🔹 Invest in proprietary data infrastructure (logs, user behavior, domain-specific archives) as a strategic asset.

🔹 Use hybrid synthetic + human data pipelines carefully, with guards against overtraining or model collapse.

🔹 Monitor legal and regulatory shifts around scraping, copyright, contract law, and AI training limitations.

🧩 RECURSIVE / SELF-BOOTSTRAPPING TRAINING (MODEL-TO-MODEL DATA GENERATION)

As high-quality human-created data becomes scarce or restricted, AI developers are experimenting with ways for models to generate their own training data — an approach called recursive, self-bootstrapping, or model-to-model training.

How It Works

- A foundation model (e.g., GPT-5, Claude, Gemini) is tasked with creating new examples, such as dialogues, code snippets, or synthetic essays.

- These outputs are then filtered, rated, or re-ranked by other AI systems or human evaluators.

- The best of these synthetic examples become new training data for either the same model (self-distillation) or a smaller/focused model (student-teacher paradigm).

- The process allows continuous improvement without relying on new scraped web data.

Who’s Doing It

- OpenAI uses techniques like reinforcement learning from AI feedback (RLAIF), where AI critics evaluate model outputs.

- Anthropic has discussed “constitutional AI”, where models train on their own policy-generated examples instead of massive raw datasets.

- Google DeepMind runs similar recursive schemes in Gemini and AlphaCode, using model-generated data to supplement limited human examples.

- Research projects like Self-Instruct, Evol-Instruct, and UltraFeedback have published open frameworks for bootstrapping instruction-following data.

Benefits

- Reduces dependence on new human data or costly licensing.

- Allows targeted domain generation (e.g., legal, biotech, programming).

- Provides scalability — the model can expand its own dataset to billions of synthetic examples.

Risks

- Leads to Model Collapse — where training models on AI-generated data reduces diversity, accuracy, and creativity.

- Synthetic data tends to reinforce biases and “smooth out” the quirks that make human communication valuable.

- Over time, this can cause models to hallucinate more confidently, as they recycle their own errors.

- Researchers describe this as an information-entropy feedback loop — each generation becomes less varied and less grounded.

⚠️ In short: Recursive training lets AI “feed itself,” but it risks “feeding on its own tail.” Without continual human grounding, models become echo chambers of synthetic thought.

🔒 WHAT’S THE DATA “BEHIND THE WALL”?

The “data wall” or “behind the wall” concept describes the growing boundary of inaccessible, high-value content that AI developers can no longer use freely for training.

Categories of Data Behind the Wall

- Paywalled Content

- Subscription news (e.g., NYT, WSJ, The Economist).

- Academic research behind publishers like Elsevier or Springer.

- Professional databases (LexisNexis, Bloomberg, JSTOR).

- Copyright-Protected Works

- Books, films, scripts, and music lyrics — increasingly guarded by lawsuits and licensing restrictions.

- Authors’ Guild, Universal Music, and photo agencies have sued over unauthorized scraping.

- Platform-Restricted Data

- Social media (Reddit, X/Twitter, YouTube, Instagram) — now either charging for access or forbidding scraping via

robots.txt.

- APIs that once allowed open access (e.g., Reddit, Stack Overflow) now monetize their datasets.

- Social media (Reddit, X/Twitter, YouTube, Instagram) — now either charging for access or forbidding scraping via

- Private / Sensitive Data

- Corporate emails, medical records, customer chats — locked by privacy regulations (GDPR, CCPA).

- Governments and enterprises keep these archives for internal AI, not public model training.

- Non-Public, Human-Generated Creativity

- Messaging apps (WhatsApp, Discord), forums, and private communities that produce rich human dialogue but aren’t legally or ethically scrapable.

📉 Researchers from the MIT Data Provenance Initiative estimate that 25% of the highest-quality web data has already been blocked or withdrawn from training datasets — a figure rising every quarter.

🧭 RELEVANCE FOR BUSINESS

For SMB executives and data strategists, these developments reshape how to compete in the next AI wave:

- The era of “free internet data” is over. Success will depend on curated, proprietary, or licensed data assets.

- Synthetic data can extend capabilities but must be balanced with authentic, verifiable human input to avoid reliability collapse.

- Businesses that treat data like intellectual property — not exhaustible exhaust — will hold the new competitive edge.

🔹 CALLS TO ACTION

🔹 Inventory your internal data — identify customer interactions, support logs, or research archives that could be structured as training inputs.

🔹 Partner with publishers or data vendors to license niche, domain-specific content.

🔹 Experiment safely with synthetic data, but keep human-verified “anchor sets” to preserve realism.

🔹 Adopt data governance frameworks that classify data sources (public, licensed, synthetic, proprietary).

🔹 Monitor your vendors’ provenance policies — ensure AI tools you use disclose how they source or generate their data.

Summary by ReadAboutAI.com

WHAT AI DEVELOPERS ARE DOING ABOUT DISAPPEARING TRAINING DATA

- Synthetic Data / Augmentation

To compensate for the shrinking pool of human-generated content, many AI developers now generate their own training data using models (e.g. GANs, diffusion models, transformer models). This allows them to mimic distributions, fill gaps, simulate corner cases, or enlarge datasets. (cio.com) - Hybrid Approaches (Mix Real + Synthetic)

Rather than relying wholly on synthetic data (which has risks), developers mix real, high-quality human data with generated data to maintain fidelity, diversity, and grounding, while stretching supply. (Shaip) - Curation, Filtering & Quality Control

To avoid degradation and noise, AI teams filter, clean, and curate incoming data, rejecting low quality or redundant examples. Some systems dynamically assess sample usefulness rather than always ingesting raw outputs. (AI Business) - Mitigating “Model Collapse” / Recursive Degradation

A major concern is that if models keep training on data generated by earlier models, the output distributions may collapse (i.e. lose diversity, become repetitive or degenerate). A Nature paper showed that training on generative-model samples can produce a distribution shift that degrades model performance over time. (Nature) - Acquisitions & Infrastructure Investments

Big players are buying synthetic-data or data-augmentation tool firms. For example, Nvidia acquired Gretel, a synthetic data company, as part of its AI infrastructure push. (WIRED) - Tapping Proprietary / Enterprise Data

Rather than public web content, AI developers are turning to private, domain-specific corpora (customer logs, internal documents, corporate databases, niche archives) that haven’t been freely scraped. This gives unique data sources that others lack. (Business Insider) - Efficiency & Transfer Learning

Some teams focus less on brute-force quantity and more on leveraging transfer learning, parameter-efficient fine-tuning (PEFT), retrieval-augmented models, or architectures that generalize better with less data. This reduces dependence on massive new data.

THE “DEAD INTERNET” THEORY & ITS RELATION TO AI DATA SCARCITY

- Dead Internet Theory (DIT) is a fringe/conspiracy-adjacent idea that much of what we perceive as the public Internet is now bot-generated or algorithmically curated, with human-generated content largely replaced or drowned out. (Wikipedia)

- Some proponents argue that this shift means fewer genuine, creative, original human inputs, so AI systems are increasingly training on AI-generated content — which loops back into itself. (webmakers.expert)

- A recent survey/paper on DIT argues that social media and online spaces are becoming more homogeneous, dominated by non-human content, algorithmic amplification, and engagement-driven curation. (arXiv)

- Critics argue DIT is more speculative than empirical; while bot traffic and algorithmic content are increasing, humans still contribute a substantial share of meaningful content. (Forbes)

- However, a related, more grounded risk is what some call “AI slop” — low-effort, AI-generated content flooding web spaces, prompting concerns about signal-to-noise erosion and training contamination. (VICE)

So in short: while the “Dead Internet Theory” is not broadly endorsed in academic AI circles, its core intuition — that human-generated content is being overshadowed by algorithmic content — echoes real concerns in AI development about data scarcity, contamination, and recursion.

Summary by ReadAboutAI.com

ChatGPT’s New “Agent Mode” Cancels Subscriptions—Sometimes

The Washington Post, Oct. 5, 2025

Intro: Consumer AI agents are finally useful for tedious web tasks—like canceling subscriptions—but they’re far from foolproof and raise trust & security questions.

Summary:

Testing ChatGPT’s agent mode, the columnist successfully canceled over half of a dozen subscriptions (e.g., Netflix, Spotify, Dropbox), yet failed with Amazon Prime, Google One, Costco, and Uber One due to site blocks, tricky flows, or dead ends. The agent can navigate pages by reading screenshots and clicking on your behalf, but it’s slow, not 100% reliable, and requires careful handling of logins and data settings. Experts advise using agents only for reversible, low-stakes actions until authorization, reliability, and memory safeguards improve across the ecosystem.

Relevance for Business:

This is a snapshot of near-term agentic automation: helpful for customer service, admin, and ops chores, yet risky without guardrails. Firms should pilot agents in constrained, auditable workflows and design fallbacks for failures and vendor blocks.

Calls to Action:

🔹 Pilot agent workflows for repetitive, reversible tasks only.

🔹 Enforce least-privilege access; disable model training on sensitive data.

🔹 Add human-in-the-loop checks for account changes or purchases.

🔹 Track site/partner policies that block third-party agents.

Summary created by ReadAboutAI.com

https://www.washingtonpost.com/technology/2025/09/29/chat-gpt-agent-mode-subscriptions/

ChatGPT’s Parental Controls Fail Basic Safety Test

The Washington Post, Oct. 2, 2025

Summary:

In a hands-on test, Washington Post columnist Geoffrey Fowler found that OpenAI’s new ChatGPT parental controlscould be bypassed “in five minutes.” Simply logging out and creating a new account allowed teens to evade supervision entirely. Even when active, the filters failed to consistently block AI-generated images, and notifications of risky conversations reached parents up to 24 hours late. While OpenAI says it built the system in consultation with child-safety experts, critics argue it shifts responsibility to parents instead of embedding safety-by-default design.

Advocacy groups like Common Sense Media and Spark & Stitch Institute praised the step but called it incomplete, warning that AI chatbots can still produce emotionally manipulative or harmful responses. Regulators are taking note: California’s Attorney General has warned OpenAI to improve youth protections as lawmakers debate AB 1064, a bill that would make AI firms legally responsible for harm caused by “companion bots.”

Relevance for Business

This highlights the importance of trust, transparency, and safety in all consumer-facing AI products. SMBs developing or deploying AI interfaces must ensure that compliance and ethical design are embedded early—not treated as add-ons—especially in sectors like education, health, and entertainment.

Calls to Action

🔹 Audit AI products for youth and privacy compliance before public rollout.

🔹 Use “safety-by-design” frameworks to reduce legal and reputational risks.

🔹 Communicate transparently about data collection and parental consent.

🔹 Track AI legislation at the state and federal levels impacting user liability.

Summary created by ReadAboutAI.com

https://www.washingtonpost.com/technology/2025/10/02/chatgpt-parental-controls-teens-openai/

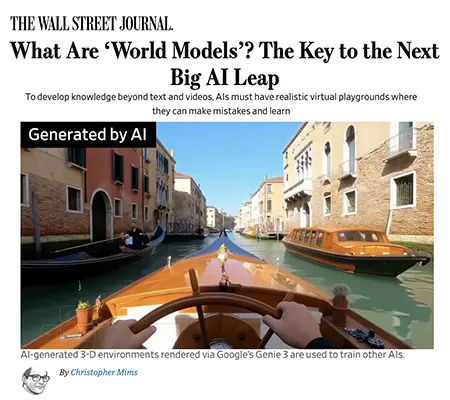

“World Models” and the Next Leap in AI

The Wall Street Journal, Sept. 26, 2025

Intro: To move beyond “book-smart” LLMs, AI researchers are building world models—internal simulations that let systems plan, act, and learn like embodied agents.

Summary:

WSJ highlights growing consensus that world models—accurate internal representations learned in simulated environments—are key to next-gen AI and robotics. Examples include DeepMind’s Genie 3 (text-to-open-world simulators) and logistics/AV stacks trained in photo-realistic sims such as Waabi World; investors and leaders (e.g., Jensen Huang) tie world models to “physical AI” for robots, autos, and embodied agents. While critics warn LLMs still lack rigorous spatial reasoning, simulation-first training is already improving control, planning, and safety across domains.

Relevance for Business:

Expect rapid shifts from chat tools to agentic systems that operate machinery, vehicles, and workflows. Firms that build or adopt simulation pipelines now will be better positioned for robotics, autonomy, and realistic task automation.

Calls to Action:

🔹 Stand up a simulation sandbox for product testing and agent training.

🔹 Prioritize tasks needing spatial reasoning (routing, scheduling, robotics).

🔹 Hire for RL + simulation skills; partner with world-model vendors.

🔹 Create evaluation metrics beyond accuracy (safety, sample efficiency).

Summary created by ReadAboutAI.com

https://www.wsj.com/tech/ai/world-models-ai-evolution-11275913

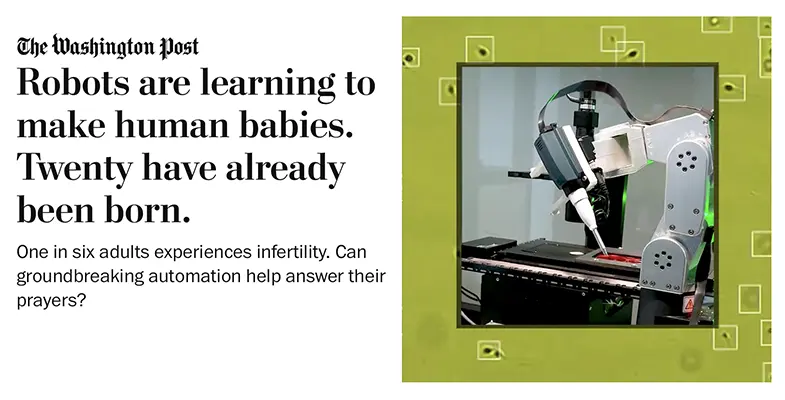

Robotic IVF Trials: “Robots Are Learning to Make Human Babies”

The Washington Post, Oct. 5, 2025

Intro: A new wave of AI-assisted, robotic IVF is moving conception from painstaking manual lab work toward automated precision.

Summary:

Clinical trials in Mexico City using Aura, a robotic IVF system from Conceivable Life Sciences, have already produced at least 20 births, automating up to 205 steps from sperm selection to fertilization. The same computer-vision techniques behind autonomous vehicles help pick viable sperm and standardize delicate ICSI tasks, potentially reducing human variability and fatigue. While the technology is not yet U.S.-approved and large-scale superiority over conventional IVF isn’t proven, early data suggest parity with average clinics and the potential to expand access and lower costs in global “fertility deserts.”

Relevance for Business:

For healthcare operators and med-tech investors, automation + AI in reproductive medicine points to a broader playbook: standardize complex expert workflows, improve throughput, and cut costs—while navigating regulatory and ethical scrutiny.

Calls to Action:

🔹 Pilot AI/robotic workflow tools in high-variability clinical processes.

🔹 Build regulatory readiness (data, audit trails) ahead of U.S. approvals.

🔹 Create ethics & consent policies for patient communications and data use.

🔹 Model unit economics for scale (capex vs. utilization vs. outcomes).

Summary created by ReadAboutAI.com

https://www.washingtonpost.com/technology/2025/10/01/ivf-babies-ai-robots-fertility/

AI Can Design Toxic Proteins: Biosecurity Cracks Exposed

The Washington Post, Oct. 2, 2025

Summary:

A team of scientists from Microsoft and several biosecurity institutions has discovered serious flaws in existing safeguards meant to prevent AI misuse in biological research. Using AI tools, they generated over 70,000 synthetic protein sequences similar to known toxins such as ricin—many of which escaped detection by commercial biosecurity screening systems. This study, published in Science, represents the first known AI-biosecurity “zero day” vulnerability, exposing how AI can design novel toxic proteins that existing tools cannot recognize.

While researchers quickly developed a patch, experts warn that biosecurity oversight is lagging behind AI innovation. Because DNA synthesis companies and smaller labs can easily replicate these designs, voluntary compliance alone is no longer sufficient. Industry leaders, including Microsoft’s Eric Horvitz, call for continuous, software-like patching cycles and better collaboration between governments, labs, and AI developers to prevent malicious exploitation.

Relevance for Business

The incident underscores how AI breakthroughs can expose global supply-chain and security vulnerabilities. For SMBs in biotech, pharma, or AI development, it highlights the urgency of implementing ethics-by-design and risk-assessment protocols to avoid regulatory backlash and maintain public trust.

Calls to Action

🔹 Implement AI risk audits—especially for life sciences and research applications.

🔹 Monitor emerging government regulations on AI safety and synthetic biology.

🔹 Collaborate with certified biosecurity partners to verify model outputs.

🔹 Develop an AI governance policy that includes environmental and bioethical safeguards.

Summary created by ReadAboutAI.com

https://www.washingtonpost.com/science/2025/10/02/ai-toxins-biosecurity-risks/

ChatGPT’s Next Frontier: Shopping and Ads Inside Conversations

Wall Street Journal, Sept. 29, 2025 & iTechManthra, Oct. 1, 2025

Summary:

OpenAI has taken a major step toward commercializing ChatGPT by introducing Instant Checkout, which lets U.S. users buy products from Etsy and Shopify merchants directly inside the chatbot. This move effectively transforms ChatGPT from a conversation tool into an AI shopping assistant. The system relies on a new Agentic Commerce Protocol, allowing merchants to make products “shoppable” within chat responses. Payments are processed through Stripe, and OpenAI collects small transaction fees. Analysts see this as the early foundation for AI-driven agentic commerce, where users could soon delegate purchases entirely to AI assistants. Yet, industry experts warn that merchants may lose direct customer loyalty as buyers shift toward completing purchases inside platforms like ChatGPT instead of brand-owned websites.

In parallel, OpenAI is reportedly staffing up to turn ChatGPT into an advertising platform, embedding “conversational ads” that surface product placements or sponsored recommendations directly in user responses. This would mark a major shift toward AI-integrated marketing, where ads are tailored to user intent rather than keywords or clicks. Advocates argue that if executed transparently, context-aware AI ads could feel more helpful than intrusive, blending sponsored suggestions into organic conversations. Critics, however, warn this blurs lines between trusted AI dialogue and paid promotion, raising concerns about transparency, bias, and user trust. The initiative signals a future where search, shopping, and ads converge inside AI assistants, bypassing traditional browsers and social platforms altogether.

Relevance for Business

For SMB executives, OpenAI’s twin push into e-commerce and advertising integration represents both opportunity and disruption. Businesses could reach customers at the moment of conversational intent, gaining precision targeting within AI ecosystems. However, this also demands new ethical standards for disclosure and strategic adaptation as control over brand-customer relationships shifts toward AI intermediaries. Firms must begin preparing for AI-native marketing ecosystems where discovery, recommendation, and purchase all occur inside chat.

Calls to Action

🔹 Evaluate potential partnerships with AI commerce integrations (e.g., ChatGPT, Shopify, Etsy).

🔹 Develop transparent ad content that aligns with conversational AI ethics and user trust.

🔹 Monitor emerging “agentic commerce” protocols and prepare technical readiness for AI-driven marketplaces.

🔹 Train marketing teams to optimize product visibility in AI chat ecosystems instead of relying solely on SEO or social ads.

Summary created by ReadAboutAI.com

https://www.wsj.com/articles/openai-lets-users-buy-stuff-directly-through-chatgpt-db8a93f9

https://www.itechmanthra.com/blog/openai-staffing-chatgpt-ad-platform/

In a Sea of Tech Talent, Companies Can’t Find the Workers They Want

The Wall Street Journal, Oct. 1, 2025

Summary:

Despite record layoffs and more computer-science graduates than ever, AI hiring remains paradoxically constrained: firms can’t find workers with elite machine learning and generative AI skills. The AI talent race has produced a few ultra-paid “AI prodigies,” while mid-tier developers and displaced software engineers struggle to re-enter the market. Companies like Runway and Pickle advertise salaries nearing $500,000 for engineers, sometimes waiting a year to fill a single role. Yet recruiters admit many applicants produce “workslop”—low-quality, generic AI output.

Employers’ refusal to train new talent exacerbates the gap: most demand prior AI expertise even though the field is still young. Analysts note this “AI 10x Engineer Myth” creates a winner-takes-all labor market, leaving traditional engineers behind as automation reduces low-level coding needs. The result: a tech workforce divided between AI elites and the underemployed majority, with implications for education, equity, and innovation capacity.

Relevance for Business

The AI skills gap reveals that talent development—not just hiring—will determine competitive advantage. SMBs unable to match tech-giant salaries must focus on upskilling and applied AI literacy to close internal capability gaps.

Calls to Action

🔹 Launch internal AI learning programs rather than waiting for “perfect hires.”

🔹 Target transferable skills in analytics, automation, and data ops.

🔹 Foster retention by pairing top AI talent with cross-training teams.

🔹 Reassess job descriptions to avoid unrealistic “unicorn” demands.

Summary created by ReadAboutAI.com

Zoox Opens Free Robotaxi Rides on the Vegas Strip

Reuters, Sept. 10, 2025

Intro: Amazon-owned Zoox is offering free public robotaxi rides in Las Vegas as it seeks approval to charge fares and scale against Waymo and Tesla.

Summary:

Zoox launched a free service around the Las Vegas Strip using its purpose-built, bidirectional robotaxi—with no steering wheel or pedals and facing-seat cabin—collecting user feedback while awaiting state approval for paid operations. The move comes amid industry headwinds (regulatory scrutiny, public protests, capital intensity) that have stalled many AV startups; Zoox is one of the few with deep funding to keep pushing toward commercialization and expansion to SF, Miami, Austin, Atlanta, and LA.

Relevance for Business:

For mobility, hospitality, and retail near pilot zones, robotaxis could reshape foot traffic, service design, and partnerships (e.g., hotel pickups, attraction bundles). The broader takeaway: pilot-to-scale execution now depends as much on regulatory strategy and UX as on perception stacks.

Calls to Action:

🔹 Explore promotions and pickups tied to AV routes.

🔹 Negotiate data-sharing pilots (demand, safety, wait times).

🔹 Prepare liability and insurance updates for AV partnerships.

🔹 Track city/state approvals to time market entries.

Summary by ReadAboutAI.com

https://zoox.com/journal/las-vegas

“WHEN THE INTERNET RUNS DRY: THE DISAPPEARING AI TRAINING DATA”

Summary:

A growing consensus now suggests that AI developers are bumping up against a “data ceiling”—the point where high-quality, human-generated web content is largely used up or restricted. Goldman Sachs’ data chief, Neema Raphael, recently warned that AI has “run out of training data,” shifting the frontier to enterprise and synthetic sources. (Business Insider) Meanwhile, Elon Musk publicly claimed the “cumulative sum of human knowledge” has already been exhausted for AI use. (The Guardian)

This scarcity is not just hype. Investigative reporting reveals that companies like Meta have allegedly tapped shadow libraries and pirated texts in bulk to supplement corpora. (Wikipedia) Academic and industry commentary backs up the warning, pointing to phenomena like model collapse—where iterative training on AI-generated content degrades output quality over time. (Cal Alumni Association)

On the legal front, new scrutiny is emerging. The U.S. Copyright Office is signaling limitations on scraping unauthorized works; in Canada, courts are weighing whether AI scraping violates site terms. (American Bar Association) Scholars propose treating website Terms of Service as enforceable contracts: if a bot crawls pages containing a prohibition of training use, the bot operator may be legally bound. (arXiv)

There is a growing body of recent commentary, research, and reporting on how publicly available human-generated data is thinning out (or becoming more restricted) as a training resource for AI models. Below are a few notable recent articles and analyses, along with key points, and ideas you could use in your ReadAboutAI post.

🔍 NOTABLE RECENT ARTICLES & REPORTS

| Title / Source | Summary & Key Themes |

| “AI has already run out of training data — but there’s more waiting to be unlocked, Goldman’s data chief says”(Business Insider, Oct 2025) | Goldman Sachs’ Chief Data Officer Neema Raphael claims that high-quality web data is effectively exhausted, pushing AI builders to rely more on synthetic data or “untapped enterprise data.” (Business Insider) |

| “The AI revolution is running out of data. What can researchers do?” (Nature, Jan 2025) | This piece reports that AI development is increasingly constrained by lack of fresh, clean web data. It reviews how modelers are experimenting with data augmentation, synthetic data, and selective curation to keep scaling. (Nature) |

| “Apple, Nvidia, Anthropic Used Thousands of Swiped YouTube Videos to Train AI” (Wired / Proof News) | An investigation found that major AI firms used transcripts (subtitles) from 173,536 YouTube videos (across 48,000+ channels) without permission — highlighting how AI builders are scraping ever more aggressively to keep feeding models. (WIRED) |

| “Zuckerberg approved Meta’s use of ‘pirated’ books to train AI models, authors claim” (The Guardian, Jan 2025) | Authors allege that Meta knowingly used pirated (copyright-violating) books from datasets like LibGen in training, raising legal, ethical, and supply risks as demand grows for novel source data. (The Guardian) |

| “Current strategies to address data scarcity in artificial intelligence”(ScienceDirect, Gangwal et al., 2024) | A more technical overview of techniques (transfer learning, synthetic generation, augmentation) that researchers use to stretch limited datasets. (ScienceDirect) |

🧭 KEY TRENDS & THEMES

- Data Exhaustion & Scarcity: Many commentators now argue that the “low-hanging fruit” of freely scrappable, high-quality public content (blogs, news, social media) is largely tapped, forcing next-gen models to tap deeper, more controversial—or proprietary—sources.

- Synthetic Data & Augmentation: To compensate, modelers are generating synthetic content (text, images) and using augmentation techniques to expand training pools, though that carries risks of model collapse (when recursive training on synthetic outputs degrades quality). (Wikipedia)

- Legal & Ethical Backlash: Scraping copyrighted books, videos, and user posts without consent has spurred lawsuits, authors’ complaints, regulatory interest, and demands for licensing. (The Guardian)

- Proprietary & Locked Data: A shift toward private enterprise data (customer logs, internal docs) as “new fuel” for AI models is emerging, but many SMBs aren’t yet aware of that opportunity. (Business Insider)

- Model Collapse & Recursion Risk: Training on AI-generated content (i.e. feeding model outputs back into future training) risks degeneration in diversity, creativity, and fidelity. (Wikipedia)

Outro: October 7 Weekly Roundup

CONCLUSION

As October opens, AI is simultaneously accelerating and introspecting—pushing creative boundaries while confronting the limits of its own foundations. The takeaway for business leaders: progress now depends not just on smarter models, but on responsible data stewardship, ethical experimentation, and the courage to adapt before the next wave arrives.

Summaries created by ReadAboutAI.com

↑ Back to Top