AI Updates December 23, 2025

This Week in AI Developments

2025 was the year frontier AI models became everyday tools. OpenAI shipped GPT-5 and GPT-5.2, pushing big gains in reasoning, long-context handling, and “agentic” tool use, which makes it more realistic to let an AI plan and execute multi-step work instead of just drafting text. Google answered with Gemini 3, a multimodal family whose “Flash” tier gives free and low-cost access to surprisingly strong reasoning and coding, while also powering new AI features across Search, Workspace, and YouTube. Apple continued expanding Apple Intelligence, its privacy-focused AI layer for iPhone, Mac, and Vision Pro, bringing smarter notifications, on-device writing help, and visual understanding to more languages and regions. At the same time, AI media tools matured: OpenAI’s Image 1.5 and Google’s NanoBanana raised the bar for realistic marketing visuals with accurate text; Meta’s SAM Audio made it possible to separate voices, music, and noise from a single recording; and Google expanded SynthID watermarking so its Gemini app can detect whether short videos were generated by Google AI, early infrastructure for deepfake detection and brand safety.

As we close out 2025, this ReadAboutAI.com weekly intelligence brief captures a year that moved from hype to pressure, from scale to scarcity, and from “AI will change everything” to “show me real returns.” Across this week’s curated reporting, Wall Street asked whether an AI bubble is forming even as CEOs signaled that AI budgets will increase in 2026. New forecasts warn of energy constraints, data-center moratorium politics, and debt-driven infrastructure, while enterprise adoption shifts from pilots to agent-based workflows, hybrid compute strategies, and CFO-mandated ROI. AI is no longer a curiosity: it is now a line item, a financing risk, and a competitive filter.

This week also marked a tangible shift in the capabilities arms race. OpenAI launched GPT-5.2 Codex and a new Frontier Science benchmark to reclaim research momentum, while Google’s Gemini 3 Flash delivered a small, fast, cheap alternative that can outperform larger models on some reasoning benchmarks. And in one of the clearest signs of progress, OpenAI released ChatGPT Images / Image 1.5, with major gains in readable text in images, face and brand consistency, and multi-step editing, turning AI images from a novelty into usable business assets. The AI For Humans episode summarized below also spotlighted layer-based editing from Qwen, Apple’s single-image Gaussian splats for Vision Pro, and Sora video remixing, signaling a 2026 wave of non-technical creators producing studio-grade content.

But beneath the capability gains sit infrastructure strain and cultural friction. Demand for GPUs has OpenAI “bursting at the seams,” diverting compute from research into commercial products. Political opposition to data-center growth is intensifying. China is inching closer to high-end chip self-sufficiency. And socially, the backlash against Larian Studios’ limited AI use shows how fast public narratives can turn accusatory, even when AI is only used for moodboards, rough drafts, or ideation. Heading into 2026, SMB executives face a dual mandate: use AI to accelerate output, and communicate transparently to avoid eroding trust. ReadAboutAI.com will continue curating these developments in the new year with clarity, pragmatism, and executive-level focus.

ChatGPT Images 1.5, GPT-5.2 Codex & Gemini 3 Flash – AI For Humans (Dec 19, 2025)

OpenAI’s new ChatGPT Images / Image 1.5 model takes a big step forward in image editing, face consistency, and especially readable text in images, positioning it as a strong answer to Google’s NanoBanana Pro. The hosts walk through hands-on tests—holiday cards, pet photos, complex resumes, and multi-step edits—showing that Image 1.5 is faster, better at character consistency, and can generate full ATS-style resumes and dense text layouts with minimal errors, though realism still sometimes lags NanoBanana’s ultra-photorealistic output. They also highlight emerging tools like Qwen’s “image layering” (auto-extracting objects into editable layers) and Apple’s single-image Gaussian splats that turn photos into navigable 3D scenes for devices like Vision Pro, plus playful AI video remixing via Sora.

Behind the scenes, the episode repeatedly comes back to compute scarcity and the AI infrastructure race. In a new video, Greg Brockman candidly admits OpenAI is “bursting at the seams” for compute, sometimes diverting GPU capacity from research to popular products like image generation. OpenAI responds with the launch of GPT-5.2 Codex (a more agentic coding model) and a Frontier Science benchmark designed to re-assert its research edge. In parallel, Google’s Gemini 3 Flash—a small, fast, cheap multimodal model—delivers performance that rivals or beats bigger models on some reasoning benchmarks, while remaining free to consumers and low-cost via API. This connects to broader tensions over data centers, with rising anti-AI sentiment, political calls for moratoriums on new data centers, and reports of China moving closer to high-end AI chip self-sufficiency, all shaping how fast and where AI capability will grow.

Culturally, the hosts focus on the Larian Studios “AI in games” controversy as a case study in how quickly online communities can polarize around AI. Larian clarified that it uses generative AI only lightly for early ideation, keeping art, writing, and performances fully human, yet headlines and gamer backlash framed it as “replacing artists.” The hosts argue that AI is already embedded in creative tools (from Photoshop’s generative fill to auto-rotoscoping) and that we’re entering a world where AI-assisted workflows will be the norm, even for studios that brand themselves as “human-first.” Meanwhile, YouTube’s Playable Builders with vibe-coded mini-games, Meta’s SAM Audio for text-prompt audio separation, modular “Tron 2” robots, and collaborative Sora video remixes all point to a near future where non-technical creators and small teams can rapidly ship games, videos, and experiences that previously required full studios.

Relevance for Business (SMB Executives & Managers)

For SMBs, tools in the class of the new ChatGPT Images / Image 1.5 and NanoBanana Pro make high-quality marketing and brand assets (ads, product shots, social posts, infographics, resumes, menus) much cheaper and faster to produce. The big change is not just prettier pictures; it’s reliable text rendering, face/brand consistency, and emerging layer-based editing that make AI images usable in real campaigns, not just experiments.

The episode’s compute discussion is a warning light for planning: capacity limits and energy constraints will influence pricing, rate limits, and availability of advanced models in 2026–2027. With OpenAI and Google optimizing different trade-offs (OpenAI leaning into premium, deeply integrated agents; Google into fast, cheap, ubiquitous models like Gemini 3 Flash), SMBs will need a multi-vendor strategy and should expect rapid changes in model lineups.

Finally, the Larian discourse and broader anti-AI sentiment show that introducing AI into creative, customer-facing, or employee-sensitive workflows is not just a technical decision—it’s a communications and trust challenge. Employees, customers, and fans may react strongly if they feel human work is being quietly replaced, even when AI is only used for moodboards or rough drafts. SMB leaders will need transparent policies, clear value statements, and guardrails around AI use, especially in creative, HR, and customer communications.

Calls to Action (For Busy SMB Leaders)

🔹 Train managers on AI-related sentiment: use the Larian example to discuss how your customers and staff might react to AI adoption, and design opt-in, human-in-the-loop workflows that emphasize augmentation, not replacement.

🔹 Run a focused experiment with ChatGPT Images / Image 1.5: pick one use case (e.g., holiday campaign graphics, product one-pagers, or event flyers) and compare time, cost, and quality vs. your current design workflow. Capture lessons for a 2026 “AI-assisted design” playbook.

🔹 Benchmark Google Gemini 3 Flash vs your current model for one or two internal tasks (summarizing documents, drafting emails, light coding) to see if a cheaper / faster tier can offload routine work while reserving premium models for complex jobs.

🔹 Pilot “agentic coding” with GPT-5.2 Codex (or equivalent) on a low-risk internal tool—such as a simple dashboard, form, or report generator—to understand how far AI software agents can go in your environment and where you still need human developers.

🔹 Update your AI governance and comms plan: explicitly document where AI is allowed in creative workflows (e.g., ideation, thumbnails, moodboards) and where human control is required (final copy, final illustrations, HR decisions). Prepare a short, plain-language explanation for employees and customers.

🔹 Monitor AI infrastructure risks (data-center moratoriums, chip supply, vendor pricing changes) as part of your IT and vendor-management reviews, so you’re not surprised by sudden rate limits or cost hikes in 2026.

🔹 Explore new media tools like Meta SAM Audio and AI video remixing for content repurposing—e.g., clean noisy conference recordings, isolate speakers, or turn one keynote into multiple clips and vertical videos for social channels.
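The Gemini 3 Flash benchmarking call to action above can start as a small side-by-side script. The sketch below is vendor-neutral and assumes you wrap each provider's API in your own function that takes a prompt and returns text; `call_model_a`, `call_model_b`, the per-character prices, and the keyword-based pass/fail check are hypothetical placeholders, not any vendor's real SDK, pricing, or evaluation method.

```python
import time
from dataclasses import dataclass
from typing import Callable

# Each task is (prompt, keywords that a usable answer must contain).
Task = tuple[str, list[str]]


@dataclass
class Result:
    model: str
    avg_latency_s: float
    est_cost_usd: float
    pass_rate: float


def benchmark(name: str, call: Callable[[str], str], tasks: list[Task],
              price_per_1k_chars: float) -> Result:
    """Run every task through `call`, recording latency, a rough cost, and pass/fail."""
    latencies: list[float] = []
    cost = 0.0
    passed = 0
    for prompt, keywords in tasks:
        start = time.perf_counter()
        output = call(prompt)
        latencies.append(time.perf_counter() - start)
        # Rough cost proxy: characters in + out at a hypothetical per-1,000-character price.
        cost += (len(prompt) + len(output)) / 1000 * price_per_1k_chars
        # Crude quality check: every required keyword appears (case-insensitive).
        if all(k.lower() in output.lower() for k in keywords):
            passed += 1
    return Result(name, sum(latencies) / len(latencies), cost, passed / len(tasks))


# Hypothetical stand-ins; replace the bodies with real API calls.
def call_model_a(prompt: str) -> str:
    return "Summary: " + prompt[:20]  # cheap/fast model that truncates its answer


def call_model_b(prompt: str) -> str:
    return "Detailed summary with action items: " + prompt  # premium model


TASKS: list[Task] = [
    ("Summarize: Q3 revenue rose; hiring paused.", ["summary"]),
    ("Draft an email confirming Friday's meeting.", ["meeting"]),
]

results = [
    benchmark("model_a_fast", call_model_a, TASKS, price_per_1k_chars=0.01),
    benchmark("model_b_premium", call_model_b, TASKS, price_per_1k_chars=0.05),
]
for r in results:
    print(f"{r.model}: pass={r.pass_rate:.0%} "
          f"latency={r.avg_latency_s * 1000:.3f} ms cost=${r.est_cost_usd:.4f}")
```

With real models, swap the keyword check for a rubric or human review; the point is to compare a cheaper tier against your current model on your own tasks before shifting routine work to it.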

Summary by ReadAboutAI.com

https://www.youtube.com/watch?v=QHtSj9qR6do: December 23, 2025

TECH TRENDS 2026: AI COMES OF AGE — WSJ / DELOITTE (DEC. 10, 2025)

Deloitte positions 2026 as a year of scaling, not experimentation. Boards now see AI as a mandate to rebuild operating models, reskill workforces, upgrade compute infrastructure, and shift toward agentic workflows.

The report highlights five enterprise forces:

- AI goes physical — industrial robots, drones, autonomous systems

- Agentic reality check — multi-agent orchestration replacing siloed task automation

- Compute economics shift — cloud becomes cost-prohibitive at scale; hybrid GPU data centers and edge adoption surge

- The great rebuild — AI-native org design, new roles (agent supervisors, edge engineers, prompt architects)

- Cyber paradox — AI introduces systemic security risk and adversarial attack surfaces
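The “compute economics shift” bullet comes down to a break-even calculation: cloud GPUs cost the same per hour no matter how busy you are, while owned hardware carries a large fixed cost and a small marginal one. A minimal sketch with purely illustrative prices (a $3.00 cloud GPU-hour, a $250,000 eight-GPU server amortized over 36 months, $2,000 per month in fixed operating cost, $0.40 per GPU-hour for power); none of these figures come from Deloitte.

```python
import math


def monthly_cloud_cost(gpu_hours: float, cloud_rate: float) -> float:
    """Pay-as-you-go: every GPU-hour costs the same."""
    return gpu_hours * cloud_rate


def monthly_onprem_cost(gpu_hours: float, capex: float, months: int,
                        fixed_opex: float, power_rate: float) -> float:
    """Owned hardware: amortized purchase + fixed opex + per-hour power."""
    return capex / months + fixed_opex + gpu_hours * power_rate


def breakeven_gpu_hours(cloud_rate: float, capex: float, months: int,
                        fixed_opex: float, power_rate: float) -> float:
    """Solve cloud_rate*h = capex/months + fixed_opex + power_rate*h for h."""
    if cloud_rate <= power_rate:
        return math.inf  # on-prem never catches up if cloud is cheaper per hour
    return (capex / months + fixed_opex) / (cloud_rate - power_rate)


# Illustrative assumptions only.
CLOUD_RATE, CAPEX, MONTHS, FIXED_OPEX, POWER_RATE = 3.00, 250_000, 36, 2_000, 0.40
GPUS, HOURS_PER_MONTH = 8, 730

h = breakeven_gpu_hours(CLOUD_RATE, CAPEX, MONTHS, FIXED_OPEX, POWER_RATE)
utilization = h / (GPUS * HOURS_PER_MONTH)
print(f"Break-even: {h:,.0f} GPU-hours/month ({utilization:.0%} utilization)")
```

Under these assumptions, owning hardware only wins above roughly 3,440 GPU-hours a month, about 59% sustained utilization of the eight GPUs; spiky or exploratory workloads stay cheaper in the cloud, which is consistent with a hybrid rather than all-or-nothing strategy.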

Adjacent signals to track: synthetic data, neuromorphic chips, wearables, privacy threats from agents, and generative-engine optimization (GEO).

Relevance for Business

Executives must shift AI from pilots to production, from cloud-only to hybrid, and from workforce fear to agent-human collaboration. Competitive advantage now comes from architecture—not experiments.

Calls to Action

🔹 Build hybrid compute strategies (cloud + on-prem + edge)

🔹 Create agent-workforce management frameworks

🔹 Hire for AI-native job roles

🔹 Embed AI cybersecurity controls from day one

🔹 Track synthetic-data and inference-economics adoption

Summary by ReadAboutAI.com

https://deloitte.wsj.com/cio/tech-trends-2026-ai-comes-of-age-24d63738: December 23, 2025

The Great AI Hype Correction of 2025 — MIT Technology Review (Dec 15, 2025)

AI’s mass-market enchantment cycle is cooling after three years of aggressive promises about AGI, workforce replacement, and economic transformation. Following the high-expectation launch of GPT-5, which failed to deliver a decisive leap forward, 2025 became a pivot year from hype to sober assessment. AI adoption in enterprises is slowing; many pilots remain stalled, and surveys show that individual tool usage is high while operational value at enterprise scale remains low.

The article argues that LLMs are impressive but not magic—they mimic intelligence yet fail to generalize like humans. Research momentum continues, but funding and infrastructure spending may be entering dot-com-style risk territory. Still, AI-driven companies like Synthesia are scaling to thousands of enterprise customers, reinforcing that niche applications remain viable.

Rather than collapse, MIT frames 2025 as a “hype correction”—a reset that forces business, investors, and researchers to clarify use-cases, costs, environmental burdens, and realistic expectations. The next wave is positioned not as AGI, but as “the return of research”—incremental gains, specialized agents, and problem-specific deployments.

Relevance for Business

This piece encourages SMB leaders to avoid panic but retire magical thinking. The winners now are:

- Teams integrating AI into workflows—not replacing workers

- Companies investing in evidence-based ROI

- Organizations that treat AI as incremental efficiency, not a silver bullet

Executives should prepare for slower—but more durable—AI economics.

Calls to Action

🔹 Audit all AI pilots—advance beyond “experiments stuck in pilot mode.”

🔹 Focus on workflow augmentation, not headcount replacement.

🔹 Prioritize ROI-measurable deployments (customer service, analytics, automation).

🔹 Shift to hybrid AI procurement—pair commercial LLMs with specialized tools.

🔹 Expect compute/energy cost scrutiny—optimize before scaling.

Summary by ReadAboutAI.com

https://www.technologyreview.com/2025/12/15/1129174/the-great-ai-hype-correction-of-2025/: December 23, 2025

“What Is AI? Why the Debate Matters More Than Ever”

MIT Technology Review — Will Douglas Heaven (July 10, 2024)

Artificial intelligence has become the most influential—and least agreed-upon—technology of our time. In this expansive MIT Technology Review analysis, Will Douglas Heaven traces how AI has evolved from a technical research field into a cultural, political, and economic force, even as experts remain deeply divided on what AI actually is. The rise of large language models like ChatGPT has made AI feel concrete and humanlike, but researchers warn that this familiarity masks profound uncertainty about how these systems work, what they understand, and how much trust they deserve.

At the center of the debate is whether modern AI systems are merely advanced pattern-matching machines or whether they exhibit early signs of reasoning and general intelligence. Some researchers point to surprising behaviors—creative problem solving, code generation, and mathematical reasoning—as evidence of a potential paradigm shift. Others counter that these models remain “stochastic parrots,” producing convincing language without true understanding, grounding, or intent. The disagreement is not academic: AI capabilities are already shaping work, education, finance, healthcare, and governance, even as their limitations remain poorly understood.

The article argues that AI’s power lies not just in technology, but in how it is framed and sold. Since the term “artificial intelligence” was coined in the 1950s, hype, metaphor, and science fiction have repeatedly inflated expectations—sometimes driving funding and progress, other times leading to disappointment and backlash. Today’s AI boom reflects the same pattern: extraordinary claims, contested evidence, and enormous stakes. Heaven concludes that society must learn to separate capability from myth, agree on clearer definitions, and confront AI’s impacts without assuming either inevitable utopia or catastrophe.

Relevance for Business

For SMB executives and managers, this article reframes AI as more than a tool upgrade—it is a strategic uncertainty. Conflicting expert views mean that vendor claims, roadmaps, and ROI projections should be treated cautiously, especially when wrapped in AGI or “human-level intelligence” narratives. Understanding the limits of today’s AI helps leaders avoid over-automation, misplaced trust, and reputational risk, while still capturing real productivity gains.

Just as importantly, the piece highlights that language matters: how AI is described influences regulation, customer trust, employee adoption, and long-term investment decisions. Businesses that adopt AI thoughtfully—grounded in reality rather than hype—will be better positioned as standards, laws, and expectations continue to evolve.

Calls to Action

🔹 Pressure-test AI vendor claims, especially around “intelligence,” reasoning, or autonomy

🔹 Adopt AI incrementally, validating outputs before embedding them into critical workflows

🔹 Train leaders and teams to distinguish capability from marketing language

🔹 Plan governance and oversight for AI systems that appear humanlike but lack accountability

🔹 Monitor regulation and public sentiment, as definitions of AI shape policy and trust

Summary by ReadAboutAI.com

https://www.technologyreview.com/2024/07/10/1094475/what-is-artificial-intelligence-ai-definitive-guide/: December 23, 2025

USING AI TO AUGMENT, NOT JUST AUTOMATE — WSJ / DELOITTE (NOV. 14, 2025)

This piece argues the next workforce shift hinges on augmentation—not replacement. AI agents should free employees from repetitive tasks, opening time for creativity, problem-solving, and relationship-driven work.

Salesforce executives advocate “the four Rs”: reskill, redeploy, redesign work, rebalance responsibilities. AI should be evaluated through persona analysis, task decomposition, and outcome measurement—not headcount elimination.

Employees increasingly expect AI as a workplace benefit, and candidates now screen employers based on AI tooling availability and automation readiness. Continuous upskilling is essential, with AI-driven talent marketplaces matching skills to internal opportunities.

Relevance for Business

Executives face a shift from staff reduction to talent value expansion. Augmentation unlocks retention, culture, and productivity gains—especially in skilled operational roles.

Calls to Action

🔹 Redesign jobs around high-value skills and agent collaboration

🔹 Build AI learning journeys and internal talent marketplaces

🔹 Treat AI tools as employee-experience benefits

🔹 Encourage continuous experimentation and refinement

Summary by ReadAboutAI.com

https://deloitte.wsj.com/cio/using-ai-to-augment-not-just-automate-3a0526db: December 23, 2025

THE TRUTH PHYSICS CAN NO LONGER IGNORE (THE ATLANTIC, DEC. 15, 2025)

This essay argues that AI breakthroughs are forcing physics to confront living systems as fundamental scientific objects, instead of reducible “particle machines.”

Since the 2024 Nobel Prize in Physics honored AI-related research, physicists have been reevaluating “reductionism,” the belief that everything reduces to particles. Emerging fields like complexity science show that life is self-organizing, autonomous, and information-driven, and that it cannot be predicted from atomic behavior alone.

Life is presented as a dynamic pattern, constantly rebuilding itself, using information to pursue goals, unlike any inert system. The article argues that understanding life’s emergent behavior may become essential to designing general intelligence and assessing claims of AI consciousness.

Relevance for Business

Executives evaluating AGI narratives, hype cycles, or autonomous decision-making should ground expectations in biological complexity—not marketing. The future of AI governance may require life-science-based evaluation frameworks.

Calls to Action

🔹 Scrutinize AGI claims—distinguish biological intelligence from computational scaling

🔹 Include life-science experts in AI risk governance

🔹 Treat autonomy claims cautiously in vendor assessments

🔹 Watch for new regulatory definitions of “intelligent agents”

Summary by ReadAboutAI.com

https://www.theatlantic.com/science/2025/12/physics-life-reductionism-complexity/685257/: December 23, 2025

FRESH CONCERNS ABOUT AI SPENDING RATTLE WALL STREET (WSJ, DEC. 12, 2025)

AI enthusiasm snapped as investors questioned how long it will take for multi-hundred-billion-dollar AI spending to produce meaningful profits. Broadcom fell 11%—its worst day since January—despite record results, because investors focused on margin dilution, slower-than-expected OpenAI contracts, and uncertainty beyond 2027.

Oracle dropped 13% for the week after reporting heavy capital expenditures, sparking fears that hyperscalers are betting ahead of revenue. Chip names including Nvidia and AMD also fell.

Bond markets showed parallel anxiety—the yield spread on Oracle’s 2055 debt widened, and credit markets accelerated selling of AI-linked debt instruments. Wall Street now believes delays in data-center power, construction, and regulatory approvals may push out hundreds of billions in scheduled CapEx.

Relevance for Business

Cloud-AI pricing and availability could be more volatile than expected. Capital delays mean hardware scarcity will persist, and vendors will push higher pass-through costs.

Calls to Action

🔹 Expect multi-quarter delays in new AI capabilities

🔹 Negotiate compute pricing now—before scarcity worsens

🔹 Don’t model ROI on 2025 hype curves—extend to 2027+

🔹 Evaluate contract clauses for service-delivery delays

Summary by ReadAboutAI.com

https://www.wsj.com/finance/stocks/ai-fed-interest-rate-stock-market-749e18e2: December 23, 2025

SOME HISTORICAL CONTEXT FOR THE HUGE AI SPENDING BOOM — WASHINGTON POST OPINION (DEC 17, 2025)

Summary (Executive Edition)

George F. Will places today’s AI-capex frenzy alongside the 1920s stock-buying mania and the 1800s railroad expansion. AI investment may have accounted for half of GDP growth in early 2025, a concentration comparable to railroads once representing 60% of the stock market.

He notes that overbuilding ahead of demand creates booms and busts — but also national infrastructure. Just as railroads overbuilt, collapsed, then unified markets, AI data-center overbuild may create massive long-term productivity gains even after short-term destruction.

One caution: U.S. households are unusually exposed to stocks by historical standards. AI-related assets have driven nearly half of household wealth growth, meaning a correction could hurt consumer confidence.

Relevance for Business

- AI-infrastructure spending ≠ proof of ROI — but this looks like a platform shift.

- Leaders should prepare for volatility and financing pressure.

- SMBs benefit long-term from cheaper compute, automation, and logistics infrastructure.

Calls to Action

🔹 Model recession scenarios in case AI valuations correct.

🔹 Avoid single-vendor infrastructure dependency.

🔹 Use downturns to acquire discounted AI capacity.

Summary by ReadAboutAI.com

https://www.washingtonpost.com/opinions/2025/12/17/artificial-intelligence-spending-boom-ai/: December 23, 2025

“We Asked Five AIs to Edit Photos, Create Art, and Show Emotion — The Results Reveal How Far Image AI Has Come”

EXECUTIVE SUMMARY (NON-SPOILER VERSION)

The Washington Post — Review by Geoffrey A. Fowler & Kevin Schaul (Dec. 16, 2025)

AI image generation has entered a new phase of realism—and risk. In an interactive evaluation, The Washington Post tested five leading AI image generators across five demanding tasks: editing a recognizable face, creating original ‘art,’ removing a person from a photo, conveying authentic emotion, and accurately rendering human hands. Together, the tests reveal how quickly image models are improving—and where critical weaknesses and ethical concerns remain.

Across the experiments, judges assessed visual accuracy, consistency, emotional credibility, and technical precision. Some tools showed dramatic improvements in facial preservation, lighting realism, and object interaction, while others struggled with anatomy, artifacts, and contextual errors. The tests highlight that AI image quality is no longer limited by obvious flaws—but by subtle details that can mislead even trained observers.

At the same time, the report surfaces unresolved issues around copyright, attribution, bias, and misuse. One model generated a fake photo credit referencing a real photographer, raising questions about training data transparency and safeguards. As realism increases, so does the risk of deepfakes, misinformation, and brand misuse, making AI image tools as much a governance challenge as a creative breakthrough.

RELEVANCE FOR BUSINESS

For SMB executives and managers, the takeaway is not which tool performed best—but that AI image generators are now powerful enough to influence trust, reputation, and decision-making. These tools can accelerate marketing, product visualization, and content creation, while simultaneously increasing exposure to legal, ethical, and reputational risk.

The report makes clear that choosing an image generator is no longer a purely creative decision—it is a strategic business choice involving risk tolerance, commercial safety, and disclosure practices.

CALLS TO ACTION

🔹 Evaluate AI image tools by use case, separating internal experimentation from public-facing content

🔹 Update brand and marketing guidelines to address synthetic images and attribution standards

🔹 Educate teams on deepfake risks, especially for social media and advertising

🔹 Ask vendors about safeguards, including watermarking, training data sources, and provenance

🔹 Monitor regulatory and legal developments around AI-generated imagery and copyright

Summary by ReadAboutAI.com

https://www.washingtonpost.com/technology/interactive/2025/best-ai-image-generator/: December 23, 2025

THE VIEW FROM INSIDE THE AI BUBBLE — THE ATLANTIC (DEC. 14, 2025)

This long-form Atlantic dispatch from Alex Reisner at the NeurIPS AI-research conference in San Diego describes a surreal scene of AI hype, safety panic, lavish recruiting parties, and economic disconnects. The article opens with Max Tegmark briefing journalists about “saving the world from AGI” while promoting a new AI-safety index where no company scored above a C+.

The author notes the gap between AGI fear narratives and scientific reality. Even Sam Altman calls AGI a “weakly defined term,” yet warnings of “human extinction” dominate discourse. Meanwhile, the conference’s actual output tells a different story: of 5,630 posters, only two mentioned AGI in their titles.

The real action at NeurIPS was in talent parties, equity bidding wars, and secret recruitment lounges, with grad students claiming $1M–$1.5M starting packages and Cohere hosting a party aboard the USS Midway aircraft carrier, complete with oysters, king prawns, ceviche, and VIP-style décor.

The core critique: AI leaders talk about sci-fi extinction while ignoring real harms like chatbot addiction, misinformation, and labor extraction. Zeynep Tufekci warns that obsession with superintelligence distracts researchers from truth-erosion, mental-health damage, creative-industry pillaging, and political manipulation.

The piece ends bluntly: who benefits from AGI catastrophe talk? Young technologists chasing equity windfalls and elite labs that sustain massive losses (OpenAI expects losses until 2030) while the economy and culture subsidize their speculative bets.

Relevance for Business

SMB executives should ignore sci-fi hype and focus on productivity, safety, and cost control.

Public AI narratives are dominated by fear branding, not operational ROI.

Workforce markets are distorted — top-end AI salaries are irrational and unsustainable.

Summary by ReadAboutAI.com

https://www.theatlantic.com/technology/2025/12/neurips-ai-bubble-agi/685250/: December 23, 2025

GOOGLE FACES NEW ANTITRUST PROBE IN EUROPE OVER AI TRAINING CONTENT — FAST COMPANY (DEC. 9, 2025)

The EU has launched a sweeping antitrust investigation into Google’s use of web content and YouTube videos to fuel AI Overviews, AI Mode, and its generative-AI models — all without compensating rights-holders or offering an opt-out.

Regulators suspect Google gave itself a privileged advantage by training models on publisher content and user-uploaded videos, then pushing that material into search products that divert traffic away from the original creators. Officials specifically cited AI Overviews (automatic summaries above search results) and AI Mode (chatbot-style answers).

The probe will examine whether Google:

- Imposed unfair terms and conditions on publishers and creators

- Used YouTube uploads to train AI while blocking rival model developers

- Built AI search tools that bypass publisher monetization

The case is being reviewed under the EU’s traditional competition law (not the Digital Markets Act), meaning penalties could reach 10% of global annual revenue.

Google responded that complaints could “stifle innovation in a competitive market,” while European Commission Executive Vice-President Teresa Ribera said innovation cannot erase fairness, compensation, or democratic principles.

Relevance for Business

- Expect AI-copyright liability to expand rapidly.

- AI search is shifting power from publishers to platforms — meaning SMBs may lose organic visibility.

- Vendor contracts must address data-usage rights, opt-outs, and indemnification.

Calls to Action

🔹 Demand indemnity from AI-search vendors

🔹 Monitor organic traffic loss from AI Overviews

🔹 Track EU enforcement and watch the FTC, since U.S. regulators may follow

🔹 Secure clear content licensing terms before feeding data into models
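For the “monitor organic traffic loss” item, a simple starting point is to compare average daily organic clicks before and after a date you care about, using an export from whatever analytics or search-console tool you already use. A minimal sketch; the click counts and cutoff date below are made-up sample data, and a drop measured this way shows change, not cause, since seasonality and algorithm updates also move traffic.

```python
from datetime import date
from statistics import mean


def pct_change_after(daily_clicks: dict[date, int], cutoff: date) -> float:
    """Percent change in mean daily clicks on/after `cutoff` vs. before it."""
    before = [clicks for day, clicks in daily_clicks.items() if day < cutoff]
    after = [clicks for day, clicks in daily_clicks.items() if day >= cutoff]
    if not before or not after:
        raise ValueError("need at least one day on each side of the cutoff")
    baseline = mean(before)
    return (mean(after) - baseline) * 100 / baseline


# Hypothetical sample export: date -> organic clicks.
sample = {
    date(2025, 12, 1): 1000, date(2025, 12, 2): 1100, date(2025, 12, 3): 900,
    date(2025, 12, 4): 800, date(2025, 12, 5): 850, date(2025, 12, 6): 750,
}
change = pct_change_after(sample, cutoff=date(2025, 12, 4))
print(f"Organic clicks changed {change:+.1f}% after the cutoff")
```

In practice, compare windows of equal length that cover the same weekdays, and track the metric over time rather than from a single snapshot.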

Summary by ReadAboutAI.com

https://www.fastcompany.com/91457338/google-european-union-antitrust-ai-overviews-youtube-content: December 23, 2025

Mozilla’s Delicate Dance with AI — Fast Company (Dec 8, 2025)

✅ EXECUTIVE SUMMARY

Mozilla avoided losing its Google default-search revenue, which funds nearly $500M of its $653M in revenue, but still faces declining market share and platform irrelevance. Rather than pushing AI aggressively into Firefox like Microsoft or Google, Mozilla is positioning itself as the privacy-preserving alternative.

Firefox introduces optional—not default—AI assistants, letting users choose among ChatGPT, Gemini, Copilot, Claude, and Mistral’s Le Chat, while enabling on-device processing for translation and tabs. Mozilla refuses to “jam AI down their throats”—reflecting that 12%–15% of users resist AI entirely.

Its challenge remains market share (<4% globally) and the need to grow beyond search royalties. Mozilla is attempting new revenue streams—VPN integration, privacy-preserving ad-tech (Anonym), and EU DMA-boosted mobile adoption (up 149% in France).

Relevance for Business

For SMB leaders, Mozilla highlights an emerging consumer tension:

privacy-first digital products vs. forced AI integration.

This is a strategy case study in:

- Governed AI rollout

- Optionality vs. coercion

- Competing with platform monopolies

Calls to Action

🔹 Adopt opt-in AI policies for customer interfaces.

🔹 Market privacy as a differentiator, not a constraint.

🔹 Track EU DMA shifts—browser choice markets may forecast app shifts.

🔹 Consider multi-AI interoperability rather than single-model lock-in.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91455128/mozilla-firefox-browser-ai: December 23, 2025

SUPERSIZED DATA CENTERS WILL TRANSFORM AMERICA (WASHINGTON POST, DEC. 15, 2025)

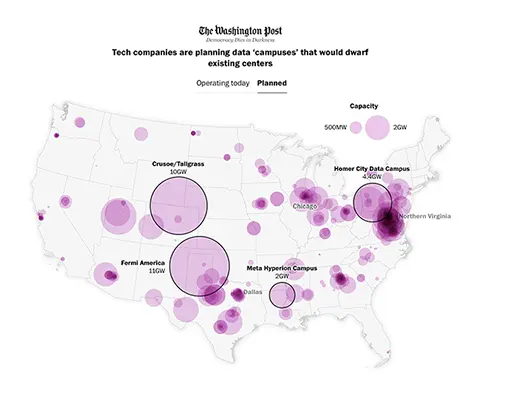

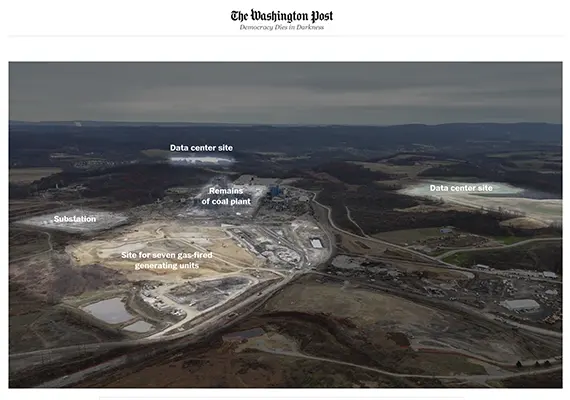

A massive wave of AI-driven hyperscale data campuses is reshaping U.S. communities. Former coal plants such as Homer City in Pennsylvania are being reborn as data campuses; Homer City is planned at 4.4 GW, with a 30-acre onsite gas-generation station.

Charts in the article show:

- An average operating data center draws 45 MW (about the demand of a city of 56,000 residents)

- Planned data centers average ~430 MW

- Homer City will draw as much power as Philadelphia’s households

By 2030, AI data centers may consume >10% of U.S. electricity, driven by U.S.–China competition. Forecasts predict 60–150% growth in energy use, reaching 430 TWh, enough to power 16 Chicagos.
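The figures in this article mix power (MW and GW, an instantaneous draw) with energy (TWh, accumulated over a year). Converting between them with 8,760 hours per year is a quick way to sanity-check such claims. A minimal sketch using only the numbers quoted above, and assuming round-the-clock operation at the stated draw, which real facilities only approximate:

```python
HOURS_PER_YEAR = 8_760


def annual_twh(power_mw: float) -> float:
    """Energy used in a year at a constant draw: MW * h = MWh, then MWh -> TWh."""
    return power_mw * HOURS_PER_YEAR / 1_000_000


def average_gw(twh_per_year: float) -> float:
    """Average continuous draw implied by an annual total: TWh -> GWh, / hours -> GW."""
    return twh_per_year * 1_000 / HOURS_PER_YEAR


kw_per_resident = 45_000 / 56_000                 # 45 MW over 56,000 residents, in kW
homer_city_twh = annual_twh(4_400)                # 4.4 GW campus at full load all year
avg_2030_gw = average_gw(430)                     # 430 TWh forecast as a continuous draw
twh_per_chicago = 430 / 16                        # implied size of one "Chicago"
planned_equivalents = avg_2030_gw * 1_000 / 430   # number of ~430 MW campuses that equals

print(f"{kw_per_resident:.2f} kW per resident")
print(f"Homer City at full load: {homer_city_twh:.1f} TWh/yr")
print(f"430 TWh/yr is about {avg_2030_gw:.0f} GW continuous, "
      f"or {planned_equivalents:.0f} planned-size campuses running nonstop")
print(f"One 'Chicago' is about {twh_per_chicago:.1f} TWh/yr")
```

On these assumptions, 430 TWh a year equals roughly 49 GW of continuous draw, or a little over a hundred campuses of today's planned average size running nonstop, and Homer City alone would use about 38.5 TWh a year if it drew its full 4.4 GW continuously. Real utilization is lower, so treat these as upper bounds.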

Because power demand is surging beyond the grid’s capacity, operators are returning to natural gas and even coal, undermining climate pledges. Grid monitors warn of unplanned outages and blocked interconnections. Meta is spending $30B on a Louisiana campus the size of Manhattan, and Wyoming projects include 10 GW of onsite generation.

Relevance for Business

AI compute is likely to push energy costs up nationwide, increasing cloud pricing. Sustainability claims may collide with natural-gas dependency. Regions with abundant, accessible power will shape which AI players win.

Calls to Action

🔹 Expect higher cloud energy-linked surcharges

🔹 Audit AI workloads for power efficiency

🔹 Add sustainability disclosures into AI vendor assessment

🔹 Review state-level energy incentives—location matters

Summary by ReadAboutAI.com

https://www.washingtonpost.com/climate-environment/interactive/2025/giant-data-centers-energy-pollution/: December 23, 2025

ORACLE IS THE CANARY IN THE AI DEBT MINE (MARKETWATCH, DEC. 9, 2025)

Oracle briefly rode a $300B OpenAI partnership toward a $1T market cap, but its stock is now down 36% from its peak, and its credit default swaps (CDS) are spiking, signaling fears about Big Tech’s debt-fueled AI binge.

Oracle issued $18B in bonds, some maturing as late as 2065; it holds a 462% debt-to-equity ratio and generates negative $6B in free cash flow. Analysts warn that Oracle may reach a tipping point where lenders refuse more capital, creating a vicious financing spiral.

The article warns that Oracle is effectively acting as OpenAI’s off-balance-sheet financier, raising concern that $450–500B in remaining performance obligations (RPO) may evaporate if customers renegotiate. CDS spreads recently hit 128 basis points, the highest since 2009. Analysts call Oracle a proxy for systemic AI credit risk, as hyperscalers may borrow $1.5T of the $2.9T needed for AI CapEx.

Relevance for Business

AI infrastructure is entering a debt-driven maturity phase, which could trigger credit tightening, price shocks, and defaults. SMBs relying on vendors like Oracle must evaluate those vendors’ financial stability as seriously as their security posture.

Calls to Action

🔹 Assess cloud-AI partners for debt exposure

🔹 Expect financing models (private credit, SPVs) to obscure risk

🔹 Plan contingencies in case AI partners cannot deliver capacity

🔹 Ask hard questions about the realism of vendors’ remaining performance obligations (RPO) in contract negotiations

Summary by ReadAboutAI.com

https://www.wsj.com/wsjplus/dashboard/articles/oracle-is-the-canary-in-the-coal-mine-for-big-techs-debt-fueled-ai-spending-spree-cdde5eac: December 23, 2025

“A Robot Smaller Than a Grain of Salt Can ‘Sense, Think and Act’”

The Washington Post — Mark Johnson (Dec. 12, 2025)

Researchers have crossed a long-standing threshold in microrobotics. A team from the University of Pennsylvania and the University of Michigan has built a fully autonomous robot less than one millimeter in size—smaller than a grain of salt—that can sense its environment, make basic decisions, and move on its own. The device integrates a tiny computer, sensors, solar cells, and a propulsion system, solving a technical challenge that has eluded scientists for more than 40 years.

While the robot is not yet ready for medical use, experts describe it as the vanguard of a new class of machines with potential applications ranging from precision drug delivery to non-invasive diagnostics and cell-level monitoring. The robot operates using light-powered solar cells, swims through liquid using electrically induced flows, and communicates wirelessly with its operators. Researchers emphasize that future milestones include safe materials, operation in diverse environments, and eventually swarms of robots that can coordinate with one another.

RELEVANCE FOR BUSINESS

For SMB executives and managers, this breakthrough is less about immediate deployment and more about where robotics and AI-enabled hardware are heading. As computation, sensing, and autonomy shrink in size and cost, robotics will increasingly move from factories into healthcare, logistics, inspection, and diagnostics—often invisibly embedded into existing workflows.

This development also signals a broader trend: AI is escaping the screen. As intelligence migrates into physical devices, businesses will need to think beyond software licenses toward regulation, liability, safety, and trust in autonomous systems operating in real-world environments.

CALLS TO ACTION

🔹 Track advances in microrobotics and medical robotics, even if adoption is years away

🔹 Plan for AI beyond software, including physical and embedded systems

🔹 Monitor regulatory and safety discussions around autonomous medical and industrial devices

🔹 Evaluate long-term partnerships in robotics, diagnostics, and smart hardware

🔹 Educate leadership teams on how shrinking compute changes risk, scale, and opportunity

Summary by ReadAboutAI.com

https://www.washingtonpost.com/health/2025/12/12/robot-miniature-tiny-solar-computer/: December 23, 2025

Stanford Health Care Deploys AI Agents to Access Personalized RWE — TechTarget (Nov 13, 2025)

✅ EXECUTIVE SUMMARY

Stanford Health Care is piloting real-world evidence (RWE) AI agents inside Epic to support clinical decisions. Microsoft Dragon Copilot transcribes ambient clinical conversations into structured data; that data feeds Atropos Health’s Evidence Agent, which surfaces personalized studies and treatment pathways without the physician having to query manually.

Atropos’ internal research notes that only ~14% of medical decisions currently rely on strong evidence; AI agents now run instant mini-studies on comparable patients matched by gender, age, ethnicity, and comorbidities. This also supports health-equity use cases (e.g., kidney disease cohorts of Hispanic women).

The solution is also being positioned for:

- clinical trial eligibility

- formulary decisions

- prior authorization automation

- pharmacy policy optimization

Relevance for Business

This is a significant agentic-AI workflow milestone grounded in real clinical operations rather than hype. It shows:

- How enterprise workflows integrate AI invisibly

- Why healthcare operational AI is early but real

- How ambient data capture + RWE can reduce admin overhead

Any SMB in regulated industries can learn from this governance-first deployment.

Calls to Action

🔹 Study agent-to-agent orchestration workflows.

🔹 Explore ambient data capture in service settings.

🔹 Prioritize evidence-based automation—not black-box predictions.

🔹 Pilot AI where regulatory transparency is required.

Summary by ReadAboutAI.com

https://www.techtarget.com/searchhealthit/feature/Stanford-Health-Care-deploys-AI-agents-to-access-personalized-RWE: December 23, 2025

IBM’s $11B Acquisition of Confluent Shows Investors Still Believe in AI — Fast Company (Dec 8, 2025)

✅ EXECUTIVE SUMMARY

IBM is paying $11 billion in cash to acquire Confluent, the leading real-time enterprise data-streaming platform. Confluent stock jumped nearly 30% after the announcement, an indicator that despite market skepticism, investor appetite for AI-ready infrastructure remains strong.

Confluent gives IBM a platform for event-driven data flows, essential for agentic AI, LLM-powered automation, and real-time analytics. IBM CEO Arvind Krishna says the deal creates “a smart data platform built for AI,” a positioning reinforced by IBM’s growing generative-AI book of business (more than $9.5B as of Q3 2025).

IBM will operate Confluent as a distinct business unit, signaling plans to keep Kafka-style streaming neutral while folding it into enterprise-grade governance and IT modernization. The acquisition also shows AI strategy shifting from model obsession to data-pipeline ownership.

Relevance for Business

SMB executives should note a strategic shift: AI value is moving to data readiness and infrastructure. Organizations without clean, governed, real-time data cannot deploy agentic AI or automation. Confluent becoming IBM-owned signals consolidation and rising expectations for enterprise-grade data interoperability.

Calls to Action

🔹 Evaluate your data-streaming maturity; batch-only pipelines may not support real-time AI.

🔹 Prepare for event-driven architectures to enable automation.

🔹 Consider vendor consolidation strategies in 2026.

🔹 Use this as a market signal: AI infrastructure remains investable.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91456429/confluent-cflt-stock-price-ibm-11-billion-deal-shows-investors-still-think-ai-is-popping: December 23, 2025

THE ENTRY-LEVEL HIRING PROCESS IS BREAKING DOWN — THE ATLANTIC (DEC. 2025)

Summary (Executive Edition)

The Atlantic argues that America’s entry-level hiring system has broken down: companies demand experience for “entry-level” roles and rely on endless automated filters, AI résumé screens, and months-long interview gauntlets. The article notes that lower- and middle-income applicants are hit hardest, because unpaid internships, relocation, and costly certifications have become prerequisites.

Employers are using AI-driven screening systems that reject viable candidates early, creating a paradox: companies complain about talent shortages while turning away qualified workers. The piece suggests the economy is wasting productive capacity, particularly among recent college graduates.

The story warns that AI-mediated hiring may widen inequality, depress mobility, and eliminate informal pathways into careers.

Relevance for Business

- SMBs face a hiring arbitrage moment: move faster than corporate HR, sweep up talent.

- Automated screening ≠ better outcomes — executives who rethink hiring gain competitive advantage.

- There is PR and legal exposure: algorithmic bias regulation is coming.

Calls to Action

🔹 Scrap degree requirements and demands for unpaid “experience.”

🔹 Recruit on potential — not filters.

🔹 Audit AI-hiring tools for disparate impact.

🔹 Pilot paid apprenticeships to build your own pipeline.
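One common starting point for auditing AI-hiring tools for disparate impact is the “four-fifths rule” from the U.S. EEOC’s Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80% of the highest group’s rate is generally treated as evidence of adverse impact. The sketch below applies that check to pass-through counts from an AI résumé screen. The group labels, counts, and function names are hypothetical, and a real audit would add statistical testing and legal review.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Applicants and screen passes for one applicant group (hypothetical data)."""
    applicants: int
    advanced: int

    @property
    def selection_rate(self) -> float:
        # Share of this group's applicants that the screen advanced.
        return self.advanced / self.applicants if self.applicants else 0.0


def adverse_impact_ratios(groups: dict[str, GroupOutcome]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    best = max(g.selection_rate for g in groups.values())
    if best == 0:
        raise ValueError("no group was advanced; impact ratios are undefined")
    return {name: g.selection_rate / best for name, g in groups.items()}


def flag_four_fifths(groups: dict[str, GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths (80%) threshold."""
    ratios = adverse_impact_ratios(groups)
    return sorted(name for name, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical pass-through counts from an AI résumé screen.
    screen = {
        "group_a": GroupOutcome(applicants=400, advanced=120),
        "group_b": GroupOutcome(applicants=300, advanced=60),
        "group_c": GroupOutcome(applicants=200, advanced=54),
    }
    for name, ratio in adverse_impact_ratios(screen).items():
        print(f"{name}: impact ratio {ratio:.2f}")
    print("below four-fifths:", flag_four_fifths(screen))
```

With these made-up counts, group_b’s 20% pass rate is two-thirds of group_a’s 30% rate, so it is flagged. A flag is a prompt for closer review of the tool, not a legal conclusion.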

Summary by ReadAboutAI.com

https://www.theatlantic.com/ideas/2025/12/grade-inflation-ai-hiring/685157/: December 23, 2025

AI’S ENERGY IMPACT IS STILL SMALL — BUT HOW WE HANDLE IT IS HUGE — MIT TECH REVIEW (MAY 20, 2025)

Summary (Executive Edition)

This MIT Technology Review opinion piece argues that AI’s energy demand is a climate stress test. Data centers currently account for 4.4% of U.S. electricity use, and projections show they may consume 6–12% by 2028, with demand concentrated in states like Virginia, where data centers already account for about 25% of load.

The article frames AI as the “small problem we must solve before electrifying everything else”: EVs, heat pumps, industry. The recommended solution is a “Grid New Deal”: transmission upgrades, clean-power incentives, transparency mandates, nuclear deployment, and demand management.

Ethical AI now includes climate ethics.

Relevance for Business

- AI-compute inflation will pressure utility rates, product margins, and cooling costs.

- Early movers in clean-powered AI sourcing lock in cost advantages.

- ESG investors will demand energy disclosures for AI usage.

Calls to Action

🔹 Ask vendors for carbon-intensity reporting.

🔹 Run pilots on demand-response compute (shifting flexible AI workloads away from grid peak hours).

🔹 Site compute in clean-grid states.

Summary by ReadAboutAI.com

https://www.technologyreview.com/2025/05/20/1116274/opinion-ai-energy-use-data-centers-electricity/: December 23, 2025

https://www.technologyreview.com/2025/05/20/1116327/ai-energy-usage-climate-footprint-big-tech/: December 23, 2025

WE DID THE MATH ON AI’S ENERGY FOOTPRINT — HERE’S THE STORY YOU HAVEN’T HEARD — MIT TECH REVIEW (MAY 20, 2025)

Summary (Executive Edition)

MIT Technology Review offers the most granular accounting yet of per-query AI power usage, noting that 80–90% of AI’s energy now goes to inference rather than training. It calculates that the largest Llama model tested (Llama 3.1 405B) can require 6,706 joules per text response, and a 5-second AI video can require 3.4 million joules, equivalent to riding 38 miles on an e-bike.
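The per-output figures above can be turned into a rough monthly energy estimate, since one watt-hour is 3,600 joules. The sketch below plugs in the article’s 6,706-joule text response and 3.4-million-joule video clip; the monthly volumes and the $0.15/kWh electricity price are hypothetical placeholders, and the result estimates underlying energy use, not anything a vendor will actually bill.

```python
JOULES_PER_WH = 3_600  # 1 watt-hour = 3,600 joules

# Per-output energy figures quoted in the MIT Technology Review analysis.
TEXT_RESPONSE_J = 6_706      # one text response from a large Llama model
VIDEO_5S_J = 3_400_000       # one 5-second generated video clip


def monthly_kwh(joules_per_output: float, outputs_per_month: int) -> float:
    """Estimated monthly energy in kWh for a given output volume."""
    return joules_per_output * outputs_per_month / JOULES_PER_WH / 1_000


def monthly_cost(kwh: float, usd_per_kwh: float) -> float:
    """Energy cost at a flat electricity price (hypothetical rate)."""
    return kwh * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical workload and price; replace with your own numbers.
    price = 0.15
    text_kwh = monthly_kwh(TEXT_RESPONSE_J, 100_000)
    video_kwh = monthly_kwh(VIDEO_5S_J, 1_000)
    print(f"100k text responses: {text_kwh:,.1f} kWh (~${monthly_cost(text_kwh, price):,.2f})")
    print(f"1k video clips: {video_kwh:,.1f} kWh (~${monthly_cost(video_kwh, price):,.2f})")
```

At these assumed volumes, 1,000 short video clips use roughly five times the energy of 100,000 text responses, the kind of comparison a per-query compute budget is meant to surface.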

The article shows that U.S. data-center electricity use has doubled since 2017 and now accounts for 4.4% of national load, with leading utilities turning to natural gas for baseload power. Transparency is nearly nonexistent, leaving energy costs in a black box that ratepayers may end up subsidizing.

Lawrence Berkeley National Laboratory projects that AI alone could require 165–326 terawatt-hours per year by 2028, enough to power 22% of U.S. households, and the article warns that ratepayers may absorb higher bills.

Relevance for Business

- AI usage is an operating cost. CFOs must plan around inference spend.

- Utilities may impose rate surcharges tied to data-center demand.

- Regulatory disclosure mandates are inevitable.

Calls to Action

🔹 Track per-query compute budgets.

🔹 Swap in smaller, more energy-efficient models for simpler tasks.

🔹 Build an internal AI-emissions inventory.

Summary by ReadAboutAI.com

https://www.technologyreview.com/2025/05/20/1116327/ai-energy-usage-climate-footprint-big-tech/: December 23, 2025

AI Risk, AI in the Physical World, and Whether AI Might Be Conscious

🧭 EXECUTIVE SUMMARY — December 23, 2025

1. Is humanity on a collision course with AI? — Fast Company (Dec. 10, 2025)

2. AI could transform the physical world — Fast Company (Dec. 8, 2025)

3. Anthropic’s Kyle Fish is exploring whether AI is conscious — Fast Company (Dec. 4, 2025)

This week’s Fast Company coverage delivers a powerful contrast: rising fears that AI could automate labor, concentrate economic power, or even cause catastrophic harm, alongside a more grounded call for human-in-the-loop engineering, and finally a speculative discussion about whether AI systems could have subjective experience or suffering. For SMB leaders, these conversations aren’t academic—they shape regulation, capital allocation, hiring strategy, operational risk, and brand trust as we enter 2026.

In the first piece, Nate Soares warns that society is “building the airplane while flying it with no landing gear,” arguing that we are not prepared for the negative externalities of superintelligence, nor for a world where economic value concentrates among a small elite. The second piece shifts from existential risk to practical industrial deployment, noting that autonomous vehicles, life-science imaging, and AI-driven materials discovery still depend heavily on human judgment and expertise. The third explores whether models like Claude could exhibit conscious-like states, highlighting Anthropic’s investment in AI welfare testing, including the shocking claim of a 20% probability that chatbots may have some form of conscious experience.

Taken together, these stories reinforce one message: AI is accelerating faster than social, economic, and ethical frameworks. Businesses—especially SMBs—cannot sit on the sidelines.

📌 Relevance for Business (SMBs & Mid-Market Leaders)

- Regulation and Liability: If industry watchdogs frame superintelligence risk as catastrophic, expect safety standards, audits, and model-risk controls to move down-market.

- Operational Design: Physical-world AI deployment still requires human expertise, safety operators, domain oversight, and training loops.

- Capital Ramp: If AI automates labor at scale, SMBs must prepare for business-model redesign—not cost-cutting alone.

- Ethics as Competitive Advantage: Questions around AI consciousness, welfare, or emotional manipulation will shape brand trust, HR adoption, and AI-governance policies.

- Strategic Hiring: Firms deploying automation will need operators, annotators, domain specialists, and safety reviewers—not just prompt engineers.

🔹 Calls to Action (Practical Takeaways for Executives)

🔹 Establish an AI-Safety & Governance checklist for every vendor or tool (misuse, hallucination, liability, IP leakage, harmful autonomy).

🔹 Require human-in-the-loop sign-off for any customer-facing automation, especially logistics, diagnostics, transportation, or compliance.

🔹 Evaluate whether 2026 AI adoption requires new job functions (annotation roles, supervisory operators, data-risk leads).

🔹 Add an AI ethics statement to customer-facing materials—transparency builds trust.

🔹 Run small domain-specific pilots using physical-world AI (quality-control vision, robotic automation, warehouse optimization).

🔹 Track emerging AI liability frameworks—expect insurance carriers to price AI-driven risk soon.

🔹 Begin monitoring employee and customer sentiment—trust and comfort are adoption bottlenecks.

🔹 Avoid vendor hype: adoption without expertise increases error exposure, legal exposure, and brand damage.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91448156/is-humanity-on-a-collision-course-with-ai-why-the-downsides-need-to-be-reckoned-with-soon: December 23, 2025

https://www.fastcompany.com/91448186/ai-could-transform-the-physical-world-to-do-so-it-will-need-human-expertise: December 23, 2025

https://www.fastcompany.com/91451703/anthropic-kyle-fish: December 23, 2025

https://www.fastcompany.com/section/world-changing-ideas: December 23, 2025

AMAZON IS BUILDING A SURVEILLANCE EMPIRE ON THE BACKS OF DELIVERY DRIVERS — DAIR (DEC 1, 2025)

Summary (Executive Edition)

DAIR’s report alleges Amazon has created a workplace-surveillance regime across Delivery Service Partners, forcing drivers to use AI cameras, phone apps, telematics, routing algorithms, and behavioral scoring.

The paper claims these systems reduce safety, penalize “normal” human behavior (looking down, drinking water), dock wages, and trigger automated terminations. By outsourcing the workforce, Amazon can evade labor-law accountability while implementing algorithmic control frameworks.

The report warns this model is becoming a blueprint for automation without worker protections, reshaping logistics labor worldwide.

Relevance for Business

- Surveillance-as-workforce-management carries legal, PR, and regulatory risk.

- Unions, the DOJ, and EU regulators are increasingly focused on algorithmic labor exploitation.

- SMBs with delivery/logistics ops must avoid Amazon-style opacity.

Calls to Action

🔹 Audit AI workforce-monitoring systems for harm.

🔹 Don’t tie pay to black-box algorithms.

🔹 Provide human appeal pathways.

Summary by ReadAboutAI.com

https://www.dair-institute.org/blog/amazon-is-building-a-surveillance-empire-on-the-backs-of-delivery-drivers/: December 23, 2025

https://www.dair-institute.org/projects/: December 23, 2025

https://www.dair-institute.org/team/: December 23, 2025

CEOS TO KEEP SPENDING ON AI, DESPITE SPOTTY RETURNS — WSJ EXCLUSIVE (DEC. 14, 2025)

Teneo’s annual survey shows 68% of CEOs plan to increase AI spending in 2026, even though fewer than half of current AI projects have generated positive returns.

AI shows the strongest returns in marketing and customer service, while security, legal, and HR lag. Investors expect AI payback within six months, while 84% of large-company CEOs believe it will take longer.

Notably, 67% of CEOs believe AI will increase entry-level hiring, and 58% expect senior leadership headcount to rise—undermining the “AI will replace jobs” narrative.

M&A optimism remains high—78% expect increased deal activity in 2026, following a 40% YoY rise in M&A deals.

Relevance for Business

Executives are signaling AI spending is non-optional—even with unclear ROI. AI may drive hiring, not shrink it, and payback expectations are shortening.

Calls to Action

🔹 Focus AI investment on marketing + customer experience, where ROI exists

🔹 Set 6–9 month ROI targets—not multi-year gambles

🔹 Expect talent expansion, not contraction

🔹 Prepare for AI-driven M&A consolidation cycles
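The six-month payback investors expect, and the 6–9 month targets suggested above, come down to a simple payback-period calculation: upfront cost divided by net monthly benefit. This sketch is undiscounted and ignores ramp-up time; the function names and the pilot’s cost and savings figures are hypothetical.

```python
import math


def payback_months(upfront_cost: float, monthly_benefit: float, monthly_run_cost: float) -> float:
    """Months until cumulative net benefit covers the upfront cost (simple, undiscounted)."""
    net = monthly_benefit - monthly_run_cost
    if net <= 0:
        return math.inf  # never pays back at these rates
    return upfront_cost / net


def meets_target(upfront_cost: float, monthly_benefit: float,
                 monthly_run_cost: float, target_months: float = 9) -> bool:
    """True if the project pays back within the target window."""
    return payback_months(upfront_cost, monthly_benefit, monthly_run_cost) <= target_months


if __name__ == "__main__":
    # Hypothetical customer-service pilot: $60k to deploy,
    # $12k/month in savings, $2k/month in run costs.
    months = payback_months(60_000, 12_000, 2_000)
    ok = meets_target(60_000, 12_000, 2_000)
    print(f"payback: {months:.1f} months; within 9-month target: {ok}")
```

Keeping run costs separate from the upfront spend matters here: a pilot whose monthly model and vendor fees eat most of its savings can miss the target even with a small launch budget.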

Summary by ReadAboutAI.com

https://www.wsj.com/tech/ai/ceos-to-keep-spending-on-ai-despite-spotty-returns-2eaeb6b9: December 23, 2025

BROADCOM EARNINGS: STOCK SINKS DESPITE RECORD REVENUE (WSJ, DEC. 11, 2025)

Broadcom posted record quarterly revenue of $18B and full-year revenue of $63.9B, beating expectations, driven overwhelmingly by AI accelerator chip sales to customers like Google, Meta, and OpenAI.

However, shares dropped because AI revenue carries lower margins and because executives guided to flat growth outside AI. CEO Hock Tan downplayed custom chips (XPUs), suggesting that next-generation GPUs will remain dominant and that many hyperscalers may eventually build their own designs. Investors worry about future backlogs, margin pressure, and dependence on AI buildout cycles.

Longer term, analysts see custom silicon potentially reaching 25–30% of the accelerated-compute market, with Broadcom positioned to control 70–80% of that segment. Many expect total AI chip demand to reach $1T annually.

Relevance for Business

Executives should expect AI compute pricing volatility, margin instability, and rapid chip architecture changes. If Broadcom is right, GPU dominance may continue longer, affecting cloud AI pricing and vendor dependence.

Calls to Action

🔹 Don’t assume custom silicon replaces GPUs—budget for both

🔹 Expect cloud providers to shift pricing based on AI chip margins

🔹 Optimize workloads to reduce dependency on volatile compute markets

🔹 Track XPU and custom-ASIC maturity before committing

Summary by ReadAboutAI.com

https://www.wsj.com/business/earnings/broadcom-avgo-q4-earnings-report-2025-d870bcfc: December 23, 2025

BROADCOM AND CHIP STOCKS MAKE J.P. MORGAN’S TOP PICKS (MARKETWATCH, DEC. 16, 2025)

J.P. Morgan analysts argue that AI-related semiconductor spending will keep running hot into 2026, despite persistent speculation about an “AI bubble.” Data-center CapEx is expected to jump another 50% next year, following an estimated 65% surge in 2025, which could benefit memory makers, networking vendors, ASIC developers, and chip-equipment suppliers.

Top picks include Broadcom, Marvell, Analog Devices, and Micron, with Broadcom forecast to generate $55B–$60B in AI revenue in FY2026 as adoption of application-specific integrated circuits (ASICs) accelerates. Marvell is positioned for upside as AI deployment expands and cyclical revenue returns. Analysts expect semiconductor industry revenues to grow 10–15% in 2026, with shortages ironically stretching demand cycles.

J.P. Morgan analyst Harlan Sur remains bullish long-term: rising compute intensity, greater enterprise adoption of AI, and an automotive/industrial recovery could sustain a “runway of several years” for chipmakers.

Relevance for Business

SMB leaders should note that AI infrastructure investment is still accelerating—not peaking. Chips, networking hardware, and memory will get more expensive and harder to acquire. Companies building AI workloads must budget for high-cost, scarce compute well into 2027.

Calls to Action

🔹 Budget for higher compute & GPU/ASIC pricing through 2026–27

🔹 Expect supply constraints—secure hardware or cloud credits early

🔹 Track Broadcom & Marvell for custom-compute partnerships

🔹 Review ASIC options if GPU access bottlenecks execution

Summary by ReadAboutAI.com

https://www.wsj.com/wsjplus/dashboard/articles/broadcom-and-these-chip-stocks-make-j-p-morgans-list-of-top-picks-693b1d5d: December 23, 2025

AI IS ABOUT TO EMPTY MADISON AVENUE (WSJ, DEC. 15, 2025 – OPINION)

Google, Meta, and Amazon are spending hundreds of billions of dollars on AI to automate advertising creation, testing, optimization, and placement—removing agencies from the loop. Their platforms (e.g., Google Performance Max, Meta Advantage+, Amazon Creative Studio) can generate hundreds of ad variations, benchmark results, and deploy winning campaigns at platform scale.

These systems now deliver 14–17% better campaign conversions, accelerating advertiser lock-in and turning Big Tech into one-stop AI creative factories. Creative freelance work is collapsing—WPP cut 25% of its freelancers. TikTok now offers free AI-generated avatars, and platforms like Adobe Firefly and Getty’s AI catalog are replacing stock photography.

The economics improve as the systems scale, meaning AI will consolidate the trillion-dollar ad industry, pushing out creative workers and agency intermediaries. The next frontier: video ads generated by Veo, Sora, and other multimodal systems for pennies.

Relevance for Business

Small businesses now access enterprise-grade ad automation, but dependence on platforms will erase negotiating leverage and pricing transparency. The winners will be firms that master platform AI ad tools—not agencies.

Calls to Action

🔹 Shift spend toward Performance Max / Advantage+ automation

🔹 Reduce reliance on legacy creative agencies

🔹 Re-skill teams for prompt-driven digital asset creation

🔹 Expect pricing power and lock-in from platform ecosystems

Summary by ReadAboutAI.com

https://www.wsj.com/opinion/ai-is-about-to-empty-madison-avenue-58ab2ea2: December 23, 2025

OPENAI UPDATES CHATGPT AMID BATTLE FOR KNOWLEDGE WORKERS — WSJ (DEC. 11, 2025)

OpenAI launched GPT-5.2, calling it its strongest model for professional knowledge work, with improvements in math, science, coding, spreadsheets, presentations, multimodal recognition, and tool use.

The rollout followed Sam Altman’s internal “code red” push to respond to Google’s Gemini, which now outperforms ChatGPT in expert-level reasoning, logic puzzles, and image-based tasks. Anthropic is also accelerating its enterprise sales push.

OpenAI claims GPT-5.2 beat or tied industry professionals on 70.9% of tasks in its GDPval evaluation, which spans 44 occupations in sectors including manufacturing, healthcare, finance, and professional services. Disney is investing $1B in OpenAI and licensing its characters for use in Sora.

Relevance for Business

The AI productivity war is now enterprise-focused—spreadsheets, presentations, analytics, and long-context project execution. SMBs gain access to knowledge-worker automation that once required analysts or consultants.

Calls to Action

🔹 Pilot knowledge-work automation (Excel, slides, reporting)

🔹 Compare Gemini vs. GPT-5.2 vs. Claude for accuracy and ROI

🔹 Track vendor multimodal roadmaps for images and video

🔹 Reskill teams to manage tool-driven workflows

Summary by ReadAboutAI.com

https://www.wsj.com/articles/openai-updates-chatgpt-amid-battle-for-knowledge-workers-995376f9: December 23, 2025

“She Blew the Whistle — Then Her Career Fell Apart” — Washington Post (Dec. 15, 2025)

Executive Summary

Tech whistleblowers who publicly expose safety failures, propaganda, platform harms, or political manipulation often face blacklisting, stalled careers, social isolation, and financial instability. The story centers on Yaël Eisenstat, Meta’s former head of election integrity, who alleged Facebook allowed sophisticated political ad targeting to mislead the public. After speaking out, she says, she was ghosted by employers and cut out of opportunities, and it took her four years to regain her career footing.

Multiple former Meta employees—Sarah Wynn-Williams, Arturo Béjar, Frances Haugen, Anika Collier Navaroli—describe ostracism, accusations of disloyalty, and legal intimidation intended to discourage criticism. Wynn-Williams faces potential gag-order fines of $50,000 per violation, and Meta is seeking “at least tens of millions of dollars” in damages.

Some whistleblowers end up blacklisted from Silicon Valley, relying on savings, becoming academics, or leaving the industry altogether. A small number—like Haugen—parlay visibility into books, speaking tours, or nonprofit leadership. But most report a severe emotional and economic toll, saying truth-telling rarely leads to industry reform, even though disclosures fueled hearings, legislation attempts, and new state-level whistleblower protections.

The article highlights emerging protections: the California AI accountability bill, a proposed U.S. AI Whistleblower Act, and nonprofits offering legal and emotional support for employees exposing AI safety risks, model vulnerabilities, user harm, or catastrophic-risk behavior.

Despite personal sacrifice, whistleblowers cite ethical duty: “I can sleep at night now.” The piece frames whistleblowing as a public-interest function without corresponding career safeguards, particularly relevant as AI accelerates high-risk deployment at scale.

🏢 Relevance for Business (SMBs & Mid-Market)

This matters to executives because:

- AI and data governance are becoming legal risk domains

- Employees raising concerns may trigger regulatory exposure

- Ethical culture strengthens reputation, hiring, retention, and customer trust

- State and federal laws will increase corporate liability for AI harm

- SMBs deploying AI without oversight risk brand damage and litigation

Ethics is no longer “nice to have.” It’s a compliance and competitive requirement.

🔹 Calls to Action

🔹 Establish a whistleblower-safe channel inside your company.

🔹 Build an AI risk review committee (privacy, safety, governance).

🔹 Run model-risk audits for bias, misinformation, and youth safety.

🔹 Train leadership on retaliation risks and legal protections.

🔹 Document decisions around AI deployment and content safety.

🔹 Treat ethical disclosures as innovation signals—not threats.

🔹 Add AI ethics to ESG and brand-trust messaging.

Summary by ReadAboutAI.com

https://www.washingtonpost.com/technology/2025/12/15/big-tech-whistleblowers-speak-out/: December 23, 2025

“A CIO’s Playbook for AI Investment” — Fast Company (Dec. 8, 2025)

🧭 Executive Summary

AI strategy isn’t a technology strategy; it is a business strategy powered by AI, argues Stephen Franchetti, former CIO at Slack and current CIO at Samsara. CIOs fail when they treat AI as a standalone science project instead of anchoring it to measurable business KPIs. At Samsara, that meant using AI to cut support chat volume by 59%, auto-resolve 27% of IT tickets, and reach a ~40% acceptance rate for AI code-assist suggestions, freeing engineers for higher-value work.

Franchetti recommends a venture-capital-style portfolio mindset: assume only 10% of AI pilots will yield high returns, which forces companies to run many small experiments, “fail fast,” and prioritize those with real business impact. AI must scale through change management, with CEO-level sponsorship, department-level innovation, and an “AI Champions Network” that teaches AI literacy and encourages bottom-up adoption.
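The 10% figure also shows why a funnel of small pilots beats a single big bet. Assuming, as a simplification the article does not make, that each pilot independently has a 10% chance of high returns, the odds of at least one winner among n pilots are 1 − 0.9^n. The sketch below computes those odds and the number of pilots needed for a chosen confidence level; the function names are illustrative.

```python
import math


def p_at_least_one_win(n_pilots: int, hit_rate: float = 0.10) -> float:
    """Probability that at least one of n independent pilots is a high-return winner."""
    return 1 - (1 - hit_rate) ** n_pilots


def pilots_needed(target: float, hit_rate: float = 0.10) -> int:
    """Smallest number of pilots giving at least `target` odds of one or more winners."""
    if not (0 < target < 1 and 0 < hit_rate < 1):
        raise ValueError("target and hit_rate must be strictly between 0 and 1")
    # Solve 1 - (1 - p)^n >= target for n, then round up.
    n = math.ceil(math.log(1 - target) / math.log(1 - hit_rate))
    # Nudge n to absorb floating-point error right at the boundary.
    while n > 1 and p_at_least_one_win(n - 1, hit_rate) >= target:
        n -= 1
    while p_at_least_one_win(n, hit_rate) < target:
        n += 1
    return n


if __name__ == "__main__":
    for n in (5, 10, 20):
        print(f"{n} pilots: {p_at_least_one_win(n):.0%} chance of at least one winner")
    print("pilots needed for 90% odds:", pilots_needed(0.90))
```

Under that assumption, a 5-pilot quarter gives about a 41% chance of at least one winner, a 10-pilot quarter about 65%, and roughly 22 pilots are needed for 90% odds. Real pilots are rarely independent, so treat these numbers as intuition rather than forecasts.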

The cultural message is equally important: frame AI as partnership, not replacement. Employees should see AI as a tool that eliminates low-value work, enabling more advanced development, customer support, and operations. Samsara uses AI help agents to reduce support load, accelerate software development, and unlock strategic work capacity across departments.

Franchetti concludes that a CIO’s mandate must shift from technology buyer to strategic portfolio manager, obsessively aligned to outcomes and cultural transformation. The question shifts from “What AI platform should we buy?” to “What business problem are we solving?”

🏢 Relevance for Business (SMBs & Mid-Market)

This article matters because mid-market leaders are now expected to:

- Deploy AI for measurable KPIs (sales, support efficiency, speed to market)

- Treat AI as ongoing portfolio investment—not a one-time software purchase

- Create internal training infrastructure to avoid “AI shelfware”

- Build cultures where AI augments workforce capacity rather than threatens it

For executives with limited resources, a 10% success expectation encourages experimentation without fear of failure.

🔹 Calls to Action

🔹 Ask one question per investment: What KPI does this AI improve?

🔹 Build a pilot funnel (5–10 small experiments each quarter).

🔹 Launch an AI Champions Network across sales, finance, and ops.

🔹 Establish CEO-sponsored AI priorities—not just IT ownership.

🔹 Track hard ROI metrics (tickets reduced, time-to-resolution, hours saved).

🔹 Position AI as workforce acceleration—not layoffs.

🔹 Stop chasing vendors; start from the business need and its target outcome.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91454162/a-cios-playbook-for-ai-investment: December 23, 2025

AI’S INFLUENCE ON E-COMMERCE IS STILL MUDDY (TECHTARGET, DEC. 5, 2025)

Cyber Week delivered record e-commerce activity: 202.9M shoppers, more of them shopping online than in stores, 7% sales growth, and heavy mobile traffic. However, it is still unclear how much AI contributed to these gains.

Adobe reported 758% growth in LLM-driven retail referrals, while Salesforce saw 300% growth and claims its Agentforce AI influenced 20% of orders worth $67B. But no external data validates these claims.

AI shopping agents are still niche, used by very few consumers. Analyst consensus: generative-AI search is promising, but agentic commerce may stall. Gartner predicts 15% of companies will abandon agentic commerce by 2028.

Meanwhile, buy now, pay later (BNPL), not AI, drove $10.1B in sales, up 9% YoY. Emotional purchases (gifts, discretionary items) still favor human judgment.

Relevance for Business

LLM discoverability matters more today than full AI agents. Retailers should optimize structured product feeds, metadata, and LLM-search compatibility, rather than chase unproven chat-commerce.

Calls to Action

🔹 Optimize content for LLM search and ChatGPT-style referrals

🔹 Don’t overspend on AI agents—pressure vendors for proof

🔹 Track BNPL economics as a revenue accelerant

🔹 Treat AI-influence claims with skepticism and demand audits

Summary by ReadAboutAI.com

https://www.techtarget.com/searchcustomerexperience/opinion/At-this-juncture-AIs-influence-on-e-commerce-is-still-muddy: December 23, 2025

EVALUATE 10 AI CHATBOTS FOR BUSINESS USE CASES (TECHTARGET, DEC. 3, 2025)

✅ EXECUTIVE SUMMARY

This report traces the evolution of business chatbots through three stages:

- Rule-based

- Intent-based NLP

- LLM-driven agentic systems

Demand is shifting toward multi-turn, transactional, workflow-integrated chatbots across HR, IT, customer service, sales, and regulated industries. Adoption drivers include retrieval-augmented generation, multimodal input, privacy controls, and enterprise connectors.

The article breaks the market into three strategic groups:

- Horizontal platforms (Cognigy, Kore.ai, Yellow.ai, Dialogflow)

- Foundation-model infrastructures (Azure OpenAI, Bedrock, Anthropic, OpenAI Enterprise)

- Vertical domain chatbots (Aisera, Hyro, Paradox, Kasisto, PolyAI)

It argues that chatbot selection hinges on governance maturity, integration readiness, and data residency—not model quality alone.

Relevance for Business

- Start with use-case clarity

- Map data governance

- Evaluate integration engineering constraints

- Avoid single-vendor lock-in

Calls to Action

🔹 Decide whether you need a horizontal platform, a vertical chatbot, or foundation-model infrastructure.

🔹 Prioritize enterprise connectors over fancy model demos.

🔹 Build multi-department deployment roadmaps.

🔹 Apply human-in-the-loop guardrails.

Summary by ReadAboutAI.com

https://www.techtarget.com/searchenterpriseai/tip/Evaluate-AI-chatbots-for-business-use-cases: December 23, 2025

OPENAI ENDS VESTING CLIFF TO WIN TALENT (WSJ EXCLUSIVE, DEC. 13, 2025)

OpenAI has eliminated its six-month equity vesting cliff, so new hires’ stock begins vesting immediately instead of after six months. It is a radical move in the AI talent war, aimed particularly at Meta, Google, Anthropic, and Elon Musk’s xAI, which has already shortened its own vesting schedule.

OpenAI is spending $6 billion this year on stock-based compensation—nearly half of projected revenue. Top researchers now command $100M-plus packages, and some received one-time multimillion-dollar bonuses after Meta tried to poach engineers.

The elimination of vesting cliffs shows that employee leverage now exceeds employer leverage. It also reflects instability: xAI has suffered departures across legal, finance, and engineering, plus reputational harm from antisemitic chatbot outputs and sexualized bot products.

Relevance for Business

AI hiring is moving toward no-strings equity, upfront compensation, and extreme bidding wars. That will raise the cost of AI development and shrink the talent pool available to SMBs.

Calls to Action

🔹 Assume talent scarcity and inflated compensation in AI projects

🔹 Outsource model-building unless you can pay Silicon Valley premiums

🔹 Evaluate vendor risk tied to talent churn and political reputation

🔹 Avoid long-horizon custom AI builds that require proprietary staff

Summary by ReadAboutAI.com

https://www.wsj.com/tech/ai/openai-ends-vesting-cliff-for-new-employees-in-compensation-policy-change-d4c4c2cd: December 23, 2025

AN AI BUBBLE BURST? EARLY WARNING SIGNS AND HOW TO PREPARE (TECHTARGET, DEC. 10, 2025)

The article warns that AI may be entering a reset, not a collapse, after two years of overinvestment and limited enterprise ROI. CIOs face fragmented tools, stalled pilots, and pressure from CFOs to prove value. According to MIT data, “95% of organizations are experiencing zero return” on generative AI.

Analysts highlight three concerns. The first is circular financing: Nvidia invests in OpenAI, which buys Nvidia chips, and Oracle finances OpenAI workloads. The second is unsustainable burn rates: OpenAI may be losing $12B per quarter and expects $44B more in losses through 2029. The third is national-security framing used to justify bailouts.

Multiple structural cracks are emerging:

- A projected 35 GW U.S. electricity shortfall by 2028

- Public confidence at its lowest since 1997

- CFO-led scrutiny replacing hype-led purchasing

Experts expect the reset to come in three waves: layoffs, then budget cuts to unproven AI divisions, and finally a healthier innovation cycle focused on narrow, profitable, practical AI.

Relevance for Business

SMB executives should prepare for a shift from AI novelty to ROI discipline. The winners will favor:

- narrow models

- edge AI

- clear 90-day value tests

- profit-first vendors

Calls to Action

🔹 Require measurable ROI within 90 days—or walk away

🔹 Favor narrow use cases over “general AI” claims

🔹 Audit AI budgets—cut 80% of experiments that never shipped

🔹 Partner only with profitable or cash-flow-stable AI vendors

🔹 Avoid “state-of-the-art” models when smaller options suffice

Summary by ReadAboutAI.com

https://www.techtarget.com/searchcio/feature/An-AI-bubble-burst-Early-warning-signs-and-how-to-prepare: December 23, 2025

COREWEAVE’S STAGGERING FALL AND AI BUBBLE FEARS (WSJ EXCLUSIVE, DEC. 15, 2025)

CoreWeave, a data-center darling backed by Nvidia, Magnetar, and Coatue, has lost $33B in market value in six weeks. The 46% collapse came amid fears of an AI debt bubble, construction delays, and dependence on OpenAI.

A 60-day rain delay in Texas pushed back completion of a 260-MW facility leased to OpenAI, triggering investor panic. Design revisions added further delays, and contradictory public statements from CoreWeave’s CEO worsened the slide.

The business model: borrow at high interest rates to buy Nvidia chips, install them in leased facilities, and rent out the GPU capacity. But operating margins are only about 4%, below the interest rates on its debt, so the company is unprofitable; it lost $110M last quarter. Analysts warn it has “the ugliest balance sheet in technology.”

Bond risk premiums are surging. The cost of insuring CoreWeave’s debt against default has hit 7.9 percentage points, reflecting systemic credit stress.

Relevance for Business

AI compute infrastructure is being funded with expensive debt and carries heavy vendor-concentration risk. Any slowdown in construction or demand could trigger credit crunches and service instability.

Calls to Action

🔹 Demand financial transparency from GPU/cloud vendors

🔹 Do not rely on a single GPU provider (the CoreWeave/OpenAI dependency shows the risk)

🔹 Build multi-region redundancy for GPU compute

🔹 Treat AI-infrastructure contracts like credit-exposure agreements

Summary by ReadAboutAI.com

https://www.wsj.com/tech/ai/coreweave-stock-market-ai-bubble-a3c8c321: December 23, 2025

TRACKING AI’S GROWING ENERGY APPETITE (MIT TECHNOLOGY REVIEW SPECIAL REPORTING PROJECT, 2025)

AI’s rapid expansion is quietly reshaping global energy demand. In a major new web project, MIT Technology Review presents one of the most comprehensive examinations to date of how much power AI consumes today, how fast that demand is growing, and where the energy will come from. The reporting goes beyond model training to focus on AI inference: the everyday queries, image generation, and video creation that make up real-world AI use. Experts say inference is poised to surpass training as the dominant driver of AI energy consumption.

The project traces the real-world consequences of this growth, from water-intensive data centers in Nevada to large-scale infrastructure projects in Louisiana, revealing how AI’s expansion is tied to regional resource strain, emissions, and energy trade-offs. While the report highlights why nuclear-powered data centers remain an uncertain solution, it also explores reasons for cautious optimism, including advances that could make future AI systems far more energy efficient—if decisions made now prioritize sustainability and transparency.

Relevance for Business

For SMB executives and managers, this reporting reframes AI not just as a software investment, but as a long-term infrastructure and cost issue. As AI usage scales, energy availability, pricing, and sustainability pressures will increasingly shape vendor costs, service reliability, and regulatory exposure—even for smaller organizations.

Understanding where AI’s power demands are headed helps leaders make smarter platform choices, anticipate cost pass-throughs, and align AI adoption with environmental and governance expectations from customers, partners, and regulators.

Calls to Action

🔹 Ask AI vendors about energy efficiency and how inference costs scale with usage

🔹 Factor infrastructure risk into AI strategy, not just features and performance

🔹 Prepare for cost volatility tied to data center expansion and energy markets

🔹 Align AI adoption with sustainability goals to meet stakeholder expectations

🔹 Track policy and reporting standards around AI energy use and emissions

Summary by ReadAboutAI.com

https://www.technologyreview.com/supertopic/ai-energy-package/: December 23, 2025

https://www.technologyreview.com/2025/05/20/1116327/ai-energy-usage-climate-footprint-big-tech/: December 23, 2025

Closing: AI update for December 23, 2025

Under the surface, 2025 was also about infrastructure, regulation, and risk. Nvidia’s Blackwell and Blackwell Ultra GPUs, plus “AI factory” systems like the GB200 NVL72, turned data centers into specialized AI power plants. They promise enormous performance gains, but they also tie AI progress to energy, cooling, and supply-chain constraints that affect pricing and availability for everyone, including SMBs. In Europe, the EU AI Act moved from theory to practice. Bans on “unacceptable-risk” AI took effect in February. By August, new transparency rules and codes of practice began applying to general-purpose AI models, requiring providers to document training data and risks more clearly.

For small and mid-size businesses, the net result is a landscape where powerful, consumer-grade AI (from GPT-5.2, Gemini 3, and Apple Intelligence) is increasingly available “out of the box,” while regulators and chipmakers reshape the cost, trust, and compliance environment underneath. The opportunity in 2026 will be less about “trying AI for the first time” and more about choosing the right mix of tools, vendors, and guardrails—and turning this year’s breakthroughs into concrete productivity, better customer experiences, and new revenue.

From all of us at ReadAboutAI.com: thank you for reading, learning, and building with us.

We look forward to 2026 with sharper insights, faster summaries, and continued curation for leaders who need clarity—not hype.

All Summaries by ReadAboutAI.com
