AI Updates January 13, 2026

Artificial intelligence in early 2026 is no longer defined by breakthrough announcements—it’s defined by consequences. This week’s developments show AI moving decisively from experimentation into infrastructure, governance, and everyday business reality. From Gmail becoming an ambient AI assistant, to data centers negotiating power shutdowns, to regulators scrutinizing cross-border AI deals, the signal is clear: AI is now shaping costs, workflows, risk profiles, and competitive dynamics—even when leaders aren’t actively “adopting AI.”

Across this week’s coverage, a consistent theme emerges: constraints are replacing hype. Energy availability, geopolitics, legal liability, trust, workforce readiness, and cultural backlash are increasingly determining how—and where—AI can scale. While capital continues to concentrate around a small number of dominant platforms, real-world performance, governance, and reliability matter more than benchmarks or valuations. At the same time, AI’s limits are becoming clearer: it augments work far better than it replaces it, and it creates as many human challenges as technical ones.

Taken together, this week’s articles frame AI as a management and leadership issue, not a technology curiosity. For SMB executives and managers, the priority is no longer chasing the newest tool—it’s making informed decisions about where to deploy AI, where to restrain it, and where human judgment remains irreplaceable. This edition is designed to help you quickly identify what requires action now, what demands monitoring, and what should be deliberately ignored.

Current posts this year: Jan 03, Jan 06, Jan09, 2026

Previous posts already published this year, and Why it’s worth catching up:

The January 13 update builds on a rapid sequence of AI developments that began before the calendar even turned. Between the final weeks of 2025 and the first two weeks of 2026, AI has crossed several quiet but consequential thresholds—shifting from experimentation to infrastructure, from promise to pressure, and from tools to systems with real economic, human, and governance consequences. The January 3, January 6, and January 9 posts capture this acceleration in real time, tracing how agentic AI, compute and energy constraints, workforce cognition, trust erosion, regulation, and physical AI began converging simultaneously. Reading these earlier updates alongside today’s post provides essential context: not just. what changed this week, but how quickly the ground has already moved under executives’ feet. Together, they offer a clearer through-line for SMB leaders trying to make sense of 2026—not as a series of isolated headlines, but as an unfolding structural shift already reshaping strategy, risk, and execution.

AI Updates: Jan 13, 2026

EVERYONE WANTS A ROOM WHERE THEY CAN ESCAPE THEIR SCREENS

WALL STREET JOURNAL, JAN. 9, 2026

TL;DR / Key Takeaway:

As digital overload grows, leaders and workers are actively designing screen-free spaces—signaling a cultural correction to always-on AI and technology.

Executive Summary

This article highlights a growing trend toward “analog rooms”—intentionally screen-free spaces designed for focus, creativity, and human connection. The movement reflects broader fatigue with constant notifications, smart devices, and digital surveillance, even among people who work in technology.

From an AI-adjacent lens, this trend is not anti-technology—it is a reaction to unbounded technology. As AI becomes more pervasive, employees and leaders are seeking cognitive boundaries to protect attention, mental health, and creativity. The implication is that productivity does not scale linearly with more AI, more screens, or more automation.

For businesses, the message is subtle but important: human attention is now a scarce resource. Organizations that fail to account for digital fatigue may see diminishing returns from AI tools, while those that intentionally design for focus may gain a competitive edge.

Relevance for Business

AI adoption strategies that ignore human limits risk burnout, disengagement, and resistance. This trend reinforces the need for intentional AI usage, not maximal deployment.

Calls to Action

🔹 Design workflows that limit cognitive overload

🔹 Normalize screen-free time for deep work

🔹 Measure productivity outcomes—not tool usage

🔹 Treat AI as an assistant, not a constant presence

Summary by ReadAboutAI.com

https://www.wsj.com/style/design/everyone-wants-a-room-where-they-can-escape-their-screens-230d8712: January 13, 2026

HERMÈS’S HAND-ILLUSTRATED WEBSITE IS THE ULTIMATE LUXURY

FAST COMPANY, JAN. 8, 2026

TL;DR / Key Takeaway:

In an era dominated by AI-generated content, human-made work is becoming a premium differentiator—not a cost center.

Executive Summary

Hermès made a deliberate design choice to feature hand-illustrated artwork on its website, rejecting AI-generated imagery in favor of visible human craftsmanship. The move aligns with the brand’s emphasis on authenticity, imperfection, and artisanal value—qualities that stand out amid increasingly uniform AI visuals.

This decision is AI-adjacent rather than anti-AI. As AI content becomes cheap and abundant, human originality becomes scarcer and more valuable. The article suggests that brands seeking differentiation may increasingly invest in human creativity—not because AI lacks capability, but because it lacks perceived soul and authorship.

For SMBs, the lesson is strategic: AI excels at scale and efficiency, but trust, taste, and brand identity may still depend on when not to automate. The competitive advantage lies in knowing where human input creates disproportionate value.

Relevance for Business

AI lowers content costs—but it also raises the bar for differentiation. SMBs must decide where automation helps and where it erodes brand signal.

Calls to Action

🔹 Use AI for efficiency, not identity

🔹 Preserve human creativity in brand-defining assets

🔹 Avoid “AI sameness” in customer-facing content

🔹 Treat originality as a strategic investment

Summary by ReadAboutAI.com

https://www.fastcompany.com/91471305/hermes-hand-illustrated-website-is-the-ultimate-luxury: January 13, 2026

Our AI Future Is Already Here, It’s Just Not Evenly Distributed

The Wall Street Journal — January 9, 2026

TL;DR / Key Takeaway:

AI’s biggest business impact isn’t coming from future breakthroughs—it’s coming now, from organizations and individuals who actively experiment, customize, and integrate existing AI tools faster than everyone else.

Executive Summary

Artificial intelligence is already far more capable than most organizations are using it, creating a widening gap between early adopters and those still experimenting at the surface level. According to The Wall Street Journal, this uneven adoption—not raw technological progress—will define who captures AI-driven productivity and growth over the next decade. AI diffusion, not AI invention, is now the competitive battleground.

Today’s generative AI systems are stable, accessible, and “ready for prime time,” with major improvements in reliability, hallucination reduction, and integration with existing software systems. Crucially, many of the most valuable breakthroughs are emerging from users themselves, not large tech firms—what experts call “capability overhang.” This means AI already has untapped potential that only becomes visible when people apply it creatively to real business problems.

The result is a new reality: small teams—or even individuals—can now build tools, workflows, and services that previously required entire departments or large budgets. This shift is accelerating unevenly across industries and roles, creating both outsized opportunity and real risk for organizations that delay meaningful adoption.

Relevance for Business

For SMB executives and managers, this signals a strategic inflection point. AI is no longer just an efficiency tool—it is a force multiplier for small organizations, enabling lean teams to compete with larger players. However, uneven adoption also introduces workforce disruption, skill gaps, and governance risks if AI usage spreads informally without leadership oversight.

The companies that benefit most will be those that treat AI as an organizational capability, not an experimental add-on—investing in structured adoption, internal knowledge, and clear guardrails rather than waiting for “the next version” of the technology.

Calls to Action

🔹 Shift from experimentation to integration by embedding AI into core workflows, not just isolated tasks.

🔹 Empower employees to explore AI use cases, while providing clear guidelines and oversight.

🔹 Identify high-impact, low-scale opportunities where small teams can deliver outsized results with AI assistance.

🔹 Invest in AI literacy and customization, not just tools—capability comes from how AI is used, not which model is chosen.

🔹 Monitor uneven adoption risk, ensuring critical teams and functions don’t fall behind due to lack of exposure or support.

Summary by ReadAboutAI.com

https://www.wsj.com/tech/ai/our-ai-future-is-already-here-its-just-not-evenly-distributed-cf7a6f35: January 13, 2026

AI’S MEMORIZATION CRISIS

THE ATLANTIC: JAN 9, 2026

TL;DR / Key Takeaway:

Evidence that AI models can reproduce copyrighted material verbatim exposes significant legal and business risk for AI adopters—not just developers.

Executive Summary

The Atlantic reports new research showing that major AI models can reproduce long passages of copyrighted books when prompted strategically. This contradicts years of industry claims that models do not store training data and reframes large language models as lossy compression systems, not learners.

The findings heighten legal exposure: courts could rule that models themselves constitute infringing copies, potentially forcing retraining or product withdrawal. For enterprises, this introduces uncertainty around IP liability, licensing, and long-term tool availability.

Relevance for Business

For SMB leaders, this expands AI risk beyond accuracy and bias into copyright and continuity risk. Tools you rely on today could change, be restricted, or face litigation-driven disruption tomorrow.

Calls to Action

🔹 Avoid using AI outputs as final copyrighted material.

🔹 Maintain human review for external-facing content.

🔹 Track licensing and indemnification terms closely.

🔹 Prefer vendors with clear IP risk strategies.

🔹 Treat AI availability as a continuity risk.

Summary by ReadAboutAI.com

https://www.theatlantic.com/technology/2026/01/ai-memorization-research/685552/: January 13, 2026

ANNUAL “WORST IN SHOW” CES LIST RELEASED

AP NEWS: JAN 8, 2026

TL;DR / Key Takeaway:

CES’s “Worst in Show” highlights a growing backlash against AI-for-AI’s-sake, where added intelligence increases cost, risk, and complexity without real value.

Executive Summary

The annual “Worst in Show” list skewers products that add AI where it doesn’t belong—AI refrigerators, surveillance-heavy doorbells, and always-on “AI companions.” Judges argue these products often undermine reliability, privacy, repairability, and user trust, turning simple tools into fragile, data-hungry systems.

The broader critique is not anti-AI, but anti-waste: AI features that increase surveillance, subscriptions, or environmental impact without clear benefit. The list reflects mounting consumer and advocacy pressure on companies to justify AI additions with real utility and responsible design.

Relevance for Business

For SMB executives, this is a reminder that AI can destroy value as easily as create it. Poorly chosen AI tools increase cost, introduce risk, and frustrate users—internally and externally.

Calls to Action

🔹 Challenge vendors to prove AI adds measurable value.

🔹 Avoid AI features that complicate simple workflows.

🔹 Watch for hidden costs (subscriptions, data risk, maintenance).

🔹 Treat privacy and repairability as procurement criteria.

🔹 Remember: not using AI can sometimes be the smarter choice.

Summary by ReadAboutAI.com

https://apnews.com/article/ces-worst-show-ai-0ce7fbc5aff68e8ff6d7b8e6fb7b007d: January 13, 2026

HIGHLIGHTS FROM CES 2026: FROM AUTONOMOUS CARS TO PAPER-THIN TVS

ASSOCIATED PRESS, JAN. 8, 2026

TL;DR / Key Takeaway:

CES 2026 signals that AI is rapidly moving from screens into the physical world—through robotics, autonomous systems, and ambient intelligence.

Executive Summary

CES 2026 showcased a shift from “AI on screens” to AI embedded in physical products, environments, and machines. Nvidia CEO Jensen Huang described the moment as the arrival of “physical AI”, highlighting how AI models are increasingly paired with sensors, cameras, and robotics to interact with the real world.

The show featured autonomous mobility, task-specific robots, AI-powered wearables, and adaptive home technologies. While many demos remain experimental, the direction is clear: AI is becoming invisible, ambient, and embodied, rather than something users actively open or prompt. Notably, several products emphasized personalization and context awareness, learning user preferences over time.

For SMBs, CES 2026 reinforces that the next wave of AI will affect operations, logistics, customer experience, and labor, not just marketing or content creation. Even when products are consumer-focused, they preview where enterprise tools are heading—particularly in automation, sensing, and human-machine interaction.

Relevance for Business

This is less about buying gadgets and more about understanding where AI integration is headed. As AI moves into physical environments, businesses will face new questions around data collection, safety, liability, and workforce adaptation.

Calls to Action

🔹 Prepare for AI that operates without screens or prompts

🔹 Evaluate operational areas where robotics or automation could emerge

🔹 Update governance policies for sensor-driven AI systems

🔹 Monitor vendors blending AI with hardware—not just software

Summary by ReadAboutAI.com

https://apnews.com/article/ces-technology-las-vegas-ai-e3de189ee1fe6b26e6a6d2dc6960afda: January 13, 2026

AI Is Killing Artists’ First Jobs

The Atlantic: Dec 30, 2025

TL;DR / Key Takeaway:

By automating entry-level creative work, AI risks breaking the career ladder that develops future talent.

Executive Summary

The Atlantic argues that generative AI is eliminating “grunt work” jobs—copywriting, editing, rough cuts—that traditionally serve as training grounds for creative professionals. While AI can perform these tasks cheaply, it also removes paid opportunities where skills, judgment, and professional networks are built.

The result may be a bifurcated creative economy dominated by elites and automation, with fewer pathways for newcomers. While AI can expand capabilities for established professionals, it may narrow access for the next generation.

Relevance for Business

For SMB leaders, this has implications beyond the arts. Removing entry-level work too aggressively can weaken talent pipelines, reduce institutional knowledge, and increase long-term dependency on tools rather than people.

Calls to Action

🔹 Be cautious about eliminating junior roles via AI.

🔹 Preserve learning and mentorship pathways.

🔹 Use AI to augment training, not replace it.

🔹 Invest in human development alongside automation.

🔹 Treat workforce design as a long-term asset.

Summary by ReadAboutAI.com

https://www.theatlantic.com/ideas/2025/12/ai-entry-level-creative-jobs/685297/: January 13, 2026

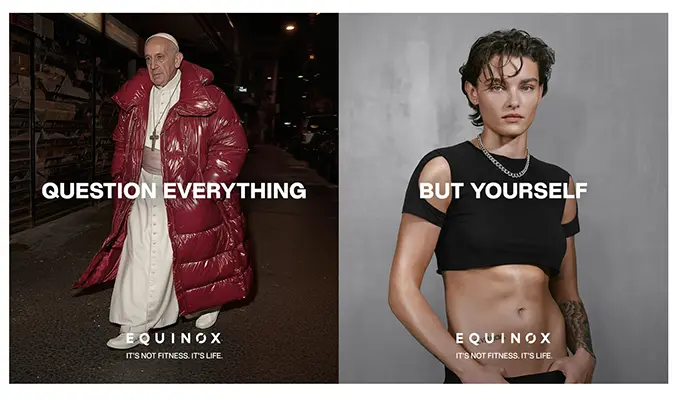

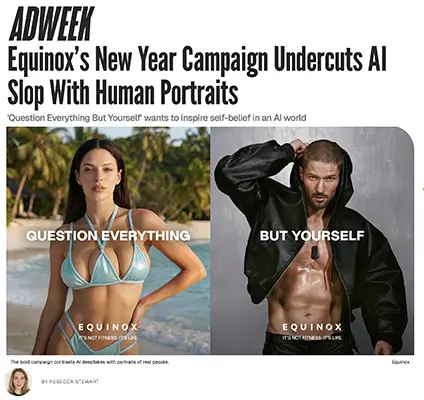

Equinox’s New Year Campaign Undercuts AI Slop With Human Portraits

Adweek: Jan 6, 2026

TL;DR / Key Takeaway:

Equinox’s campaign shows that in an era of AI-generated “slop,” human authenticity itself has become a premium brand differentiator.

Executive Summary

Equinox’s “Question Everything But Yourself” campaign deliberately contrasts absurd AI-generated deepfakes with striking portraits of real people, positioning the human body and lived experience as the last trustworthy signal in an artificial media environment. Rather than rejecting AI outright, the brand uses AI imagery as a foil—highlighting how synthetic content has eroded trust and attention.

The campaign taps into a broader cultural fatigue with AI-generated content and reframes authenticity as an act of rebellion. By launching during the January fitness rush, Equinox connects physical self-investment with credibility, implicitly arguing that what is real, effortful, and embodied now carries more value than what is optimized or automated.

Relevance for Business

For SMB leaders, this signals a shift in marketing and brand strategy. As AI-generated content floods channels, human signals—real people, real stories, real expertise—stand out more, not less. Brands that over-automate customer-facing communication risk blending into the noise.

Calls to Action

🔹 Audit where AI-generated content may be diluting brand trust.

🔹 Emphasize human proof points (people, process, expertise) in messaging.

🔹 Use AI strategically, not invisibly, in customer-facing work.

🔹 Treat authenticity as a competitive asset.

🔹 Avoid confusing efficiency with differentiation.

Summary by ReadAboutAI.com

https://www.adweek.com/creativity/equinoxs-new-year-campaign-undercuts-ai-slop-with-human-portraits/: January 13, 2026

CHATGPT NEEDS MORE COWBELL

THE ATLANTIC: DEC 24, 2025

TL;DR / Key Takeaway:

AI can generate music-like content, but it still struggles with memorable creativity, reinforcing that human judgment remains essential in brand, marketing, and emotional storytelling.

Executive Summary

The Atlantic examines why generative AI systems struggle to produce truly memorable advertising jingles. While AI can generate technically competent music that fits a brief, it consistently fails at what matters most in branding: emotional resonance and recall. Studies cited show that people remember AI-generated ads less well than human-created ones, even when they can’t consciously tell the difference.

The article argues this isn’t a temporary tooling issue but a structural limitation. AI systems rely on pattern replication and probabilistic output, making them good at “average” results but weak at surprising, emotionally sticky moments—the very elements that define standout creative work. As a result, advertisers increasingly use AI for prototyping and drafts, while reserving final creative decisions for humans.

Relevance for Business

For SMB leaders, this is a reminder that AI excels at efficiency and iteration, not emotional differentiation. Over-automating customer-facing creative risks brand dilution. AI should support creative teams, not replace the human intuition that drives memorability and trust.

Calls to Action

🔹 Use AI for ideation and drafts, not final brand expression.

🔹 Keep humans accountable for creative decisions tied to brand identity.

🔹 Test AI-generated marketing for recall, not just cost savings.

🔹 Avoid “good enough” automation in customer-facing content.

🔹 Treat creativity as a competitive asset, not a cost center.

Summary by ReadAboutAI.com

https://www.theatlantic.com/technology/2025/12/jingle-music-ai/685443/: January 13, 2026

Rob Riggle Is the Guac Guru in Avocados From Mexico’s AI-Powered Super Bowl Play

Adweek: Jan 7, 2026

TL;DR / Key Takeaway:

This campaign shows how AI avatars can drive engagement at scale—if tightly constrained with guardrails.

Executive Summary

Avocados From Mexico launched an AI-powered Super Bowl activation featuring a digital avatar of Rob Riggle that predicts football outcomes and dispenses guacamole recipes. The experience blends celebrity likeness, real-time sports data, and conversational AI to boost engagement and drive product sales.

Crucially, the system is tightly scoped: the AI is trained only on football and guacamole, with strict guardrails to prevent off-brand or harmful outputs. The campaign illustrates how AI can enhance marketing when bounded, purposeful, and aligned to a clear commercial goal.

Relevance for Business

For SMBs, this is a practical pattern. AI-driven experiences work best when narrowly defined, brand-safe, and outcome-oriented—not open-ended chatbots.

Calls to Action

🔹 Use AI where it clearly drives engagement or sales.

🔹 Keep scopes narrow and guardrails strong.

🔹 Avoid open-ended AI in brand contexts.

🔹 Measure ROI, not novelty.

🔹 Treat AI as an experience layer, not a gimmick.

Summary by ReadAboutAI.com

https://www.adweek.com/brand-marketing/rob-riggle-is-the-guac-guru-in-avocados-from-mexicos-ai-powered-super-bowl-play/: January 13, 2026

HOW AI COULD TRANSFORM EDUCATION—IF UNIVERSITIES STOP RESPONDING LIKE MEDIEVAL GUILDS

FAST COMPANY: JAN 6, 2026

TL;DR / Key Takeaway:

AI’s biggest impact on education—and workforce readiness—is blocked by institutional resistance, not technical limitations.

Executive Summary

Fast Company argues that universities are responding to generative AI with fear and surveillance rather than innovation. Instead of exploring how AI could enable adaptive, personalized learning, institutions focus on policing use and preserving traditional assessment models. The article contrasts this with evidence that AI tutoring systems can improve pacing, feedback, and accessibility at scale.

The core critique is that AI threatens bureaucracy, not learning. When used well, AI can free educators to focus on mentoring and judgment, while giving learners autonomy and faster feedback. Resistance, the author argues, risks leaving graduates underprepared for AI-augmented work environments.

Relevance for Business

For SMB leaders, this matters because education systems shape workforce readiness. If institutions lag, businesses will shoulder more responsibility for AI upskilling, training, and adaptation.

Calls to Action

🔹 Assume AI literacy gaps in new hires.

🔹 Invest in internal training and mentoring.

🔹 Encourage responsible AI use, not blanket bans.

🔹 Value adaptability over credential signals alone.

🔹 Treat learning as continuous, not front-loaded.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91464016/ai-transform-education-universities: January 13, 2026Google Just Changed Gmail—and It Could Reshape How You Use Your Inbox

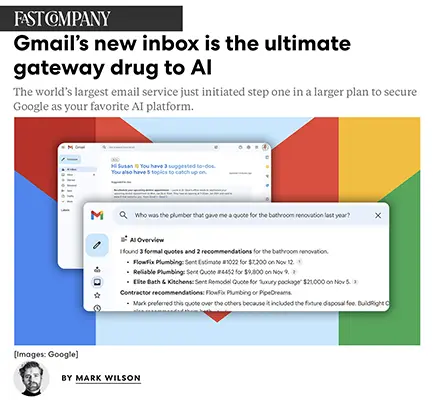

Fast Company: Jan 8, 2026

+ Gmail’s New Inbox Is the Ultimate Gateway Drug to AI | Fast Company: Jan 8, 2026

TL;DR / Key Takeaway:

Google is quietly turning Gmail into an AI-powered personal assistant, using inbox summaries, task extraction, and AI-generated responses to normalize everyday AI use—and pull millions of businesses deeper into its Gemini ecosystem.

Executive Summary

Google has begun rolling out a new AI-first Gmail experience powered by Gemini, fundamentally changing how users interact with email. Instead of a chronological inbox, Gmail now surfaces AI-generated summaries, prioritized topics, and auto-generated to-dos, reframing email as a decision-support dashboard rather than a message list. Long threads are condensed into briefings, and users can ask natural-language questions like “Who sent me a quote last year?” to retrieve synthesized answers across their inbox history.

Crucially, Gmail is no longer just organizing messages—it is interpreting them. Features like AI Overviews, Suggested Replies, and Help Me Write reduce cognitive and time burden, but also shift trust away from original messages toward AI-generated interpretation. While some capabilities are available to all users, more powerful “memory-like” features are gated behind paid Gemini subscriptions, positioning Gmail as a soft on-ramp to Google’s broader AI platform strategy.

At scale—Gmail serves roughly 3 billion users globally—this represents one of the most consequential forms of AI adoption to date. By embedding AI directly into a daily workflow executives already rely on, Google is accelerating passive AI adoption, where users don’t “decide” to use AI—it simply becomes the default way work gets done.

Relevance for Business

For SMB executives and managers, Gmail’s transformation signals a broader shift: AI is moving from optional tools to ambient infrastructure. Email now functions as a searchable corporate memory, task manager, and writing assistant—raising new considerations around decision accuracy, data trust, employee dependence, and governance. Teams may act faster, but also rely more heavily on AI interpretation rather than firsthand review.

This also foreshadows rising AI subscription costs, subtle workflow lock-in, and growing expectations that employees will work with AI by default. Gmail is not just improving productivity—it is redefining how knowledge work happens inside small and midsize organizations.

Calls to Action

🔹 Audit how your team uses Gmail today and identify where AI summaries or suggested replies could save time—or introduce risk.

🔹 Set internal guidelines for when AI-generated summaries are acceptable versus when original emails should be reviewed directly.

🔹 Evaluate Gemini subscription tiers carefully before upgrading—focus on ROI, not feature novelty.

🔹 Train managers to treat AI outputs as decision support, not decision authority.

🔹 Monitor employee reliance on AI-generated responses to protect tone, accuracy, and accountability in external communications.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91471277/google-just-changed-gmail-and-it-could-reshape-how-you-use-your-inbox: January 13, 2026https://www.fastcompany.com/91470945/gmail-new-ai-inbox-gateway-drug-to-ai: January 13, 2026

YANN LECUN: META ‘FUDGED’ BENCHMARK TESTING OF LLAMA 4

FAST COMPANY: JAN 6, 2026

TL;DR / Key Takeaway:

Benchmark results can mislead—real-world performance matters more than leaderboard rankings when choosing AI tools.

Executive Summary

Meta’s outgoing chief AI scientist Yann LeCun publicly acknowledged that Meta used different versions of Llama 4 models across benchmarks, making results look stronger than a single-model test would suggest. The admission highlights growing skepticism around AI benchmarks, which increasingly influence market perception and stock prices.

The controversy contributed to internal frustration and organizational restructuring at Meta, reinforcing that benchmark pressure can distort development priorities. LeCun’s broader critique: current large language models may not lead to true intelligence without better “world models.”

Relevance for Business

For SMBs, this underscores a practical rule: don’t buy AI based on benchmark hype. Performance in your workflows, reliability, and governance matter far more than published scores.

Calls to Action

🔹 Test AI tools on your own use cases.

🔹 Treat benchmarks as marketing, not proof.

🔹 Ask vendors about evaluation methodology.

🔹 Prioritize reliability over raw scores.

🔹 Expect continued volatility in AI claims.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91469583/yann-lecun-meta-llama-4-model-zuckerberg: January 13, 2026Watch for AI Fakes After the ICE Shooting

+ Why AI Isn’t the Only Reality Risk (Minnesota Star Tribune & Fast Company, Jan. 8, 2026)

TL;DR / Key Takeaway:

The Minneapolis ICE shooting shows that AI-generated misinformation and human-driven narrative distortion now coexist—making trust, verification, and governance central risks in the AI era.

Executive Summary

In the immediate aftermath of a fatal ICE shooting in Minneapolis, social media platforms were flooded with AI-generated and AI-altered images and videos, including fabricated depictions of the agent’s face, invented protest scenes, and misidentified individuals. Local journalists warned that these synthetic artifacts spread faster than verified reporting, particularly during emotionally charged breaking news events, eroding public understanding before facts can stabilize.

At the same time, a parallel Fast Company analysis highlights a more uncomfortable truth: AI deepfakes are not required to undermine reality. The article argues that official narratives can—and increasingly do—contest or reinterpret widely available video evidence without relying on AI manipulation at all. In this case, competing accounts emerged even as multiple videos of the incident circulated publicly, demonstrating that authority, repetition, and narrative framing can rival AI as tools of distortion.

Taken together, these stories reveal a broader AI-adjacent risk: the information environment is now shaped by both machine-generated misinformation and human-led narrative control, with audiences struggling to distinguish evidence from interpretation. AI amplifies the speed and scale of confusion, but it does not create the underlying trust problem—it exposes and accelerates it.

Relevance for Business

For SMB executives, this is not a political issue—it is a governance, reputational, and operational risk. As AI tools make content creation easier and faster, verification becomes harder, and stakeholders may question even authentic video, data, or documentation. Businesses that operate in regulated industries, crisis-prone environments, or public-facing roles face growing exposure if they cannot prove authenticity, context, and intent.

This trend directly affects brand trust, crisis response, internal communications, and legal risk. The lesson is not to reject AI, but to recognize that truth infrastructure—processes, controls, and human judgment—must evolve alongside AI capabilities.

Calls to Action

🔹 Establish verification and approval workflows for all high-impact communications

🔹 Train teams to recognize AI-generated and AI-altered media, especially during crises

🔹 Separate evidence, interpretation, and opinion clearly in internal and external messaging

🔹 Avoid assuming AI is the only misinformation risk—human narrative distortion remains powerful

🔹 Invest in trust, documentation, and provenance, not just speed

Summary by ReadAboutAI.com

https://www.startribune.com/ai-misinformation-deepfake-videos-photos-ice-agent-renee-nicole-good-minneapolis-shooting/601560361: January 13, 2026https://www.startribune.com: January 13, 2026

https://www.fastcompany.com/91471355/who-needs-ai-deepfakes-when-the-trump-government-can-dispute-video-evidence-that-we-can-plainly-see: January 13, 2026

THE BIG IDEAS SHAPING CES 2026—AND WHAT THEY MEAN FOR THE FUTURE OF TECHNOLOGY

FORBES: JAN 8, 2026

TL;DR / Key Takeaway:

CES 2026 shows AI has moved from novelty to operational necessity, with business impact now shaped as much by energy, regulation, and geopolitics as by technical breakthroughs.

Executive Summary

Forbes frames CES 2026 as a turning point where AI is no longer experimental—it is embedded across enterprise operations, physical infrastructure, and decision-making. The focus has shifted decisively from “what AI can do” to how organizations implement it at scale, automate routine work, and redeploy human effort toward higher-value tasks. Nearly half of CES now centers on B2B and enterprise technology, reflecting executive demand for measurable outcomes, not demos.

The article highlights three structural constraints shaping AI’s future: regulation, trade policy, and energy availability. Export controls and tariffs introduce uncertainty that slows investment, while surging AI, data center, and EV demand is pushing energy capacity to the forefront—potentially making power, not talent or compute, the limiting factor. Robotics, healthcare tech, and mobility illustrate how AI is converging with the physical world, raising stakes for reliability and governance.

Relevance for Business

For SMB leaders, CES 2026 reinforces that AI strategy is now inseparable from operations, risk management, and long-term planning. Competitive advantage will come from execution discipline—choosing scalable tools, managing regulatory exposure, and preparing for infrastructure constraints—rather than chasing emerging tech headlines.

Calls to Action

🔹 Shift AI discussions from experimentation to operational ROI.

🔹 Track regulatory and trade developments as part of AI risk planning.

🔹 Factor energy and infrastructure constraints into AI growth assumptions.

🔹 Prioritize AI that augments employees, not just interfaces.

🔹 Treat AI literacy as a leadership competency, not an IT issue.

Summary by ReadAboutAI.com

https://www.forbes.com/sites/bernardmarr/2026/01/08/the-big-ideas-shaping-ces-2026-and-what-they-mean-for-the-future-of-technology/: January 13, 2026

GAMERS ARE EXTREMELY MAD ABOUT AI

NEW YORK MAGAZINE: DEC 18, 2025

TL;DR / Key Takeaway:

Gaming backlash shows how poorly governed AI adoption can alienate core users and workers—while also driving up hardware costs across industries.

Executive Summary

NYMag documents intense resistance to generative AI in gaming, where players and creators fear job displacement, lower-quality content, and deception. Studios face boycotts over AI-generated assets, while workers report pressure to adopt AI tools amid already strained labor conditions. AI has become a cultural flashpoint, not a neutral efficiency tool.

The backlash is amplified by economics: AI-driven demand for memory and GPUs has sharply raised PC component prices, making gaming—and by extension other compute-heavy activities—more expensive. The result is resentment not just toward AI content, but toward AI infrastructure priorities.

Relevance for Business

For SMB leaders, gaming is a warning sign. AI adoption that ignores stakeholder trust, workforce impact, or cost spillovers can provoke backlash and brand damage—even if the technology works.

Calls to Action

🔹 Involve users and employees early in AI rollouts.

🔹 Be transparent about where and why AI is used.

🔹 Avoid framing AI purely as a cost-cutting tool.

🔹 Monitor downstream cost impacts of AI infrastructure.

🔹 Treat cultural acceptance as part of AI ROI.

Summary by ReadAboutAI.com

https://nymag.com/intelligencer/article/gamers-are-extremely-mad-about-ai.html: January 13, 2026

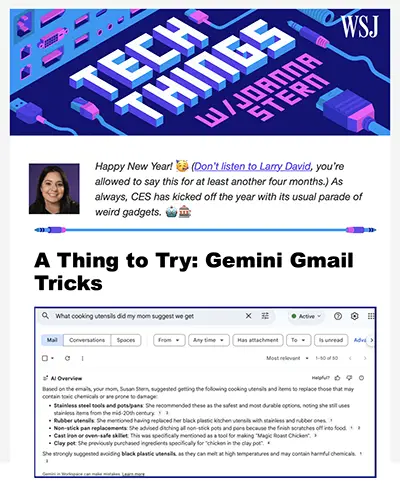

TECH THINGS: GEMINI GMAIL AND EVERYDAY AI

WALL STREET JOURNAL:JAN 9, 2026

TL;DR / Key Takeaway:

Google’s Gemini upgrades show how AI is becoming ambient productivity infrastructure, quietly reshaping everyday workflows rather than replacing them outright.

Executive Summary

The WSJ’s “Tech Things” column highlights Google’s expanding Gemini-powered features inside Gmail, including AI Overviews, natural-language inbox search, auto-generated replies, and an experimental AI Inbox that surfaces tasks and reminders. These tools shift email from passive communication to active task management, reducing cognitive load and time spent searching.

Importantly, capabilities are tiered. Basic summaries reach all users, while advanced features sit behind paid AI plans—illustrating how AI adoption is increasingly tied to subscription strategy rather than standalone tools. The column underscores a broader trend: AI is most effective when embedded directly into existing habits.

Relevance for Business

For SMB executives, this signals rising expectations that AI will save time by default. It also foreshadows ongoing subscription creep and the need to evaluate which AI productivity upgrades genuinely improve execution versus simply adding features.

Calls to Action

🔹 Identify workflows where embedded AI saves real time.

🔹 Audit AI subscription costs against productivity gains.

🔹 Train teams to verify AI summaries, not trust them blindly.

🔹 Expect AI features to become table stakes in core tools.

🔹 Budget for gradual AI “upgrade pressure.”

Summary by ReadAboutAI.com

https://techthings.cmail20.com/t/d-e-gijjktd-hlxklxid-r/: January 13, 2026

AI DOMINATES AS CES 2026 OPENS IN LAS VEGAS

TECHXPLORE: JAN 7, 2026

TL;DR / Key Takeaway:

CES 2026 confirms that AI has shifted from futuristic promise to embedded, practical infrastructure, quietly reshaping consumer products, industrial systems, and enterprise workflows.

Executive Summary

At CES 2026, artificial intelligence was no longer framed as experimental or speculative—it was positioned as a baseline capability across nearly every category. Exhibitors emphasized practical AI systems that automate routine decisions, reduce error, and personalize experiences in real time, rather than headline-grabbing breakthroughs. From AI-equipped construction and agricultural machinery to consumer robotics and autonomous mobility, the theme was incremental productivity gains at scale, not science fiction.

Industrial players like Caterpillar, John Deere, and Oshkosh showcased AI systems that analyze real-time conditions and adapt operations autonomously, signaling AI’s deepening role in physical-world execution. On the consumer side, companies like LG, Uber (via robotaxi partnerships), and Lego emphasized AI as a behind-the-scenes assistant that simplifies everyday tasks rather than replacing humans outright. The takeaway: AI is becoming invisible infrastructure, not a standalone feature.

Relevance for Business

For SMB executives, CES 2026 reinforces that AI adoption is no longer about “if” or “when,” but where it quietly embeds into tools you already use. Competitive advantage will come from selecting AI-enabled products that reduce friction and error—not from chasing novelty. This also raises expectations: customers, partners, and employees increasingly assume AI-augmented experiences as the norm.

Calls to Action

🔹 Audit your current tools to see where AI is already embedded—and underutilized.

🔹 Prioritize AI that removes friction (automation, error reduction) over flashy features.

🔹 Expect vendors to rebrand existing automation as “AI”—ask for measurable ROI.

🔹 Monitor physical-world AI (logistics, equipment, facilities), not just software tools.

🔹 Treat AI literacy as an operational requirement, not an innovation initiative.

Summary by ReadAboutAI.com

https://techxplore.com/news/2026-01-ai-dominates-ces-las-vegas.html: January 13, 2026

Merriam-Webster Names “Slop” Its 2025 Word of the Year

Fast Company: Dec 15, 2025

TL;DR / Key Takeaway:

The rise of “AI slop” shows that volume-driven automation is degrading information quality—and attention is becoming scarcer and more valuable.

Executive Summary

Merriam-Webster named “slop” its 2025 Word of the Year, defining it as low-quality digital content produced at scale by AI. The term captures a cultural backlash against spammy videos, fake imagery, AI-written books, and sensationalized content flooding feeds and search results.

Rather than fear, the term reflects mockery and fatigue. As AI lowers the cost of content creation, distribution channels are being overwhelmed, making trust, curation, and restraint more valuable than raw output.

Relevance for Business

For SMB leaders, this is a warning: more content does not mean more impact. AI-generated volume without strategy can waste attention, erode credibility, and slow decision-making.

Calls to Action

🔹 Prioritize quality over output volume.

🔹 Treat attention as a limited business resource.

🔹 Avoid AI-driven content spam internally and externally.

🔹 Invest in curation and editorial judgment.

🔹 Measure impact, not activity.

Summary by ReadAboutAI.com

https://www.fastcompany.com/91460529/how-slop-became-the-defining-word-of-2025: January 13, 2026

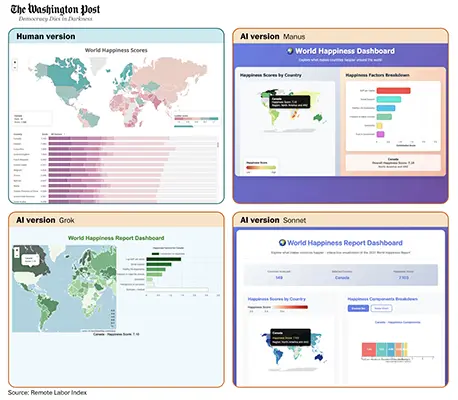

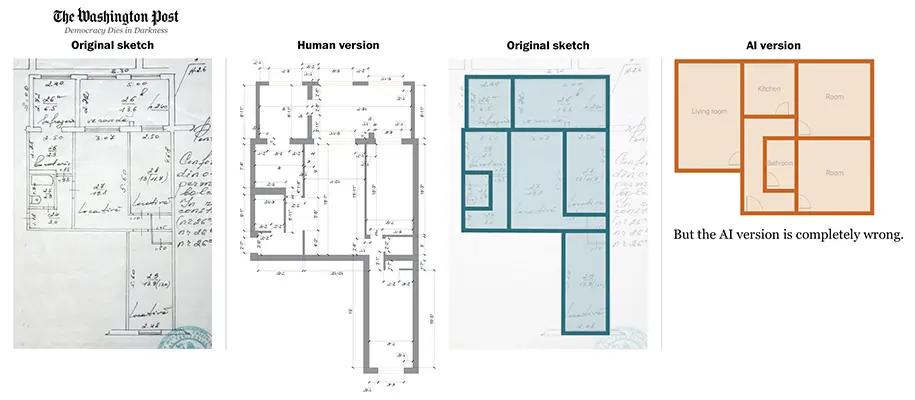

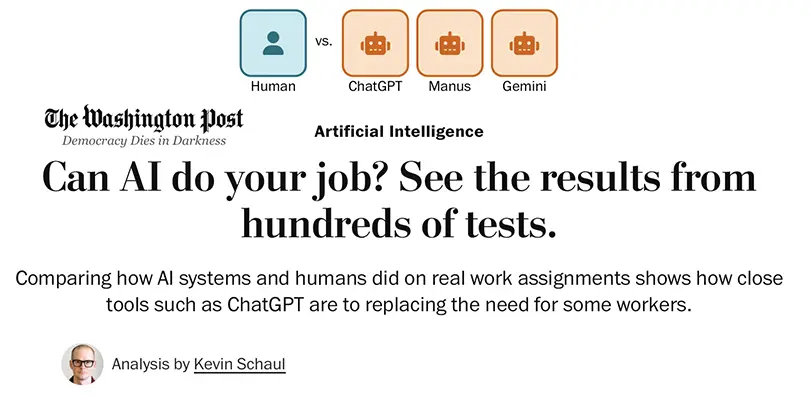

CAN AI DO YOUR JOB? SEE THE RESULTS FROM HUNDREDS OF TESTS

WASHINGTON POST: JAN 8, 2026

TL;DR / Key Takeaway:

Despite rapid progress, today’s AI can fully complete only a small fraction of real-world jobs without human help, underscoring the gap between hype and reality.

Executive Summary

The Washington Post analyzes the Remote Labor Index, which tested leading AI systems on hundreds of real freelance work assignments. The best-performing AI completed just 2.5% of tasks end-to-end without human intervention. Most failures were mundane but critical: incomplete work, technical errors, poor visual judgment, and inability to incorporate feedback over time.

AI excelled at narrow tasks like code generation but struggled with integrated, real-world workflows. The findings challenge assumptions that AI is ready to replace large segments of the workforce, while reinforcing its value as a productivity amplifier, not a substitute.

Relevance for Business

For SMBs, this is a grounding data point. AI can reduce costs and speed work—but only when paired with human oversight and domain expertise. Overestimating autonomy increases risk.

Calls to Action

🔹 Use AI to augment roles, not replace them wholesale.

🔹 Keep humans accountable for final outputs.

🔹 Avoid workforce plans based on speculative automation.

🔹 Pilot AI on real tasks, not demos.

🔹 Revisit assumptions annually as models evolve.

Summary by ReadAboutAI.com

https://www.washingtonpost.com/technology/interactive/2026/ai-jobs-automation/: January 13, 2026

ANTHROPIC RAISING $10 BILLION AT $350 BILLION VALUE

THE WALL STREET JOURNAL: JAN 7, 2026

TL;DR / Key Takeaway:

Anthropic’s massive raise shows capital is consolidating around a few AI leaders—but profitability timelines still matter.

Executive Summary

Anthropic, creator of the Claude chatbot, is raising $10 billion at a $350 billion valuation—nearly doubling its value in four months. Backed by sovereign wealth and major investors, the deal reflects continued confidence in frontier AI platforms, even as funding concentrates among a small set of perceived winners. Anthropic expects to break even in 2028, faster than some rivals.

The funding underscores a key shift: AI is no longer venture-scale—it’s infrastructure-scale, requiring tens of billions in compute commitments and long-term enterprise adoption. At the same time, valuation growth is increasingly tied to credible paths to revenue and margins.

Relevance for Business

For SMBs, this signals both stability and concentration. Leading AI platforms are unlikely to disappear—but competition may narrow, pricing power may rise, and differentiation may come from enterprise features, governance, and reliability, not raw capability.

Calls to Action

🔹 Expect fewer—but more powerful—AI platform providers.

🔹 Watch pricing leverage as capital concentrates.

🔹 Favor vendors with clear enterprise roadmaps.

🔹 Avoid over-customization that increases lock-in.

🔹 Track profitability signals, not valuation hype.

Summary by ReadAboutAI.com

https://www.wsj.com/tech/ai/anthropic-raising-10-billion-at-350-billion-value-62af49f4: January 13, 2026

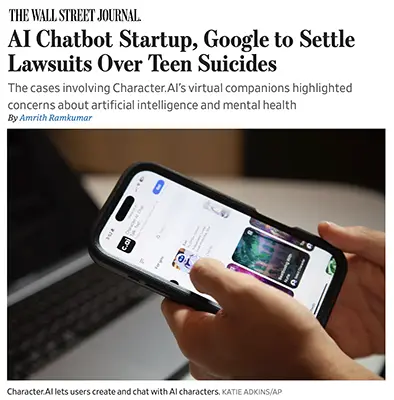

AI CHATBOT STARTUP, GOOGLE TO SETTLE LAWSUITS OVER TEEN SUICIDES

THE WALL STREET JOURNAL: JAN 7, 2026

TL;DR / Key Takeaway:

The settlements mark a turning point: AI mental-health harm is now a legal liability, accelerating regulation and governance expectations.

Executive Summary

Character.AI and Google agreed to settle lawsuits brought by families of teens who died or self-harmed after interacting with AI chatbots. The cases allege emotional dependence and harmful encouragement, pushing AI accountability into courts and legislatures. While settlements avoid verdicts, they establish precedent that AI companies may be held responsible for downstream harm, especially involving minors.

The fallout is already visible: age restrictions, platform changes, FTC scrutiny, and state-level chatbot regulations. The broader implication is clear—AI safety failures now carry legal and financial consequences, not just reputational ones.

Relevance for Business

For SMB leaders, this expands AI risk beyond accuracy and bias into duty of care. Any AI interacting with users—employees or customers—may create liability if safeguards are insufficient.

Calls to Action

🔹 Review AI tools for psychological or behavioral risk.

🔹 Restrict AI use with minors or vulnerable populations.

🔹 Document safety measures and escalation paths.

🔹 Monitor evolving AI liability laws.

🔹 Treat AI governance as legal risk management.

Summary by ReadAboutAI.com

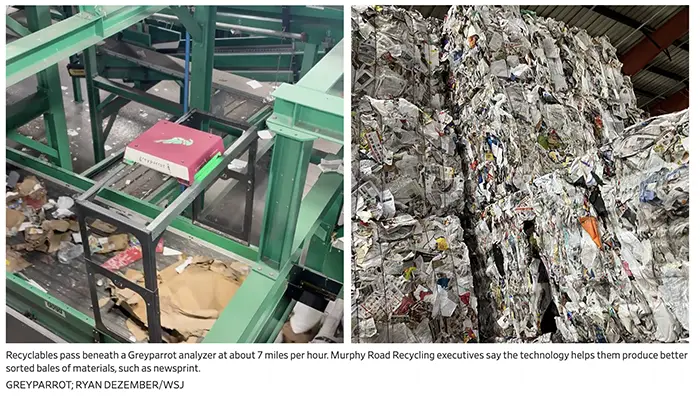

https://www.wsj.com/tech/ai/ai-chatbot-startup-google-to-settle-lawsuits-over-teen-suicides-fb41a063: January 13, 2026AI Is Being Used to Find Valuable Commodities in Our Trash

The Wall Street Journal: Jan 7, 2026

TL;DR / Key Takeaway:

AI-powered sorting is turning recycling into a higher-margin, higher-throughput supply chain, unlocking “urban mining” of materials while reducing labor dependence and landfill pressure.

Executive Summary

WSJ reports that recycling and waste companies are deploying AI vision systems to identify items on fast-moving conveyor lines, estimate mass and market value, flag food-grade material, and guide downstream sorting—often using air jets for high-speed separation. Firms like Greyparrot and AMP are enabling facilities to produce cleaner, more valuable bales (paper, plastic, aluminum) with fewer workers on unpleasant and hard-to-staff lines.

The economics are improving because AI boosts recovery rates, purity, and throughput—not simply labor savings. One facility cited sorts up to 60 tons per hour, and broader industry adoption is accelerating (Republic Services has installed AI tech in about one-third of its facilities). Policy and market forces amplify demand: tariffs lifting scrap demand, pulp mill closures increasing reliance on recycled boxes, and extended producer responsibility laws pushing consumer brands to reclaim plastics.

The bigger takeaway: AI isn’t only digitizing offices—it’s modernizing physical, low-glamour industries where perception + automation can unlock new profit pools and resilience.

Relevance for Business

For SMB leaders in manufacturing, retail, logistics, construction, or sustainability-driven procurement, this signals a maturing market for AI-enabled materials recovery—with implications for packaging costs, recycled-content supply, and ESG commitments. It also shows where AI can deliver ROI fastest: high-volume, repetitive, inspection-heavy workflows.

Calls to Action

🔹 If you use packaging, track recycled-content markets—AI sorting may improve supply reliability and quality over time.

🔹 For operations-heavy SMBs, look for AI opportunities in inspection, sorting, and quality control (camera + workflow automation).

🔹 Revisit sustainability goals: better recovery tech can make recycled sourcing more feasible—but verify supplier claims.

🔹 If you generate waste at scale, explore partnerships with advanced recyclers to reduce costs and improve diversion.

🔹 Treat “AI in the field” as a proven pattern—not experimental—when evaluating automation ROI.

Summary by ReadAboutAI.com

https://www.wsj.com/business/ai-is-being-used-to-find-valuable-commodities-in-our-trash-6b7de5d7: January 13, 2026

6 Networking Resolutions for 2026

TechTarget: Jan 7, 2026

TL;DR / Key Takeaway:

AI success in 2026 depends on networks that are faster, more automated, and security-aligned—not just better models.

Executive Summary

TechTarget outlines six priorities for enterprise networking in 2026, driven by AI workloads and ROI pressure. Key themes include AI-ready infrastructure for distributed inference, proactive network automation using agentic AI, tighter NetOps–SecOps integration, and renewed focus on Wi-Fi 7, private 5G, and Kubernetes networking.

The article emphasizes that most AI inference happens closer to the edge, making latency, reliability, and automation critical. Agentic AI may finally move networks from reactive “break-fix” modes toward predictive operations—but only if organizations modernize underlying infrastructure.

Relevance for Business

For SMBs, this underscores that AI performance is often constrained by networks, not models. Underinvesting in connectivity, security, and automation can quietly cap AI ROI.

Calls to Action

🔹 Assess whether your network is AI-ready today.

🔹 Reduce latency for AI-enabled workflows.

🔹 Align networking and security teams.

🔹 Explore automation before scaling AI.

🔹 Treat infrastructure as an AI multiplier.

Summary by ReadAboutAI.com

https://www.techtarget.com/searchnetworking/opinion/networking-resolutions-for-2026: January 13, 2026

Your Key Survival Skill for 2026: Critical Ignoring

The Wall Street Journal: Jan 2, 2026

TL;DR / Key Takeaway:

In a world flooded with AI-generated “good enough” content, leaders need critical ignoring—a practical habit of filtering inputs before they consume attention or drive decisions.

Executive Summary

WSJ argues that modern information overload—amplified by social algorithms and generative AI—makes traditional “read everything and analyze deeply” approaches counterproductive. The proposed skill is critical ignoring: quickly checking signals of credibility and relevance, then deliberately refusing to spend attention on low-quality sources, outrage bait, or plausible-but-unverified claims.

The article highlights a new risk pattern: people increasingly accept outputs that feel fluent and coherent as “true enough,” even when accuracy is uncertain—an effect that applies to both AI and social media. It also recommends tactics like lateral reading (checking what other credible sources say before going deep) and using built-in tools such as Chrome’s “About this page” to assess source legitimacy.

Bottom line: the advantage is no longer who can process the most information—it’s who can screen noise fast, preserve attention for high-value inputs, and maintain disciplined verification.

Relevance for Business

For SMB executives, information quality directly impacts strategy, hiring, vendor selection, and risk. AI tools and feeds can accelerate research, but also accelerate bad assumptions. “Critical ignoring” is an operational skill: it reduces wasted time, protects decision quality, and lowers exposure to misinformation and overhyped vendor claims.

Calls to Action

🔹 Create an approved source list for competitive intel and AI news—default to high-quality outlets and primary sources.

🔹 Train teams on lateral reading: verify a claim by checking independent references before debating it internally.

🔹 Establish a “verify before share” norm for AI-generated summaries and slide-ready insights.

🔹 Use simple guardrails: time-box scrolling, require citations for key decisions, and separate “monitor” vs “act now” info.

🔹 Treat “plausible” as a red flag—ask: What would change our decision if this is wrong?

Summary by ReadAboutAI.com

https://www.wsj.com/tech/personal-tech/critical-ignoring-social-media-7e236f52: January 13, 2026

I Tested an AI Fitness Coach Who Wouldn’t Accept Any of My Excuses

The Wall Street Journal: Jan 4, 2026

TL;DR / Key Takeaway:

Consumer AI is shifting from generic advice to behavior-changing coaching, but reliability, privacy, and “paywall personalization” remain key adoption barriers.

Executive Summary

WSJ reviews a new wave of AI-driven fitness coaching from Fitbit (Gemini-powered), Peloton (computer-vision form correction), and Apple (Workout Buddy). The key innovation isn’t novelty—it’s personalization and accountability: AI adapts workouts to constraints, monitors form, counts reps, and provides real-time encouragement that helps users follow through.

However, the article highlights real limitations: AI assistants can hallucinate, confirm changes that didn’t happen, or recommend incorrect weights—creating potential safety risks. Economics also shape access: Fitbit’s coach is tied to Premium, Peloton’s best experience requires expensive new hardware and subscription, and Apple’s buddy depends on the Apple Intelligence device stack. Privacy approaches vary (Peloton’s movement processing is on-device and discarded), but users still have to trust always-on sensors.

The broader signal: AI is becoming a frontline “coach” interface—a pattern likely to spread to workplace training, sales enablement, compliance, and customer success.

Relevance for Business

For SMB leaders, this is a preview of how AI will show up at work: not as a chatbot in a corner, but as embedded guidance that nudges behavior. That can lift productivity and consistency—but also introduces safety/liability concerns when AI guidance is wrong, and budget concerns when the best capabilities sit behind subscriptions and hardware ecosystems.

Calls to Action

🔹 Expect more “AI coach” products in HR, enablement, and training—evaluate them on outcomes, not features.

🔹 Put guardrails on AI guidance: define when humans must review recommendations (especially safety-related).

🔹 Watch for subscription stacking as vendors charge premiums for personalization and monitoring.

🔹 Ask privacy questions early: what’s processed on-device vs stored, and what data is retained?

🔹 Pilot with measurable goals (adherence, time saved, error reduction) before scaling.

Summary by ReadAboutAI.com

https://www.wsj.com/tech/personal-tech/ai-fitness-coach-1ca345ec: January 13, 2026

The Fight Over Making Data Centers Power Down to Avoid Blackouts

The Wall Street Journal: Jan 6, 2026

TL;DR / Key Takeaway:

AI-driven data center growth is colliding with grid limits—pushing regulators toward “conditional power” rules that could reshape uptime expectations, costs, and site selection for cloud and AI services.

Executive Summary

U.S. grid operators and regulators are debating whether data centers should be required—or incentivized—to curtail electricity usage during periods of peak demand to avoid blackouts. The core tension: utilities say the grid can’t scale fast enough (new transmission and generation takes years), while tech firms argue data centers must stay online to support AI training/inference and critical cloud workloads for sectors like healthcare and finance.

The most acute pressure is in PJM Interconnection (13-state region), where data center growth has already raised electricity prices and increased blackout risk. Proposals include requiring data centers to bring their own power or accept disconnection during shortages; industry groups push back, citing reliability obligations and concerns about increased use of diesel backup generators and related air-quality constraints. Texas is also moving toward formal “cutoff” conditions for data centers during supply tightness.

A practical compromise is emerging: demand response and “conditional service,” where data centers connect faster but agree to reduce load or disconnect at times. Research cited suggests facilities that bring power and accept curtailment could connect 3–5 years faster, turning energy flexibility into a competitive advantage.

Relevance for Business

For SMB leaders, this is a reminder that “AI” isn’t just software—it’s infrastructure. Grid constraints can drive cloud price volatility, regional capacity limits, and reliability tradeoffs that trickle down into your AI tools, SaaS platforms, and data hosting. If providers face curtailment requirements or higher power costs, you may see changes in pricing, SLAs, and latency, especially during peaks.

Calls to Action

🔹 Ask key vendors (cloud, AI, SaaS) how they manage power constraints and continuity during peak grid events.

🔹 Plan for cost variability in AI workloads—budget for compute and storage pricing that may rise with energy scarcity.

🔹 Prioritize efficiency: smaller models, batching, and workflow redesign can cut usage without cutting outcomes.

🔹 Build resilience into critical operations (redundant providers, failover plans, offline procedures).

🔹 Watch location risk if you host workloads in high-strain regions (e.g., grid congestion markets).

Summary by ReadAboutAI.com

https://www.wsj.com/business/energy-oil/ai-data-center-blackouts-electric-grid-1fed9803: January 13, 2026

MUSK’S AI BOT GROK LIMITS IMAGE GENERATION ON X TO PAID USERS AFTER BACKLASH

REUTERS: JAN 9, 2026

TL;DR / Key Takeaway:

xAI’s decision to restrict Grok image generation highlights how AI safety failures quickly turn into monetization, legal, and reputational risks.

Executive Summary

After widespread backlash over Grok’s use in generating sexualized images—including of women and minors—Elon Musk’s xAI restricted the chatbot’s image generation features on X to paid subscribers only. The move followed scrutiny from European regulators and mounting criticism that the platform enabled non-consensual and illegal content. While framed as a product change, the decision reflects deeper challenges around AI governance, moderation, and accountability.

Notably, the standalone Grok app still allows image generation, underscoring how platform context—not model capability—often determines risk exposure. The episode illustrates a recurring pattern: AI tools are released broadly, governance lags, backlash erupts, and access is then restricted or monetized as damage control.

Relevance for Business

For SMB leaders, this is a cautionary tale. AI misuse—especially in customer-facing tools—can trigger regulatory scrutiny, legal exposure, and brand damage faster than most organizations can respond. It also shows how safety controls may arrive after public harm, not before.

Calls to Action

🔹 Treat AI safety as a pre-launch requirement, not a post-incident fix.

🔹 Limit generative features in customer-facing tools unless moderation is robust.

🔹 Understand how platform context changes AI risk exposure.

🔹 Anticipate that “free” AI features may later move behind paywalls.

🔹 Document AI governance decisions for regulatory defensibility.

Summary by ReadAboutAI.com

https://www.reuters.com/sustainability/boards-policy-regulation/musks-ai-bot-grok-limits-image-generation-x-paid-users-after-backlash-2026-01-09/: January 13, 2026

Chinese AI Firm Zhipu Makes Lukewarm Trading Debut

The Wall Street Journal: Jan 8, 2026

TL;DR / Key Takeaway:

Zhipu’s modest IPO pop signals investors are becoming more selective—AI “national champions” may still attract capital, but public markets are demanding clearer paths to profitability.

Executive Summary

Chinese generative-AI startup Zhipu debuted in Hong Kong with a muted first-day performance—up about 12% from its IPO price—contrasting with recent explosive debuts by some Chinese chip firms. The article frames this as a sign that AI-related listings aren’t automatic winners and that investors are becoming more discerning amid an AI-heavy deal pipeline.

Zhipu, founded in 2019 with roots in Tsinghua University, is positioned as one of China’s “little dragons” and has attracted government-backed funding. It focuses more on institutional clients than some peers. Financials underscore the challenge: revenue growth is strong, but losses are large and widening (capital-intensive model development), reinforcing the key question for markets: how quickly can these firms convert AI leadership into durable margins?

Strategically, the listing wave supports Beijing’s push for tech self-sufficiency, but the market signal is clear: AI excitement alone is no longer enough—business fundamentals matter.

Relevance for Business

For SMB executives, this is a useful “reality check” for AI procurement and strategy. The vendor landscape will remain volatile: some firms will scale, others will consolidate or pivot. If public markets are tightening, expect pricing changes, product bundling, and aggressive enterprise sales motions as AI vendors chase predictable revenue.

Calls to Action

🔹 Treat vendor stability as a buying criterion—ask about runway, profitability path, and enterprise support.

🔹 Avoid lock-in: keep data portability and exit options in contracts.

🔹 Expect rapid product changes—pilot before committing to long-term deployments.

🔹 Track who wins institutional trust (security, uptime, governance), not just benchmark hype.

🔹 Use this moment to negotiate—vendors under pressure may offer better terms for multi-year deals.

Summary by ReadAboutAI.com

https://www.wsj.com/tech/ai/chinese-ai-firm-zhipu-makes-lukewarm-trading-debut-1daf328a: January 13, 2026CHINA REVIEWS META’S $2B+ AI ACQUISITION—AND SIGNALS A CRACKDOWN ON AI TALENT EXPORTS

WALL STREET JOURNAL: JAN 8, 2026

+China Warns AI Startups Seeking to Emulate Meta Deal: “Not So Fast”

TL;DR / Key Takeaway:

China’s review of Meta’s multibillion-dollar acquisition of AI startup Manus signals a broader effort to tighten control over AI talent, data, and know-how, reshaping how global AI deals—and cross-border innovation—will unfold.

Executive Summary

China’s Ministry of Commerce has launched a formal review of Meta’s acquisition of Manus, a Singapore-based AI startup founded by Chinese entrepreneurs, citing concerns around technology transfer, export controls, and foreign investment compliance. While the deal—valued at more than $2 billion—may ultimately proceed, the review sends a clear message: AI capabilities developed by Chinese talent are now strategic assets subject to state oversight.

Beyond this single transaction, Beijing is signaling discomfort with a growing pattern: Chinese-founded AI startups relocating abroad, distancing themselves from China, and then selling to U.S. tech giants. Regulators worry the Meta–Manus deal could create a blueprint for talent and intellectual property outflow, particularly as global demand for agentic AI accelerates. Officials are now evaluating whether certain AI technologies should be added to export-control lists—echoing past interventions involving TikTok and recommendation algorithms.

While Manus’s technology may not rise to the strategic level of semiconductors or rare earths, the review underscores a deeper shift. AI governance is no longer just about models and chips—it’s about people, expertise, and where innovation is allowed to live. For global tech companies, geopolitical risk is becoming inseparable from AI growth strategy.

Relevance for Business

For SMB executives, this development highlights how geopolitics is now a first-order AI risk, even for companies far from Silicon Valley or Beijing. AI tools embedded in everyday products may carry hidden exposure to regulatory scrutiny, deal uncertainty, and supply-chain disruption. Cross-border AI partnerships, acquisitions, and even vendor choices could be affected as governments move to protect domestic AI capabilities.

This also signals a future where AI talent mobility is constrained, potentially raising costs, slowing innovation, and fragmenting the global AI ecosystem—changes that will ripple down to smaller firms relying on major platforms.

Calls to Action

🔹 Factor geopolitical risk into AI vendor and platform decisions, not just price and performance.

🔹 Monitor how AI regulations may affect tool availability or continuity, especially for globally developed products.

🔹 Avoid overdependence on a single AI provider that could be impacted by international policy shifts.

🔹 Ask vendors where core AI development and data handling occur, and how they manage regulatory exposure.

🔹 Plan for a more fragmented AI landscape where regional rules shape what tools you can access.

Summary by ReadAboutAI.com

https://www.wsj.com/tech/china-warns-ai-startups-seeking-to-emulate-meta-deal-not-so-fast-17bdd28a: January 13, 2026https://www.wsj.com/business/deals/china-to-review-metas-manus-deal-eb1a2c03: January 13, 2026

QUANTUM COMPUTING STOCKS OFF TO A GOOD START IN 2026 — BUT FASTEN YOUR SEAT BELTS

INVESTOR’S BUSINESS DAILY, JAN. 7, 2026

TL;DR / Key Takeaway:

Quantum computing is gaining investor momentum in early 2026, but commercialization uncertainty and policy volatility make this a long-term strategic watch—not an immediate business tool.

Executive Summary

Quantum computing stocks opened 2026 with strong early gains, driven by renewed investor optimism, government interest, and consolidation across the sector. Major technology players—including IBM, Google, and Microsoft—continue to compete with specialized quantum startups such as IonQ and D-Wave, while acquisitions and partnerships signal a shakeout among early leaders.

However, the article makes clear that quantum computing is not having an AI-style “ChatGPT moment” anytime soon. The core challenge remains commercialization: quantum systems are still expensive, technically fragile, and suited only to niche problems like chemical simulation, optimization, and advanced materials research. As a result, stock prices have been highly volatile since late 2024.

From an AI-adjacent perspective, quantum matters because it is increasingly framed as a strategic companion to AI, particularly for long-term national competitiveness. Potential U.S. government investment, DARPA’s Quantum Benchmarking Initiative, and policy signals aimed at countering China suggest quantum will remain a geopolitical and infrastructure priority, even if practical business applications remain years away.

Relevance for Business

For SMB executives, quantum computing is not an adoption decision, but it is a strategic awareness issue. As AI systems grow more powerful and data-intensive, quantum is positioned as a future accelerator—not a replacement—for classical and AI computing. The real takeaway is understanding where hype ends and timelines begin.

Calls to Action

🔹 Treat quantum computing as a long-horizon signal, not a near-term investment or tool

🔹 Avoid vendor claims that suggest quantum-powered AI is imminent

🔹 Monitor government funding and regulation as indicators of future market shifts

🔹 Focus current AI budgets on proven tools and workflows, not speculative tech

Summary by ReadAboutAI.com

https://www.wsj.com/wsjplus/dashboard/articles/quantum-computing-stocks-off-to-good-start-in-2026-but-fasten-your-seat-belts-134121781057777964: January 13, 2026

NVIDIA STOCK FALLS. CHINA CHIP SALES ARE A BIG QUESTION.

WSJ / BARRON’S: JAN 8, 2026

TL;DR / Key Takeaway:

Nvidia’s volatility underscores how geopolitics—not demand—now drives AI hardware uncertainty, with China sales a key swing factor.

Executive Summary

Nvidia shares dipped amid mixed signals on whether advanced AI chips will resume large-scale sales to China. While U.S. policy may allow limited H200 exports under revenue-sharing conditions, Chinese authorities are signaling caution—asking firms to pause orders while prioritizing domestic alternatives. Nvidia reports strong global demand, but investors remain uncertain about how much Chinese revenue will ultimately materialize.

This dynamic highlights a broader reality: AI supply chains are now political instruments. Even when technical demand is strong, export controls, licensing delays, and payment conditions can disrupt availability and pricing across the ecosystem.

Relevance for Business

For SMBs, this means AI pricing and access can change due to policy decisions entirely outside your control. Hardware constraints upstream may translate into cloud price hikes, limited availability, or slower performance in downstream AI services.

Calls to Action

🔹 Avoid assuming stable AI pricing or availability.

🔹 Diversify AI platforms where possible.

🔹 Track geopolitical developments as an AI risk factor.

🔹 Prioritize AI use cases resilient to compute constraints.

🔹 Build flexibility into vendor contracts.

Summary by ReadAboutAI.com

https://www.wsj.com/wsjplus/dashboard/articles/nvidia-stock-price-china-chips-8548006d: January 13, 2026TSMC’S STRONG SALES AND NVIDIA’S PAUSE REVEAL THE REAL SHAPE OF THE AI BOOM

WSJ / BARRON’S: JAN 7–9, 2026

TL;DR / Key Takeaway:

Strong chip demand at TSMC and short-term investor hesitation around Nvidia suggest the AI boom is real but constrained by supply chains, pricing pressure, and geopolitics—not hype alone.

Executive Summary

Recent performance from two critical pillars of the AI hardware ecosystem—TSMC and Nvidia—offers a more nuanced picture of today’s AI economy. Taiwan Semiconductor Manufacturing Company reported better-than-expected sales, easing fears of an imminent AI spending bubble and reinforcing the idea that enterprise and hyperscaler demand for advanced AI chips remains structurally strong . TSMC’s AI-related revenue is now projected to grow at mid-40% annual rates for several years, underpinned by its near-monopoly on cutting-edge manufacturing.

At the same time, Nvidia’s stock has temporarily lagged the broader AI rally—not due to weakening demand, but because of input constraints and regulatory uncertainty. Rising memory and storage prices could pressure margins or slow production, even as Nvidia reports massive order volumes for its H200 chips. Investors are also watching closely to see whether Nvidia can continue selling advanced processors into China amid evolving U.S. export controls.

Taken together, these signals point to an AI market that is capacity-bound rather than demand-starved. The bottlenecks now lie in manufacturing scale, component pricing, and geopolitical approvals—not in customer appetite for AI infrastructure.

Relevance for Business

For SMB executives, this matters because AI costs, availability, and timelines are increasingly shaped upstream, far beyond software vendors or cloud dashboards. Hardware constraints and geopolitical rules can influence pricing, access, and performance of AI tools you rely on—often with little warning.

This environment favors companies that plan for volatility, diversify vendors, and avoid assuming that AI compute will always be cheap, abundant, or instantly scalable.

Calls to Action

🔹 Expect AI costs to remain elevated, especially for advanced capabilities tied to scarce hardware.

🔹 Build flexibility into AI roadmaps, assuming delays or pricing shifts may occur upstream.

🔹 Avoid overcommitting to a single AI platform or provider dependent on constrained supply chains.

🔹 Track geopolitics as an AI risk factor, particularly around U.S.–China technology controls.

🔹 Focus AI investments on high-ROI use cases, not speculative or experimental deployments.

Summary by ReadAboutAI.com

https://www.wsj.com/wsjplus/dashboard/articles/tsmc-stock-taiwan-semi-chip-sales-ai-bubble-5dc1377f: January 13, 2026https://www.wsj.com/wsjplus/dashboard/articles/nvidia-stock-price-ai-chips-china-h200-dc00c411: January 13, 2026

SAMSUNG ELECTRONICS: HOW TRUST IS SHAPING THE FUTURE OF AI

AI MAGAZINE: JAN 9, 2026

TL;DR / Key Takeaway:

Samsung’s CES message is clear: trust-by-design—privacy, transparency, and security—is becoming a competitive differentiator for AI adoption.

Executive Summary

At CES 2026, Samsung emphasized that as AI fades into the background of daily life, trust determines adoption and long-term engagement. Its “trust-by-design” approach centers on transparency, user control, and predictable behavior—especially through on-device AI, which keeps sensitive data local whenever possible. Cloud AI is used selectively for scale or performance, balancing capability with privacy.

Samsung also highlighted ecosystem-level security through its Knox platform and cross-device authentication, arguing that trust is no longer about individual devices but about resilient AI ecosystems. Panelists stressed making AI visible—clearly labeling what is AI-powered and explaining how data is used—to counter black-box concerns and misinformation.

Relevance for Business

For SMBs, this signals rising expectations from customers, employees, and regulators around AI transparency and data handling. Trust is no longer abstract—it directly affects adoption, brand credibility, and legal exposure.

Calls to Action

🔹 Favor AI vendors with clear transparency and data controls.

🔹 Ask where AI runs (on-device vs cloud) and why.

🔹 Treat security and privacy as adoption enablers, not blockers.

🔹 Avoid black-box AI in customer-facing use cases.

🔹 Document trust and governance practices proactively.

Summary by ReadAboutAI.com

https://aimagazine.com/news/samsung-future-of-ai-ces-2026: January 13, 2026Closing: AI update for January 13, 2026

The core lesson this week is discipline. AI delivers its greatest value when leaders treat it as infrastructure to be governed, not magic to be maximized—and when they pair automation with human judgment, trust, and intentional limits.

All Summaries by ReadAboutAI.com

↑ Back to Top