AI Development Summaries 9/2/2025

Introduction:

This week in AI brought high-stakes battles for control, infrastructure bottlenecks, and AI's deepening influence across society. Headlines were dominated by the lawsuit Elon Musk's xAI filed against Apple and OpenAI, which could reshape the industry's balance of power. Meta's launch of a new superintelligence lab also signals escalating competition at the frontier. At the same time, infrastructure realities are pressing. Nevada's desert data center boom raises urgent questions about water and energy use, and even Nvidia is showing limits to its growth despite record profits. On the cultural side, debates around Wikipedia's "Signs of AI Writing" guide, the AI takeover of classrooms, and the reinvention of middle management reveal how deeply AI is reshaping both trust and daily work.

For executives and managers, the signal is unmistakable: AI is no longer experimental—it’s systemic. This week’s developments underscore both new opportunities—like healthcare breakthroughs in prior authorization, design platforms like Figma expanding with AI, and Reddit reinventing search—and rising risks, from sustainability concerns to monopolistic control of AI access. The outcomes of these battles will determine who controls the next generation of AI tools, how much businesses will pay, and how organizations must adapt to survive and thrive in the AI-driven economy.

AI For Humans: August 29, 2025

Executive Summary for this week’s AI For Humans (Aug 29, 2025) podcast episode.

AI developments are accelerating across consumer tools, enterprise infrastructure, and robotics, with Google, OpenAI, Meta, and NVIDIA each making major moves this week. Google’s new “Nano Banana” AI image editor is being called the most powerful and user-friendly model to date. It enables natural-language photo manipulation with unprecedented character consistency, sparking comparisons to a “Photoshop killer.” Analysts and creators alike see it as both a threat to legacy workflows and a springboard for thousands of new startups built on accessible, fun, and powerful visual editing.

OpenAI introduced its Realtime Voice API, offering faster, cheaper, more expressive conversational AI. The system is already being integrated into telecom use cases (e.g., T-Mobile demos), but the bigger story is its developer flexibility—support for multimodal inputs and function calls makes it far more adaptable to enterprise systems. While consumer adoption may take longer, this update positions OpenAI as a leader in interactive voice and customer-support automation, a sector worth billions annually.

Meanwhile, Meta is struggling to retain talent at its Superintelligence Labs as some engineers leave for OpenAI. In a strategic pivot, Meta struck a major deal to license Midjourney's image-generation technology, effectively outsourcing its image-model capabilities. Rumors of its upcoming "Hypernova" AR glasses point the same way: rather than building everything in-house, Meta will invest heavily in ecosystem partnerships. This suggests a tightening battle among Big Tech to consolidate best-in-class AI tools into user-facing platforms.

Finally, infrastructure and robotics made headlines. NVIDIA unveiled its "Jet Nemotron" algorithm, claiming generation speedups of up to 53x over comparable language models, which could cut compute costs by nearly 98%. Microsoft open-sourced its VibeVoice text-to-speech system, adding momentum to rapid progress in AI-generated audio. Krea demonstrated a real-time AI video model that hints at the future of dynamic media production. In robotics, Unitree's A2 robot carried over 500 pounds upstairs, and Boston Dynamics showcased Spot performing a triple backflip. Both show how far robotic agility and load-bearing capabilities have advanced.
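The "nearly 98%" figure is simple arithmetic on the 53x claim. It holds only if compute cost per token falls in proportion to throughput, so a 53x speedup leaves 1/53 of the original cost. The minimal sketch below makes that assumption explicit. Its `accelerated_share` parameter is a hypothetical addition, an Amdahl's-law-style adjustment that shows why real savings shrink when only part of a budget goes to the accelerated workload.

```python
def cost_reduction(speedup: float, accelerated_share: float = 1.0) -> float:
    """Estimated fraction of compute cost saved by a throughput speedup.

    Assumption: cost per token scales as 1 / speedup for the accelerated
    share of spend; the remaining share (networking, storage, staff, etc.)
    is unchanged.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    if not 0.0 <= accelerated_share <= 1.0:
        raise ValueError("accelerated_share must be between 0 and 1")
    return accelerated_share * (1 - 1 / speedup)


for s in (2, 10, 53):
    print(f"{s}x speedup -> {cost_reduction(s):.1%} lower cost")
# 2x speedup -> 50.0% lower cost
# 10x speedup -> 90.0% lower cost
# 53x speedup -> 98.1% lower cost

# If accelerated inference is only 60% of total spend (hypothetical):
print(f"{cost_reduction(53, accelerated_share=0.6):.1%}")  # 58.9%
```

For ROI planning, the second case is usually the more realistic one. Headline speedups apply to specific models and workloads, not to a whole AI budget.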

Relevance for Business

For SMB executives and managers, this week underscores how AI tools are becoming both cheaper and more powerful while expanding across new modalities (voice, video, robotics). Google's Nano Banana signals a new wave of consumer-facing AI services that could disrupt creative industries, and OpenAI's realtime voice models will influence customer service, sales, and B2B workflows. Meta's Midjourney deal reveals that consolidation and strategic alliances will shape the AI ecosystem. NVIDIA's breakthroughs point toward a near future where AI deployment costs plummet, opening doors for businesses of all sizes.

Calls to Action for Executives

- Explore new creative workflows: Pilot tools like Google’s Nano Banana to reduce design and content-production costs.

- Assess customer-service automation: Test OpenAI’s Realtime Voice API for support centers, call routing, or interactive voice assistants.

- Monitor vendor alliances: Track moves like Meta + Midjourney; expect tighter platform ecosystems and plan integration strategies accordingly.

- Prepare for cost shifts: NVIDIA’s algorithm may drastically lower compute expenses—consider revisiting AI project ROI assumptions in light of potential cost reductions.

- Experiment with AI video and robotics: Krea’s real-time video generation and Unitree’s heavy-lift robotics highlight emerging opportunities in media, logistics, and operations.

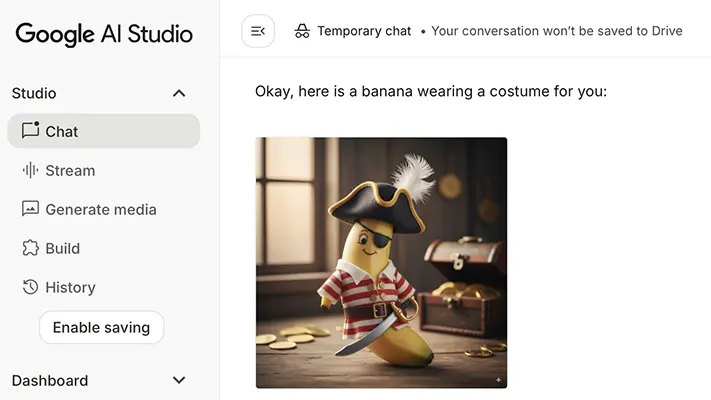

Here’s what the tech media and AI community are saying about Google’s newly launched “Nano Banana” (officially Gemini 2.5 Flash Image):

Tech Coverage Highlights

- Axios reports that Google confirmed the viral “Nano Banana” image‑editing tool and is rolling it out via the Gemini app on web and mobile. Free users can make up to 100 edits per day; paid users get 10× more. The tool excels at refining existing images, making nuanced edits like multi-step room renovations or blending two images effectively—while preserving the original subject’s identity. (Axios)

- Tom’s Guide praises its ability to maintain accurate likenesses of people and pets even amid dramatic style or background changes. It supports features like multi-turn editing (iterative adjustments), style mixing (transferring patterns/textures), and is globally available to free and paid users, with visible and invisible (SynthID) watermarks to denote AI‑generated content. (Tom’s Guide)

- The Economic Times emphasizes the upgrade's intuitive, versatile visual editing within Gemini, positioning it as a major step in Google's push into multimedia generative AI. (The Economic Times)

Commentary & Context

- TechCrunch frames this as Google’s strategic leap to compete with OpenAI in image editing, offering “much better ability to follow instructions” and seamless edits—particularly in preserving likeness, a challenge for rival tools. The tool has drawn attention for topping benchmarks like LMArena even before being formally revealed as Google’s. (TechCrunch)

- The Google Developers Blog highlights that Gemini 2.5 Flash Image enables:

  - Maintaining character consistency across edits,

  - Natural language–prompted, localized transformations,

  - Multi-image fusion, and

  - "World knowledge" to understand context in editing operations.

  It's available via the Gemini API, Google AI Studio, and Vertex AI, priced at approximately $0.039 per image (1,290 output tokens), and includes watermarking for transparency. (Google Developers Blog)
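The per-image price follows from token-based billing, and developers can use it to estimate spend for a given volume. The sketch below is a rough estimate. It assumes output is billed at $30 per million tokens, the rate that reproduces the ~$0.039 figure; verify against Google's current pricing before relying on it. The 500-images-per-day volume and the function names are hypothetical.

```python
# Rough cost estimate for Gemini 2.5 Flash Image output.
# Assumption: output billed at USD 30 per 1M tokens (the rate that
# reproduces the ~$0.039/image figure); check current pricing before use.
TOKENS_PER_IMAGE = 1290
USD_PER_MILLION_OUTPUT_TOKENS = 30.0


def cost_per_image(tokens: int = TOKENS_PER_IMAGE,
                   usd_per_million: float = USD_PER_MILLION_OUTPUT_TOKENS) -> float:
    """Return the estimated USD cost of generating one image."""
    return tokens * usd_per_million / 1_000_000


def monthly_cost(images_per_day: int, days: int = 30) -> float:
    """Estimate monthly spend for a hypothetical daily image volume."""
    return images_per_day * days * cost_per_image()


print(f"{cost_per_image():.4f}")   # 0.0387
print(f"{monthly_cost(500):.2f}")  # 580.50
```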

- PC Gamer takes a more cautious tone, noting that while the realism and consistency of the edits are impressive (“frankly alarming consistency”), there’s concern about deepfake risks. The “multi-turn editing” feature (sequentially adjusting parts of an image without noticeable drift) is a standout. (PC Gamer)

- Ars Technica confirms the model’s rapid climb to the top of LMArena’s leaderboard, underlining its technical acclaim in the AI benchmarking community. (Ars Technica)

Community Buzz

- On Reddit (r/ArtificialIntelligence), users were quick to celebrate the consistency of character rendering. One user remarked:

“Its capabilities at keeping the characters consistent is extreme! Some people are claiming it has made Photoshop … obsolete.”

While Photoshop offers a far broader toolkit, many community members see a fundamental shift in how photo editing is being approached. (Reddit)

Summary of Sentiment

| Source | Key Takeaway |
| --- | --- |
| News Outlets | Emphasize usability, fidelity, and watermarking features; broad rollout. |
| Tech Press | Applaud instruction-following, benchmark dominance, and expressive control. |
| Developers | Highlight API access, pricing, and creative flexibility. |
| Gaming Media | Spot potential misuse and deepfake concerns despite technical impressiveness. |
| Community (Reddit) | Celebrate its consistency; see disruptive editing potential. |

Bottom Line

Nano Banana (Gemini 2.5 Flash Image) is being heralded as a technically advanced and user-friendly leap in AI image editing, especially for its ability to preserve identity across creative transformations. It's widely available, competitively priced for developers, and has already achieved benchmark leadership. Excitement is widespread, but thoughtful observers are flagging the deepfake implications and urging responsible use.

https://aistudio.google.com/prompts/new_chat?model=gemini-2.5-flash-image-preview (September 2, 2025)

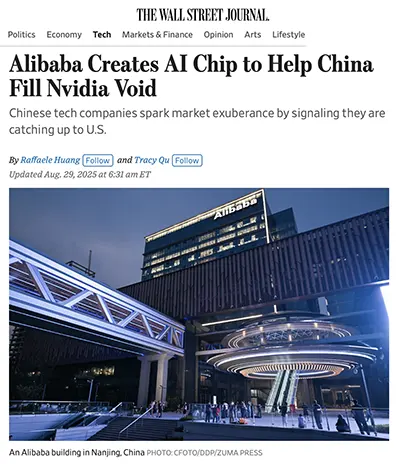

Alibaba Creates AI Chip to Help China Fill NVIDIA Void (WSJ)

Executive Summary:

Alibaba has developed a new AI inference chip to reduce China’s reliance on NVIDIA amid U.S. restrictions. While not as advanced as Nvidia’s top chips, Alibaba’s processor, along with efforts from MetaX and Cambricon, aims to substitute for Nvidia’s H20. Beijing is investing heavily in AI hardware self-sufficiency, with Huawei also showcasing large-scale clusters powered by Ascend chips. Alibaba plans to invest $53 billion over three years in AI and cloud, underscoring China’s intent to challenge U.S. dominance.

Relevance for Business:

Geopolitical rivalries are fueling rapid advances in China’s semiconductor ecosystem. Global firms must prepare for a bifurcated AI hardware market, where U.S. and Chinese ecosystems diverge in standards, supply chains, and compatibility.

Calls to Action:

- Assess supply chain exposure to U.S.–China chip tensions.

- Monitor Chinese alternatives to Nvidia for cost, performance, and compatibility.

- Anticipate long-term implications of a dual-track AI hardware ecosystem.

This Year Will Be the Turning Point for AI College (The Atlantic)

Executive Summary:

The class of 2026 has experienced nearly all of college under the shadow of generative AI. Surveys show 92% of undergraduates already use AI for assignments, summarization, and study aids, making it as ubiquitous as social media. Professors remain divided—some banning online work, others redesigning curricula—but the shift is irreversible. Students see AI less as a cheat and more as a practical tool for coping with academic and career pressures.

Relevance for Business:

Tomorrow’s workforce will emerge deeply accustomed to AI as a daily tool. Employers should expect graduates to bring AI fluency into the workplace, but also potential overreliance if training and ethical guidance are not provided.

Calls to Action:

- Anticipate incoming employees with ingrained AI habits.

- Provide AI literacy and ethics training for new hires.

- Adapt recruitment to assess real human skills, not just AI-assisted performance.

The AI Takeover of Education Is Just Getting Started (The Atlantic)

Executive Summary:

AI is rapidly embedding itself in K–12 education. Students use chatbots for homework, exam prep, and personalized study guides, while teachers employ AI for lesson plans, grading, and administrative work. Tools like MagicSchool AI have millions of teacher users, and districts like Miami are rolling out Gemini in classrooms. Meanwhile, policymakers and corporations—from Microsoft to OpenAI—are investing billions to mainstream AI in schools, even as concerns about cheating, bias, and quality persist.

Relevance for Business:

The next generation of workers will not only be AI-native but AI-trained in formal schooling. Companies must prepare for both the opportunities of this literacy and the risks of shallow learning or dependency.

Calls to Action:

- Partner with education systems to shape responsible AI use.

- Support balanced AI curricula that emphasize critical thinking.

- Prepare workforce pipelines for AI-native generations.

AI Will Never Be Your Kid’s Friend (The Atlantic)

Executive Summary:

This article argues that AI chatbots, marketed as “friends” for children, deprive kids of crucial opportunities to learn social and emotional skills. While AI companions provide constant validation and frictionless interactions, they eliminate the challenges—arguments, negotiations, reconciliations—that help young people develop empathy and resilience. Cases of harm, such as inappropriate conversations or lawsuits over teen suicides linked to chatbots, highlight risks, but the deeper issue is that even “safe” AI relationships lack authenticity and growth potential.

Relevance for Business:

Companies developing AI companions face ethical scrutiny similar to that experienced by social media firms a decade ago. Parents, educators, and policymakers may demand stronger guardrails, while businesses targeting youth markets risk reputational harm if they promote AI “friendship” as a substitute for real human bonds.

Calls to Action:

- Reevaluate products targeting children and teens to ensure ethical safeguards.

- Position AI tools as supplements for education and creativity, not companionship.

- Anticipate regulatory pressure around child-focused AI products.

The AI Cheating Panic Is Missing the Point (Washington Post)

Executive Summary:

Gen Z students know that using AI to cheat on essays is wrong, but the fixation on “AI cheating” obscures the real challenge: how to teach responsible, effective AI use. Surveys show 53% of K–12 schools lack AI policies, leaving students anxious and unsure how to integrate AI in learning. Students want clear guidelines, AI literacy courses, and opportunities to use AI for brainstorming, note-taking, and research, not just as a forbidden shortcut.

Relevance for Business:

Workforce readiness hinges on AI fluency, not prohibition. Organizations will benefit from graduates trained in ethical, practical AI use rather than students stigmatized into underuse or misuse.

Calls to Action:

- Support AI literacy and training programs in schools.

- Develop clear internal guidelines for responsible AI use.

- Partner with educators to shape a workforce ready for AI collaboration.

A Better Way to Think About AI (The Atlantic)

Executive Summary

This article by David Autor and James Manyika argues that the future of AI should not be seen solely through the lens of automation, but rather as a chance to build collaborative systems that amplify human expertise. Using examples from radiology, aviation, and healthcare, the authors show how overreliance on automation often fails, while tools that enable collaboration—like heads-up displays for pilots—can enhance human decision-making. The key message is that AI should be designed to complement, not replace, human judgment, preserving critical expertise while expanding its reach.

Relevance for Business

For SMB executives, the lesson is clear: blind automation can erode employee skills, create hidden risks, and fail in high-stakes moments. By contrast, AI-as-collaborator strengthens decision-making, maintains human expertise, and drives better outcomes. Businesses that design workflows around collaboration rather than substitution will be better positioned to sustain performance and resilience.

Calls to Action

- Evaluate whether current AI tools in use are designed for collaboration or full automation.

- Train teams to integrate AI outputs critically, not blindly.

- Prioritize vendor solutions that emphasize transparency, reasoning, and explainability.

- Consider AI’s role in employee development, ensuring that reliance on machines does not erode critical skills.

xAI vs. Apple and OpenAI

Future of AI: AGI and Superintelligence (First Movers – Aug 28, 2025)

Executive Summary:

On August 25, 2025, Elon Musk's xAI filed a 61-page lawsuit against Apple and OpenAI, escalating what Julia McCoy's First Movers podcast calls the "nuclear AI war." The suit accuses Apple of secretly feeding billions of Siri interactions into OpenAI's ChatGPT and manipulating App Store rankings to suppress Musk's Grok AI assistant. According to the podcast, this is the culmination of three stages. First came Musk's split from OpenAI in 2018. Next came empire-building on both sides, with OpenAI rising to a $500B valuation and xAI merging with X (formerly Twitter). Now the two sides are in open legal warfare over who controls AI access. The battle exposes a broader issue: a handful of tech giants control which AI tools billions of users can access and how information is filtered.

Relevance for Business:

This conflict signals that competition over AI platforms is shifting from innovation to battles over market access. For SMB leaders, the outcome will determine pricing, availability, and trust in AI assistants. It will also shape regulatory scrutiny and consumer choice in the AI marketplace.

Calls to Action:

- Track legal and antitrust developments around Apple, OpenAI, and xAI—these will define AI market access.

- Avoid over-reliance on one ecosystem; diversify AI tool adoption across vendors.

- Prepare for volatility: lawsuits, platform manipulation, and policy changes could reshape access and cost structures overnight.

Mark Zuckerberg Just Declared War on the iPhone (WSJ)

Executive Summary:

Meta CEO Mark Zuckerberg outlined a vision of “personal superintelligence” embedded in smartglasses, aiming to unseat the iPhone as the primary computing device. Meta is investing heavily, recruiting top AI talent with $100 million packages, and betting that multimodal glasses that see, hear, and interact will replace screen-based smartphones. Rivals like Apple remain cautious, while Amazon and OpenAI are also exploring new wearable form factors.

Relevance for Business:

If successful, this shift could redefine the tech hardware market and reshape consumer ecosystems around AI-first devices. Businesses will need to adapt marketing, apps, and services to new multimodal, always-on interfaces.

Calls to Action:

- Explore how AI-first wearables could disrupt customer access points.

- Prepare products/services for multimodal, context-aware platforms.

- Monitor competitive plays from Meta, Apple, Amazon, and OpenAI.

What You May Have Missed About GPT-5 (MIT Technology Review)

Executive Summary:

Despite high expectations, GPT-5 has landed as more of a product update than a revolution. Early users report flaws in its “automatic model selection” and ongoing hallucinations, though sycophancy has improved. OpenAI is increasingly positioning GPT-5 for application-specific domains, particularly health advice, raising ethical and liability questions after cases of harmful guidance.

Relevance for Business:

GPT-5 suggests that AI progress may be plateauing, with marginal improvements marketed as breakthroughs. At the same time, its push into healthcare underscores the regulatory and ethical scrutiny businesses must anticipate when AI enters high-stakes domains.

Calls to Action:

- Manage expectations around “next-gen” AI models.

- Watch for legal/regulatory action on AI in healthcare.

- Apply AI in high-stakes fields cautiously with human oversight.

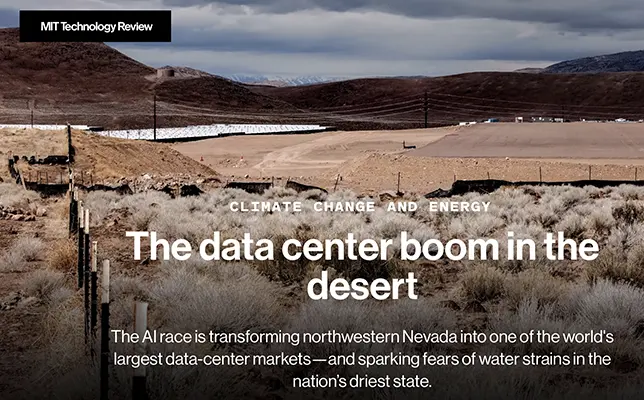

The Data Center Boom in the Desert (MIT Technology Review)

Executive Summary:

Northwestern Nevada is rapidly transforming into one of the world’s largest data center hubs as companies like Google, Apple, Microsoft, and OpenAI race to secure land and energy resources in the Tahoe Reno Industrial Center. The scale is staggering: 13 million square feet under construction, equal to nearly five Empire State Buildings, with demand for six gigawatts of additional electricity—requiring Nevada’s power sector to expand by 40%. What began as a real estate gamble in the late 1990s has turned into a strategic anchor for the AI revolution, drawing in billions in investment and state tax incentives.

Yet, this growth comes with mounting concerns. Nevada is the driest U.S. state, already facing droughts and declining groundwater. The Pyramid Lake Paiute Tribe warns that new water demands threaten its cultural lifeblood, while scientists estimate billions of gallons annually could be consumed directly or indirectly through cooling and electricity generation. Companies tout efficiency measures like air cooling or closed-loop systems, but experts argue this only shifts the burden to power plants, making the true water cost far higher.

The boom pits short-term economic wins—jobs, tax revenue, diversification—against long-term sustainability risks. Residents and environmental groups fear data center demands will dictate water and energy policy, exacerbating megadrought conditions and sparking legal battles over resource rights. This local struggle foreshadows a global tension: as AI accelerates, can infrastructure expansion keep pace without exhausting natural resources?

Relevance for Business:

The Nevada boom illustrates the hidden costs of AI infrastructure. For SMBs, rising AI adoption depends not only on software advances but on the physical limits of power and water. Data center expansion may drive energy prices up, trigger regulatory shifts, and invite scrutiny from stakeholders concerned about sustainability. Businesses that rely on cloud and AI services should anticipate environmental constraints becoming cost and compliance factors.

Calls to Action:

- Audit cloud vendors for sustainability commitments and transparency.

- Factor potential cost increases from energy and water demands into AI budgets.

- Support renewable and efficient infrastructure development through partnerships or procurement policies.

- Monitor environmental regulations tied to AI infrastructure—these could reshape pricing and availability.

Why AI Is Vulnerable to Data Poisoning—and How to Stop It (Fast Company)

Executive Summary:

Data poisoning—feeding false or malicious inputs into AI training—remains a critical vulnerability. Attacks can corrupt models over time, as seen in the Microsoft Tay chatbot fiasco, leading to harmful or biased outputs. New defenses like federated learning and blockchain-based validation offer decentralized safeguards, helping isolate poisoned data and trace its origins.

Relevance for Business:

Companies adopting AI must treat data integrity as a security priority. As AI scales across industries, poisoned data could undermine performance, safety, and trust.

Calls to Action:

- Audit AI training pipelines for poisoning risks.

- Invest in decentralized defenses like federated learning and blockchain.

- Build anomaly detection to catch compromised data early.
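The article does not specify an anomaly-detection method. One simple, widely used option is the modified z-score. It scores each training sample by how far a per-sample statistic sits from the median, scaled by the median absolute deviation (MAD). Examples of such a statistic include the sample's loss under a trusted reference model or its distance from a class centroid. The sketch below assumes such a statistic is already available. The loss values are hypothetical, and the 3.5 cutoff is a common rule of thumb rather than a tuned setting. Treat it as a first-pass screen only, because carefully crafted poisoned samples can look statistically ordinary.

```python
from statistics import median


def flag_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation. Median and MAD are robust, so a few extreme
    (possibly poisoned) samples do not mask themselves by inflating the scale.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to score against; inspect such data separately
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - med) / mad) > threshold]


# Hypothetical per-sample losses from a reference model; index 6 is suspicious.
losses = [0.42, 0.39, 0.45, 0.41, 0.40, 0.43, 3.90, 0.38]
print(flag_outliers(losses))  # [6]
```

Flagged samples should be routed to human review or provenance checks rather than deleted automatically. Legitimate rare data can also score as outliers.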

Even Nvidia Has Speed Limits (WSJ)

Executive Summary:

Nvidia reported record revenue and profit, with data center sales up 56% year-over-year, yet this was its slowest growth in two years. Despite a $4 trillion market cap and strong demand for Blackwell chips, geopolitical challenges loom. The Trump administration reversed course to allow limited chip sales to China, but regulations and trade war risks persist. Nvidia retains pricing power with gross margins above 70%, though competitors like AMD, in-house chips from Amazon/Google, and new startups are challenging its dominance.

Relevance for Business:

Nvidia’s performance highlights both the resilience and vulnerability of AI’s core infrastructure provider. Firms reliant on Nvidia must prepare for supply chain and geopolitical risks, as well as rising costs if trade disputes escalate.

Calls to Action:

- Diversify chip sourcing strategies beyond Nvidia.

- Monitor U.S.-China trade policies that may impact AI hardware access.

- Factor hardware cost volatility into AI adoption roadmaps.

Meta Freezes AI Hiring After Blockbuster Spending Spree (WSJ)

Executive Summary

Meta has placed a freeze on AI hiring after aggressively adding more than 50 researchers and engineers in recent months, including high-profile recruits from OpenAI, Google DeepMind, and Anthropic. The decision follows investor concerns over spiraling costs, especially stock-based compensation packages reportedly reaching into the billions. Internally, Meta reorganized its AI division into four units—superintelligence, products, infrastructure, and fundamental research—reflecting Mark Zuckerberg’s ambition to lead in “superintelligence.” The freeze underscores the tension between rapid AI expansion and shareholder demands for fiscal discipline.

Relevance for Business

SMB leaders should note how runaway AI spending can unsettle investors and destabilize strategy. Even industry giants must balance ambition with financial sustainability. This highlights the importance of disciplined budgeting, prioritization, and governance in AI initiatives.

Calls to Action

- Align AI investment with clear ROI benchmarks to avoid unchecked cost overruns.

- Monitor talent markets, as freezes at large firms may create opportunities for SMBs to recruit skilled AI professionals.

- Diversify AI partnerships rather than relying on one vendor’s trajectory.

- Incorporate financial oversight and scenario planning into AI roadmaps.

Meta's Superintelligence AI SWAT Team Is Now Called TBD Lab (WSJ)

Executive Summary:

Meta has consolidated its AI efforts under "Meta Superintelligence Labs," with a core team dubbed TBD Lab leading development of new frontier models like Llama 4.5. The group is overseen by Chief AI Officer Alexandr Wang, who was brought in via a $14B deal with Scale AI. It is aggressively recruiting talent from OpenAI and Google, offering pay packages worth hundreds of millions of dollars and, in some cases, reportedly $1 billion or more. Its mission: deliver "personal superintelligence" and extend AI reasoning and agent capabilities.

Relevance for Business:

Meta’s investment signals intensifying competition for top AI talent and frontier model development. Businesses should expect rapid advances but also escalating costs and consolidation among fewer, more powerful AI providers.

Calls to Action:

- Track Meta’s Llama roadmap for ecosystem impacts.

- Anticipate rising AI costs as salaries and R&D escalate.

- Prepare for AI consolidation around mega-labs with vast resources.

Meta’s Response to FTC Monopoly Claim: Social Media Doesn’t Exist (Fast Company)

Executive Summary:

Meta’s defense against the FTC’s antitrust case is that “personal social networking” no longer exists. Instead, user behavior has shifted to algorithmic content discovery, short-form video, and group messaging, leaving the traditional friend-based feed behind. Meta argues that competition with TikTok and YouTube undermines the FTC’s monopoly claim, while also admitting that AI-driven “unconnected” content dominates its platforms.

Relevance for Business:

This case shows how AI-driven content has transformed the definition of “social media.” For executives, it highlights the importance of adapting to platforms where discovery algorithms—not social graphs—drive engagement.

Calls to Action:

- Reassess marketing strategies for algorithm-first platforms.

- Monitor antitrust outcomes shaping digital competition rules.

- Anticipate rising scrutiny of AI-driven recommendation systems.

The Trouble With Agent, ChatGPT’s New Web-Browsing AI (Fast Company)

Executive Summary:

OpenAI’s Agent promises autonomous web-browsing, but current results are clunky. In testing, Agent took 13 minutes to search flights, miscompiled recipes, and failed to log into sites, frequently blocked by CAPTCHAs. While it reveals the vision of “agentic AI” handling tasks independently, today’s internet is not built for bots, and privacy concerns about handing over logins further complicate adoption.

Relevance for Business:

Agent highlights both the promise of AI automation and the barriers to it. Companies betting on autonomous AI must contend with structural roadblocks, security risks, and limited user patience before such tools can scale.

Calls to Action:

- Monitor agentic AI progress but temper expectations.

- Invest in API-based integrations as a more reliable automation path.

- Establish strong data privacy protocols for AI tools requiring credentials.

How High-Performing Teams Use AI Differently (Fast Company)

Executive Summary:

Research into high-performing teams reveals that success doesn’t hinge on mastering the latest AI tools but on habits that integrate AI thoughtfully. These teams build human “power skills” (judgment, curiosity, ethics), focus on outcomes over optics, and create structured space for experimentation. Case studies show leaders creating rituals like “Output vs. Outcome Fridays” and “AI Experiments” in retrospectives to align purpose and encourage exploration.

Relevance for Business:

The real competitive edge lies in how teams use AI, not which tools they adopt. Embedding trust, clarity, and learning alongside AI boosts resilience, creativity, and long-term performance.

Calls to Action:

- Build AI literacy while prioritizing irreplaceable human skills.

- Shift from performative productivity to outcome-driven metrics.

- Create low-stakes, high-learning environments for AI experimentation.

How AI Is Killing (and Reinventing) Middle Management (Fast Company)

Executive Summary:

AI is accelerating “unbossing”—flattening corporate hierarchies by eliminating layers of middle management. Gartner predicts 20% of firms will halve middle management roles by 2026, as AI automates scheduling, coordination, and monitoring. Yet the role isn’t disappearing—it’s being reinvented. Middle managers of the future will be orchestrators of AI-human collaboration, change agents, and coaches guiding employees through reskilling and adaptation.

Relevance for Business:

Organizations that indiscriminately slash management risk losing institutional knowledge and cultural glue. The winners will reimagine management as a hybrid role that amplifies both AI and human contributions.

Calls to Action:

- Reskill managers in AI literacy, collaborative leadership, and coaching.

- Redefine success metrics for managers beyond headcount and hierarchy.

- Pilot “AI workflow analysis” to test where management tasks can be automated or augmented.

AI Is Changing Work Even More Than You Think (Fast Company)

Executive Summary:

AI’s impact goes beyond automating tasks—it is reshaping workplaces, management roles, and even organizational missions. From replacing middle managers to restructuring the concept of the office, AI is forcing businesses to rethink fundamental assumptions about leadership, culture, and value delivery. The disruption isn’t just technological; it’s cultural, with implications for identity, collaboration, and trust within organizations.

Relevance for Business:

Executives must prepare for structural upheaval. Organizations that only approach AI as a tool for efficiency risk missing the deeper transformation: AI will redefine corporate hierarchies, strategies, and employee expectations.

Calls to Action:

- Reimagine leadership roles in an AI-augmented organization.

- Invest in change management strategies to navigate cultural disruption.

- Explore new business models that align with AI-driven value creation.

The Do’s and Don’ts of Vibe Coding (Fast Company)

Executive Summary:

Vibe coding, which uses natural-language prompts to generate working software, is democratizing app development. Founders can now build MVPs in hours, test ideas quickly, and empower nontechnical teams to solve problems. But limits remain: vibe-coded apps often lack scalability, security, and quality control, so production-grade work still requires professional developers.

Relevance for Business:

For SMBs, vibe coding offers a low-cost entry to prototyping and experimentation. However, firms must recognize its limits and know when to transition from no-code AI tools to professional engineering.

Calls to Action:

- Use vibe coding for rapid prototyping and internal tools.

- Budget for professional dev teams when scaling.

- Establish security and compliance checks before public launches.

AI Is Revealing Just How Much Work Doesn’t Need to Exist in the First Place (Fast Company)

Executive Summary:

This article introduces the idea of the “BS economy,” drawing from David Graeber’s concept of “bullshit jobs.” AI highlights inefficiencies by automating low-value tasks—PowerPoints, reports, meeting notes—that often don’t drive business outcomes. Despite productivity boosts for individuals, many AI pilots fail to improve P&L, exposing entire categories of work that add little organizational value.

Relevance for Business:

AI adoption forces leaders to confront whether certain roles or tasks should exist at all. This raises cultural, organizational, and ethical questions: Will firms eliminate wasteful work, or will they cling to outdated structures despite automation’s potential?

Calls to Action:

- Conduct audits to identify “BS jobs” and redundant workflows.

- Shift focus from task automation to business value creation.

- Reinvest productivity gains into innovation, not bureaucracy.

AI Passed the Aesthetic Turing Test, Raising Big Questions for Art (Fast Company)

Executive Summary:

AI-generated images have now passed the “aesthetic Turing Test,” fooling viewers into believing machine-made art is human-created. A Guess advertisement in Vogue featuring an AI-generated model sparked backlash for undercutting diversity and authenticity in fashion. As AI’s ability to produce convincing “art” accelerates, society must reconsider what it values in creativity: originality, human expression, or flawless aesthetics.

Relevance for Business:

Industries reliant on creativity—fashion, advertising, media—face disruption. While AI art offers cost and efficiency advantages, overreliance risks alienating consumers, damaging brand trust, and sparking legal and cultural battles over originality and representation.

Calls to Action:

- Audit creative workflows to balance AI cost savings with authentic human input.

- Develop transparency policies for AI-generated marketing or branding materials.

- Monitor consumer sentiment closely, especially around representation and diversity.

Reddit—and a Dash of AI—Do What Google and ChatGPT Can’t (Fast Company)

Executive Summary:

Reddit is positioning itself as a true search destination through Reddit Answers, an AI-powered tool that compiles community discussions into coherent responses. Unlike pure AI outputs, Reddit’s results are grounded in authentic user expertise and linked to ongoing conversations. With strong growth (110M daily users, 21% YoY increase), Reddit is leveraging its human-centered knowledge base as a counterweight to “dead internet” fears of AI-generated slop.

Relevance for Business:

Reddit demonstrates the value of human+AI hybrid models in information retrieval. Companies can learn from its approach to trust, community validation, and licensing deals with Google/OpenAI.

Calls to Action:

- Explore human+AI hybrid models in customer service and knowledge management.

- Consider Reddit as an emerging channel for search and brand visibility.

- Monitor “Dead Internet” risks and prioritize authentic, human-grounded content.

The Dead Internet Theory: Is TikTok Suddenly Making It Come True? (Fast Company)

Executive Summary:

The “Dead Internet” theory—that AI-generated content will overwhelm human voices online—is gaining traction. TikTok feeds are increasingly saturated with AI-generated “slime,” from scripts to imagery. Worse, platforms may accelerate this trend: TikTok is testing AI influencers for ad campaigns, and Instagram is piloting AI chatbots for creators. Experts warn that by 2026, up to 90% of online content could be synthetic.

Relevance for Business:

This shift poses major challenges for advertisers and brands. If engagement is driven by bots, marketing spend risks being wasted on synthetic audiences, undermining trust and ROI.

Calls to Action:

- Audit ad placements to avoid bot-driven engagement.

- Prioritize authenticity in branding and influencer partnerships.

- Develop safeguards against AI “slop” degrading customer trust.

Do AI Companies Actually Care About America? (The Atlantic)

Executive Summary:

This article explores how AI firms like OpenAI, Anthropic, Meta, and Palantir are branding their work as “democratic AI,” aligning themselves with American values while courting the Trump administration. The rhetoric frames AI as a geopolitical clash with China, but in practice, companies often prioritize market growth, data capture, and government contracts over democratic ideals. Their deep ties to military projects and lobbying for lighter regulation echo the rise of social media giants, raising questions about whether they are advancing democracy—or just their own interests.

Relevance for Business:

Executives should note the politicization of AI development. Companies leveraging patriotic branding may gain government favor and contracts but also risk reputational damage if seen as opportunistic. Businesses dependent on AI vendors must recognize that geopolitics, not just technology, increasingly shapes the AI marketplace.

Calls to Action:

- Assess vendor partnerships for long-term stability amid political shifts.

- Prepare for regulatory whiplash as policies swing between administrations.

- Track how “democratic AI” narratives affect procurement, investment, and trust.

Tech Billionaires Are Building Their Own Private Cities (Fast Company)

Executive Summary:

A new wave of billionaire projects is reshaping land and governance: Elon Musk has incorporated Starbase in Texas and controls Snailbrook, while Mark Cuban owns Mustang, Texas. Collectives like California Forever (backed by Marc Andreessen, Chris Dixon, Reid Hoffman, and others) aim to develop vast communities blending housing and advanced manufacturing. Meanwhile, Larry Ellison owns most of Lanai in Hawaii, Peter Thiel backs “startup city” Praxis, Mark Zuckerberg is building a fortified Hawaiian compound, and Bill Gates’s Arizona “smart city” has stalled. These initiatives reveal how private wealth is increasingly extending into governance, infrastructure, and community development.

Relevance for Business:

For executives, these projects signal both opportunities and risks: new markets for technology, construction, and services, but also heightened regulatory, social, and ethical scrutiny. SMBs may find openings in supply chains, infrastructure development, and community partnerships, but must also anticipate the long-term implications of private governance models.

Calls to Action:

- Track emerging private city projects for potential B2B opportunities.

- Assess how private governance experiments could reshape local regulations.

- Consider reputational impacts when partnering with billionaire-led developments.

- Position products/services to serve self-contained ecosystems that prioritize autonomy, sustainability, and resilience.

Cognition Offers Windsurf Staff the Exit Door (TechCrunch)

Executive Summary:

Three weeks after acquiring Windsurf, AI coding startup Cognition laid off 30 employees and offered buyouts to the remaining ~200 staff. Despite earlier promises to value “world-class people,” the moves suggest the deal was aimed primarily at securing Windsurf’s intellectual property. Those who stay face grueling 80+ hour weeks, underscoring the cutthroat conditions in elite AI labs.

Relevance for Business:

The Windsurf saga highlights consolidation pressures in AI and the human cost of the talent race. Acquisitions may focus more on IP than people, reshaping industry culture and expectations.

Calls to Action:

- Monitor consolidation trends in AI tooling and coding startups.

- Evaluate vendor stability amid rapid acquisitions and restructurings.

- Factor talent retention risks into partnerships with high-growth AI firms.

How AI Can Prevent Satellite Disasters in Space (Fast Company)

Executive Summary:

James Murphy of Réaltra Space Systems describes how AI anomaly detection can prevent costly satellite failures in mega-constellations like Starlink. By embedding lightweight AI models directly on spacecraft, operators can detect malfunctions in power, communications, or sensors before they cascade into major losses. This “edge AI” reduces reliance on Earth-based monitoring and addresses the challenges of scaling to thousands of satellites.

Relevance for Business:

AI’s role in space tech extends beyond science fiction: satellite health monitoring is a critical business application. With billions invested in telecom and navigation constellations, integrating AI can prevent catastrophic losses, improve uptime, and protect return on investment.

Calls to Action:

- Consider cross-sector lessons from space anomaly detection for IoT and critical infrastructure.

- Explore edge AI applications for predictive maintenance.

- Monitor aerospace AI partnerships as models for terrestrial industries.

https://www.fastcompany.com/91388212/an-engineer-explains-how-ai-can-prevent-satellite-disasters-in-space: September 2, 2025

Here’s How AI Can Fix the Prior Authorization Problem (Fast Company)

Executive Summary:

Prior authorization delays in U.S. healthcare harm patients and burn out clinicians, with 93% of doctors saying it hurts outcomes. Agent-based AI is now slashing turnaround times by 50%, automating 76% of requests, and saving thousands of hours monthly for mid-size health plans. Rather than replacing people, AI handles document-heavy work, enabling doctors and nurses to focus on care. Because the technology already exists, reforms slated for 2027 could be implemented now.

Relevance for Business:

Healthcare leaders and insurers that adopt AI now can gain efficiency, improve patient trust, and differentiate themselves in a slow-to-modernize industry. Those who wait risk reputational and financial costs as patients demand faster access to care.

Calls to Action:

- Deploy AI systems to streamline prior authorization and claims processing.

- Redesign operations to integrate AI-human collaboration.

- Move quickly to establish leadership before competitors scale AI solutions.

Figma’s World Is Growing Fast (Fast Company)

Executive Summary:

Figma, once a single-product design platform, is rapidly expanding into AI-powered coding, website building, marketing, and illustration. At its 2025 Config conference, Figma unveiled Figma Make (prompt-to-code), Figma Sites (web hosting/CMS), Figma Buzz (AI-assisted marketing assets), and Figma Draw (vector illustration). CEO Dylan Field framed this expansion not as a land grab but as staying true to Figma’s mission: helping humans design better. Figma is now competing with Adobe, Canva, GitHub, and Wix simultaneously.

Relevance for Business:

Figma’s expansion signals how design and productivity tools are converging with AI to become all-in-one platforms. Companies should anticipate shifts in licensing, workflow integration, and vendor competition as platforms consolidate capabilities.

Calls to Action:

- Evaluate design and collaboration tool stacks for overlap with Figma’s new offerings.

- Consider cost/benefit of consolidating onto integrated AI platforms.

- Watch how rivals (Adobe, Canva, GitHub) adapt to increased competition.

How to Spot Bad AI Writing Using Wikipedia’s New List (Fast Company)

Executive Summary:

Wikipedia editors have published Signs of AI Writing, a detailed guide cataloging clichés, tropes, and stylistic tics of LLM-generated text. Examples include exaggerated symbolism, formulaic “rule of three” phrasing, and overuse of transitions like “in conclusion.” This effort highlights the rising threat of “AI slop” infiltrating trusted sources and the arms race between AI-generated content and detection tools.

Relevance for Business:

As AI-written content proliferates, brand credibility is at risk. Organizations must safeguard communications, marketing, and knowledge bases against formulaic or misleading AI prose that can erode trust with customers and stakeholders.

Calls to Action:

- Monitor Wikipedia and similar platforms for reputation-related risks.

- Train teams to identify and edit AI-generated writing flaws.

- Establish editorial standards and authenticity checks for AI-assisted content.

https://www.fastcompany.com/91392747/want-disguise-your-ai-writing-start-wikipedias-new-list: September 2, 2025

Wikipedia: Signs of AI Writing (Wikipedia)

Executive Summary:

Wikipedia’s Signs of AI Writing page provides a field guide for spotting AI-generated text, cautioning editors not to rely solely on detection tools like GPTZero due to high error rates. Instead, it lists common markers—such as puffery, overuse of connectors, weasel wording, editorializing, and formatting quirks (e.g., boldface, title-case headings, Markdown leakage)—that often indicate undisclosed AI use. While these signs are not proof, they are red flags requiring closer human review to protect Wikipedia’s neutrality and reliability.

Relevance for Business:

The guide reflects a broader challenge: AI-generated content often introduces bias, puffery, or subtle inaccuracies that can erode trust if unchecked. For SMB leaders using AI in content, marketing, or documentation, the lesson is clear—human oversight and editorial standards remain critical.

Calls to Action:

- Establish internal editorial standards for AI-assisted writing.

- Train staff to spot common “AI tells” in content.

- Pair AI use with robust human review to preserve credibility.

Here’s how tech media and the broader community have responded to Wikipedia’s new “Signs of AI Writing” guide:

Tech Media Reaction

Fast Company – “Want to disguise your AI writing? Start with Wikipedia’s new list”

This article calls Wikipedia’s guide a “master class in clichés, strange tropes, obsequious tones of voice, and other assorted oddities of AI-generated prose.” The author praises its specificity, highlighting how AI tends to overstate symbolism and significance with words like “majestic” and “fascinating,” and plods through formulaic patterns such as the “rule of three” or repeated concluding phrases like “In summary.” Rather than relying on superficial, easily gamed signals such as em dashes, the guide focuses on deeper stylistic patterns that read as robotically polished. (Fast Company)

TechSpot – “The subtle signs that give away chatbot writing, according to Wikipedia”

TechSpot highlights repetitive, overhyped phrases—AI tends to describe things as “important” or “historic” in a theatrical tone, which can tip off discerning readers to manufactured writing.

Complete AI Training – “How to Spot AI Writing: Wikipedia’s Guide to Common Clichés, Tropes, and Giveaways”

This recent coverage summarizes the key elements of the guide—overused praise, repetitive language, formulaic structure—and positions it as a practical tool for anyone wanting to detect or soften the telltale signs of AI-assisted text. (Complete AI Training)

Broader Context: AI Writing & Wikipedia’s Defense

The Washington Post – “Volunteers fight to keep ‘AI slop’ off Wikipedia”

Reports that Wikipedia editors are combating a deluge of AI-generated drafts stuffed with false citations and poor-quality writing. Volunteers have added warning labels to hundreds of articles and embraced a “speedy deletion” policy that bypasses the usual review delays for content clearly exhibiting AI telltale signs. Wikimedia leaders liken this vigilance to an immune system at work, preserving the site’s credibility.

The Verge – “How Wikipedia is fighting AI slop content”

Explains how the WikiProject AI Cleanup initiative uses a list of writing quirks—like em-dashes, curly quotes, promotional adjectives (“breathtaking”), or overly formal transition words (“moreover”)—to flag content. Editors stress that these markers alone aren’t enough; they must be contextualized with broader patterns of AI misuse. The piece also notes that Wikipedia is exploring tools like Edit Check (non-AI-powered) to help contributors catch tone or citation issues, and prevent unverified AI text submissions. (The Verge)

Summary of Sentiment & Utility

| Perspective | Insight |
| --- | --- |
| Tech Media | Applaud Wikipedia’s guide for its nuanced, stylistic approach to AI detection. |
| Platform Defenders | See the guide as one tool within a broader “immune response” to maintain quality. |
| Pragmatic Tool | Useful both for spotting AI-generated prose and for retooling it for authenticity. |

Takeaways for You

- Spot fake finesse: The guide teaches you that AI often writes in overly flattering, “majestic,” or “captivating” language to compensate for thin content—this is a red flag.

- Go beyond punctuation: It’s not just em-dashes or formatting—patterns in tone, transitions, and structure reveal more about machine-generated text.

- Use it defensively or adaptively: Whether you’re verifying authenticity or trying to humanize AI-generated content, these heuristics improve your editing radar—and help you intervene more effectively.

Conclusion: 9/2/25

This week’s stories collectively underscore a pivotal truth: AI is no longer just about algorithms, but about power, resources, and trust. From lawsuits to data centers, from classrooms to boardrooms, the challenge for business leaders is clear—adapt strategically, diversify your AI dependencies, and stay vigilant as the rules of this new economy are written in real time.
